
I want to match RGB & Depth

02-11-2018 07:00 PM


I am currently doing Kinect (Kinect v2) research at my university using Hero 3D.

I want to match the RGB and depth images. However, because their resolutions differ (1920x1080 vs. 512x424), it is difficult to match them.

So we split the calibrated point cloud into its points and its colors.

I checked the color values.

However, when I index the arrays by X and Y, the X, Y, and Z values of the points do not match.

Can you suggest an easier approach?

02-11-2018 07:37 PM


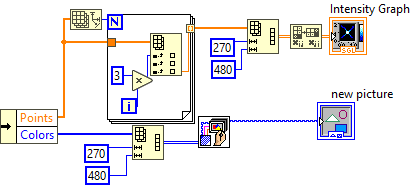

The point cloud structure consists of two 1D arrays: one of points and one of RGB colors. The length of the color array equals the product of the image dimensions you selected. The point array is 3X that size (because each point has X, Y, and Z components). You can reshape the arrays into 2D, but you have to take the 3X size into account. Below is an example of how to do it using the 480x270 resolution:

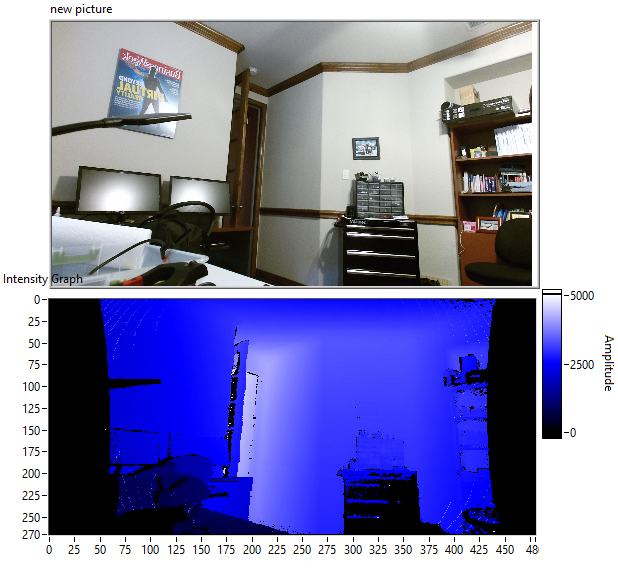
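For readers not using LabVIEW, the same reshape can be sketched in Python. This is a minimal sketch, not the Hero 3D API: it assumes the point array is interleaved as x0, y0, z0, x1, y1, z1, ... and that pixel i of the image sits at row i // width, column i % width (row-major). The function name `reshape_cloud` is hypothetical.

```python
def reshape_cloud(points, colors, width, height):
    """Reshape flat point-cloud arrays into row-major 2D grids.

    Assumption: points are interleaved (x, y, z per pixel), so the
    point array is 3x the length of the color array.
    """
    n = width * height
    if len(colors) != n or len(points) != 3 * n:
        raise ValueError("array lengths do not match the image size")

    # One color per pixel: slice the flat array into rows of `width`.
    color_grid = [colors[r * width:(r + 1) * width] for r in range(height)]

    # Three values per pixel: pixel i starts at offset 3 * i.
    point_grid = [
        [tuple(points[3 * (r * width + c):3 * (r * width + c) + 3])
         for c in range(width)]
        for r in range(height)
    ]
    return point_grid, color_grid


# Usage with dummy data at the 480x270 resolution.
w, h = 480, 270
pts, grid_colors = reshape_cloud([0.0] * (3 * w * h), [0] * (w * h), w, h)
```

With this layout, `point_grid[r][c]` and `color_grid[r][c]` refer to the same pixel, which is why the 3X factor has to be applied to the point index but not to the color index.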

The result is shown in the RGB image and the corresponding Z-value image.
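A Z-value image like the one described above can be pulled straight from the flat point array by taking every third value starting at offset 2. The sketch below assumes the same interleaved x, y, z layout and row-major pixel order as the reshape example; `z_image` and `pixel_at` are hypothetical helper names, not part of Hero 3D.

```python
def z_image(points, width, height):
    """Return a height x width grid holding the Z component of each point.

    Assumption: points are interleaved x, y, z, so Z for pixel i is at 3 * i + 2.
    """
    return [[points[3 * (r * width + c) + 2] for c in range(width)]
            for r in range(height)]


def pixel_at(points, colors, width, row, col):
    """Return (color, (x, y, z)) for one pixel of the calibrated cloud."""
    i = row * width + col  # row-major pixel index
    return colors[i], tuple(points[3 * i:3 * i + 3])
```

Because both arrays come from the same calibrated cloud, the color and the XYZ values returned for a given (row, col) describe the same pixel, so no separate resolution matching is needed.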

Good luck