CUDA driver error -359631 when running Multi-channel FFT example

04-24-2020 04:31 PM

Hi everyone. Complete newbie to GPU programming here; I want to do some image processing on a GPU. I downloaded the LabVIEW GPU Analysis Toolkit and I am getting the error below when I try to run the Multi-channel FFT example. Can anyone point me in the right direction for overcoming this error? I am running Win10 64-bit and LabVIEW 2019 64-bit. I downloaded the latest version of CUDA, version 10.2. The error says something about installing an updated NVIDIA display driver, so I also updated my display driver to the latest version. Suggestions? Since the LabVIEW GPU Analysis Toolkit hasn't been updated in a while, I'm wondering if I need to downgrade to an earlier version of CUDA. Thanks in advance for any help you can provide!

Error message follows:

"Error -359631 occurred at call to cudaRuntimeGetVersion in cudart64_102.dll.

Possible reason(s):

NVIDIA provides the following information on this error condition:

code:

cudaErrorInsufficientDriver = 35

comments:

This indicates that the installed NVIDIA CUDA driver is older than the CUDA runtime library. This is not a supported configuration. Users should install an updated NVIDIA display driver to allow the application to run.

library version supplying error info:

4.1

The following are details specific to LabVIEW execution.

library path:

C:\Program Files\NVIDIA GPU Computing Toolkit\CUDA\v10.2\bin

call chain:

-> lvcuda.lvlib:Get CUDA Runtime Version (cudaRuntimeGetVersion).vi:3630004

-> lvcuda.lvlib:Get CUDA Runtime Version.vi:230003

-> lvcuda.lvlib:Initialize Device.vi:3260001

-> Multi-channel FFT.vi

IMPORTANT NOTE:

Most NVIDIA functions execute asynchronously. This means the function that generated this error information may not be the function responsible for the error condition.

If the functions are from different NVIDIA libraries, the detailed information here is for a unrelated error potentially."
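For reference, a minimal C++ sketch of the check behind this error. `cudaRuntimeGetVersion()` and `cudaDriverGetVersion()` both report a version as one integer, 1000 × major + 10 × minor (10020 for CUDA 10.2), and `cudaDriverGetVersion()` reports 0 when no driver is installed. With the CUDA 10.x toolkits the runtime needed a driver reporting at least the same version; CUDA 11 and later relax this somewhat (minor version compatibility), so the simple `>=` rule below is an assumption for this era. The helper names are hypothetical, and the code is plain C++ so it builds without the CUDA headers.

```cpp
#include <cassert>
#include <string>

// Hypothetical helpers. CUDA encodes versions as 1000 * major + 10 * minor,
// e.g. 10020 for CUDA 10.2 and 9010 for CUDA 9.1.
struct CudaVersion {
    int majorVersion;
    int minorVersion;
};

CudaVersion decodeCudaVersion(int encoded) {
    assert(encoded >= 0);
    return {encoded / 1000, (encoded % 1000) / 10};
}

std::string formatCudaVersion(int encoded) {
    const CudaVersion v = decodeCudaVersion(encoded);
    return std::to_string(v.majorVersion) + "." + std::to_string(v.minorVersion);
}

// Assumed CUDA 10.x rule: the driver must support at least the runtime's
// version, otherwise runtime calls fail with cudaErrorInsufficientDriver (35).
// A driver version of 0 means no CUDA driver was found at all.
bool driverSupportsRuntime(int driverVersion, int runtimeVersion) {
    return driverVersion != 0 && driverVersion >= runtimeVersion;
}
```

In a real program the two integers would come from `cudaDriverGetVersion(&driver)` and `cudaRuntimeGetVersion(&runtime)` in `cuda_runtime_api.h`. A driver that only supports CUDA 9.1 (9010) paired with the 10.2 runtime (10020) fails this check, which is exactly the error 35 situation.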

04-25-2020 07:58 AM

IMHO, the LabVIEW GPU Analysis Toolkit was needed back when CUDA required the programmer to manage the compute contexts (see my post here:)

Now that modern NVIDIA CUDA has remedied that problem, you're best off writing some C++ code and building a .dll that you can call from LabVIEW. Then you can take advantage of the latest CUDA features (and use a modern display driver).
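A minimal sketch of the shape such a .dll entry point can take, assuming LabVIEW's Call Library Function Node configured with the C calling convention, the array passed as a data pointer, and its length passed as an int32. `ScaleArray` and `LV_EXPORT` are hypothetical names, and the loop is a CPU stand-in for where the CUDA allocation, copies, and kernel launch would go.

```cpp
#include <cassert>
#include <cstdint>

// Export macro: on Windows, __declspec(dllexport) puts the function in the
// .dll's export table; elsewhere it expands to plain extern "C" so the
// sketch still compiles.
#if defined(_WIN32)
#define LV_EXPORT extern "C" __declspec(dllexport)
#else
#define LV_EXPORT extern "C"
#endif

// Hypothetical exported function: scales an array in place.
// Plain C types (pointer + length) map directly onto a Call Library
// Function Node parameter list. Returns 0 on success, -1 on bad input.
LV_EXPORT std::int32_t ScaleArray(float* data, std::int32_t length, float factor) {
    if (data == nullptr || length < 0) {
        return -1;
    }
    // CPU stand-in: in the real DLL this is where cudaMalloc/cudaMemcpy,
    // the kernel launch, and the copy back to `data` would happen.
    for (std::int32_t i = 0; i < length; ++i) {
        data[i] *= factor;
    }
    return 0;
}
```

`extern "C"` keeps the exported name unmangled, so it appears as `ScaleArray` in the Call Library Function Node's function list. Returning a status code instead of throwing keeps C++ exceptions from crossing the DLL boundary into LabVIEW.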

Mark Harris's articles really helped me get this going:

https://devblogs.nvidia.com/author/mharris/

Especially these:

https://devblogs.nvidia.com/easy-introduction-cuda-c-and-c/

https://devblogs.nvidia.com/even-easier-introduction-cuda/
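The "even easier" article starts from a plain C++ program that adds two arrays on the CPU and then turns that function into a CUDA kernel. A minimal sketch of that starting point, modeled on the article's first example; `runAddAndGetMaxError` is an illustrative helper, not code from the article.

```cpp
#include <cassert>
#include <cmath>
#include <cstddef>
#include <vector>

// CPU version of add(): a single thread walks the whole array.
// In the CUDA version this becomes a __global__ kernel and the loop
// is spread across many GPU threads.
void add(int n, const float* x, float* y) {
    for (int i = 0; i < n; ++i) {
        y[i] = x[i] + y[i];
    }
}

// Illustrative driver: x = 1.0f and y = 2.0f everywhere, so every y[i]
// should end up as 3.0f. Returns the largest deviation from 3.0f.
float runAddAndGetMaxError(int n) {
    assert(n >= 0);
    std::vector<float> x(static_cast<std::size_t>(n), 1.0f);
    std::vector<float> y(static_cast<std::size_t>(n), 2.0f);
    add(n, x.data(), y.data());
    float maxError = 0.0f;
    for (float value : y) {
        maxError = std::fmax(maxError, std::fabs(value - 3.0f));
    }
    return maxError;
}
```

For example, `runAddAndGetMaxError(1 << 20)` runs on one million elements. In the article's GPU version the arrays come from `cudaMallocManaged`, and the loop becomes a grid-stride loop that starts at `blockIdx.x * blockDim.x + threadIdx.x` and steps by `blockDim.x * gridDim.x`.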

Of course, there are also options that spare you from writing CUDA code by hand: other GPU programming frameworks (OpenCL, for example) or libraries that implement the GPU calls for you.

04-27-2020 07:37 AM

Thanks for the links, I will check them out. Unfortunately, I don't speak C++ as the last time I did text-based programming was 30+ years ago in the days of Fortran, haha. But I have used LV many times to access properties and methods in a .dll, especially using the .NET calls. Is there a convenient way to access the CUDA functionality in this sort of approach? In the meantime, I will try the "even easier" tutorial and see if that is a path that would work for this old noggin...

04-27-2020 09:25 AM

Programming in CUDA has some similarities to FPGA programming: with "great flexibility" comes "great complexity". So at some point, you're probably going to be looking at some text code.

Depending on where you need the GPU power, there are some higher-level libraries, like Thrust, that save you from writing the CUDA code yourself.

https://developer.nvidia.com/thrust

Since you were posting about the FFT, look here:

https://developer.nvidia.com/cufft

If you're familiar with FFTW (a particularly popular FFT library), this will look pretty familiar.
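Since the original question was about the Multi-channel FFT example: cuFFT handles many equal-length channels in one call through batched plans (`cufftPlanMany`, analogous to FFTW's `fftw_plan_many_dft`). Before trusting GPU results, it helps to have a slow CPU reference to compare against. The sketch below is a naive O(N²) DFT, not an FFT, applied channel by channel with the unnormalized forward sign convention that FFTW and cuFFT use. The function names and the back-to-back channel layout are assumptions for illustration.

```cpp
#include <cassert>
#include <cmath>
#include <complex>
#include <cstddef>
#include <vector>

using cplx = std::complex<double>;

// Naive O(N^2) forward DFT of one channel, unnormalized:
// X[k] = sum_t x[t] * exp(-2*pi*i*k*t/N).
std::vector<cplx> dft(const std::vector<cplx>& x) {
    const std::size_t n = x.size();
    const double pi = std::acos(-1.0);
    std::vector<cplx> out(n);
    for (std::size_t k = 0; k < n; ++k) {
        cplx sum = 0.0;
        for (std::size_t t = 0; t < n; ++t) {
            // (k * t) % n keeps the angle small for better accuracy.
            const double angle = -2.0 * pi * static_cast<double>((k * t) % n) / static_cast<double>(n);
            sum += x[t] * std::polar(1.0, angle);
        }
        out[k] = sum;
    }
    return out;
}

// Multi-channel reference: channels stored back to back, each `length`
// samples long, comparable to the contiguous layout of a basic batched plan.
std::vector<cplx> batchedDft(const std::vector<cplx>& data, std::size_t length) {
    assert(length > 0 && data.size() % length == 0);
    std::vector<cplx> out;
    out.reserve(data.size());
    for (std::size_t start = 0; start < data.size(); start += length) {
        const std::vector<cplx> channel(data.begin() + static_cast<std::ptrdiff_t>(start),
                                        data.begin() + static_cast<std::ptrdiff_t>(start + length));
        const std::vector<cplx> spectrum = dft(channel);
        out.insert(out.end(), spectrum.begin(), spectrum.end());
    }
    return out;
}
```

For two 4-sample channels, an impulse `{1, 0, 0, 0}` transforms to all ones, and a constant `{1, 1, 1, 1}` transforms to `{4, 0, 0, 0}`. Those are handy sanity checks for a GPU result as well.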

A few more higher-level platforms:

https://developer.nvidia.com/language-solutions

In particular, this might have some directly callable .NET stuff. I haven't tried it, but it looks pretty cool:

Let us know if something works for you.

04-27-2020 11:35 AM

It looks like my problem is probably related to the CUDA install itself. When I try to run one of the CUDA Toolkit examples from Visual Studio (not LabVIEW), I get this error:

"CUDA error at C:\ProgramData\NVIDIA Corporation\CUDA Samples\v10.2\common\inc\helper_cuda.h:775 code=35(cudaErrorInsufficientDriver)"

The documentation on the NVIDIA website for error 35 gives the same error message I see in LabVIEW.

I have Googled "cudaErrorInsufficientDriver" but have so far not been able to find how to solve the issue. I have already installed the latest NVIDIA driver for my graphics card, so that does not seem to be the issue. I have also checked that my graphics card is compatible with CUDA 10.2.

Any ideas?

04-27-2020 03:36 PM

Build and run the "deviceQuery" example first. It's handy and will tell you what your hardware is reporting to the software you build.

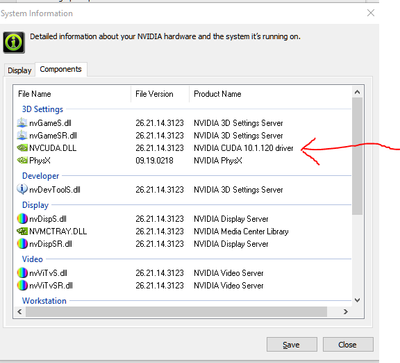

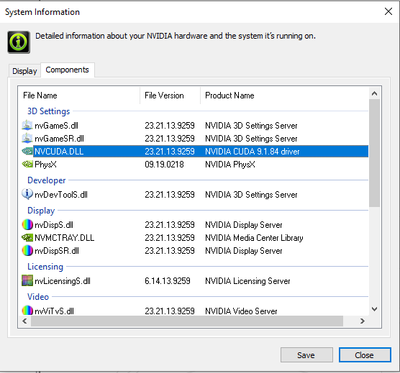

You can also get the CUDA version from the NVIDIA Control Panel app (Help -> System Information -> Components), then look at the NVCUDA.DLL version.

My guess is that the display driver may need to be updated, but I would have expected the CUDA Toolkit installer to do that already.

The other thing: if you're on a laptop, make sure that the NVIDIA GPU is actually enabled (to save power, it often isn't used).

You can also get some info (especially if you have multiple GPUs) from the NVIDIA System Management Interface (nvidia-smi) utility:

https://developer.nvidia.com/nvidia-system-management-interface

04-27-2020 04:27 PM

Hi. Thanks for the suggestions!

1) Here is the result of running the deviceQuery example:

"C:\ProgramData\NVIDIA Corporation\CUDA Samples\v10.2\1_Utilities\deviceQuery\../../bin/win64/Debug/deviceQuery.exe Starting...

CUDA Device Query (Runtime API) version (CUDART static linking)

cudaGetDeviceCount returned 35

-> CUDA driver version is insufficient for CUDA runtime version

Result = FAIL"

This is the same error I see in LabVIEW or running other examples.

2) Here is what I see in the NVIDIA Control Panel.

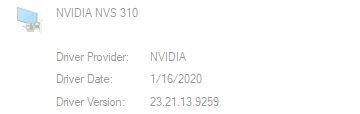

This looks like it is likely the problem, since I am trying to run CUDA 10.2, but the NVCUDA.DLL driver shows version 9.1.84. I don't understand, however, why there is a version mismatch or how to fix it. I have run the CUDA 10.2 install both with and without the display driver option selected. And I have separately updated to the latest NVIDIA driver for my graphics card, one from 1/16/2020.
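One detail to watch if you script this comparison: version strings such as "9.1.84" and "10.2" sort the wrong way as text ("9.1" comes after "10.2" lexicographically), so compare the numeric major and minor fields instead. A minimal sketch with hypothetical helper names, assuming the dotted format shown in the Control Panel:

```cpp
#include <cassert>
#include <sstream>
#include <string>
#include <vector>

// Hypothetical helper: split "9.1.84" into {9, 1, 84}.
std::vector<int> parseDottedVersion(const std::string& text) {
    std::vector<int> fields;
    std::istringstream stream(text);
    std::string part;
    while (std::getline(stream, part, '.')) {
        fields.push_back(std::stoi(part));
    }
    return fields;
}

// True if the driver's CUDA version (major.minor) is at least the toolkit's.
// Compares numbers, not text: as strings, "9.1" would sort after "10.2".
bool driverCoversToolkit(const std::string& driverCuda, const std::string& toolkitCuda) {
    const std::vector<int> d = parseDottedVersion(driverCuda);
    const std::vector<int> t = parseDottedVersion(toolkitCuda);
    assert(d.size() >= 2 && t.size() >= 2);
    if (d[0] != t[0]) {
        return d[0] > t[0];
    }
    return d[1] >= t[1];
}
```

With the numbers from this post, `driverCoversToolkit("9.1.84", "10.2")` is false, which matches the deviceQuery failure.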

I'm wondering if this means that the latest CUDA version my graphics card driver supports is CUDA 9.

3) It is not a laptop, and there are not multiple GPUs. It is also not a high-end graphics card, just the stock card in a Dell tower computer, though it is supposed to be compatible with CUDA.

04-28-2020 07:24 AM

Right. If it’s an older card, there may not be a driver for it that supports CUDA 10. You can install the CUDA 9 toolkit (if you can find it). For getting started, that is a fine version to begin with.

Otherwise you will want to install newer GPU hardware.

BTW, in the past the CUDA toolkit didn’t always integrate with the latest Visual Studio, so you may want to double-check that the CUDA toolkit you use supports your installed VS version (I think the free VS Community edition is fine to use).

04-28-2020 01:23 PM

I tried switching to CUDA 9.1 yesterday, and I got the deviceQuery and deviceQueryDrv examples to work after retargeting the Windows SDK. But with any of the other CUDA examples, I get this error:

"fatal error C1189: #error: -- unsupported Microsoft Visual Studio version! Only the versions 2012, 2013, 2015 and 2017 are supported!"

I am using VS2017, which is supposedly a supported version, but looking at the error source in VS, it looks like CUDA 9.1 only supports VS2017 up through V15.3, whereas I have V15.8. At this point, I think I'll buy a newer GPU, but I would definitely appreciate any other suggestions. I'm looking at the GEFORCE GTX 1060 which looks like the beefiest unit I can fit in my current PC without a power supply upgrade.
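The "unsupported Microsoft Visual Studio version!" error comes from a preprocessor check on the compiler's `_MSC_VER` macro. VS 2012 reports 1700, VS 2017 15.3 reports 1911, and VS 2017 15.8 reports 1915, which is why 15.8 fails even though it is still "VS 2017". The sketch below restates that range check as a `constexpr` function; the exact upper bound is an assumption based on the 15.3 limit reported in this post, not copied from the CUDA 9.1 header.

```cpp
#include <cassert>

// _MSC_VER values reported by the Visual Studio versions in this thread.
constexpr int kMscVerVs2012 = 1700;
constexpr int kMscVerVs2017v153 = 1911;
constexpr int kMscVerVs2017v158 = 1915;

// Hypothetical restatement of the CUDA 9.1 host compiler check: accept
// VS 2012 through VS 2017 15.3. The upper bound is assumed from the
// user's report, not taken from the toolkit's header.
constexpr bool cuda91AcceptsCompiler(int mscVer) {
    return mscVer >= kMscVerVs2012 && mscVer <= kMscVerVs2017v153;
}

static_assert(cuda91AcceptsCompiler(kMscVerVs2017v153), "VS 2017 15.3 is inside the window");
static_assert(!cuda91AcceptsCompiler(kMscVerVs2017v158), "VS 2017 15.8 is outside the window");
```

In a real source file the same test is written as `#if defined(_MSC_VER) && _MSC_VER > 1911` around an `#error`. The helper only makes it easy to see which compiler versions fall inside the window.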

One other thing: when I ran the LabVIEW example Get GPU Device Information, I got an error asking "Device count inquiry failed. Is remote desktop active?" Like millions of other people, I am stuck at home and I am using Remote Desktop. The documentation has this helpful tidbit: "Running this example from a remote connection can produce most of the error conditions below. Under Remote Desktop, most NVIDIA display drivers cannot detect GPU devices installed in the system. In most cases disconnecting a remote session will not allow the NVIDIA display driver to see GPU devices. The system must be logged into locally or restarted." But this doesn't explain why the deviceQuery example does work.

04-29-2020 08:29 AM

"I am using VS2017, which is supposedly a supported version, but looking at the error source in VS, it looks like CUDA 9.1 only supports VS2017 up through V15.3, whereas I have V15.8."

Ugh. How lame. Yeah, every time I dip my toe into text-based languages, I spend days just trying to get the stupid toolchain installed. LabVIEW never has that problem.

My cruddy solution to this was to create a dedicated virtual machine with a compatible VS and CUDA. After compilation, I would transfer the built stuff and run the .exe or .dll from my host computer. Still, buying a newer card is going to be the best option.

GEFORCE GTX 1060. Yeah that looks fine. The next generation NVIDIA cards (Ampere) should be announced (maybe on Friday?). Not sure if that would affect which card you should get now, though.

"Is remote desktop active?" Error:

I wouldn't worry about it. The "deviceQuery" is the one to pay attention to.

Remote Desktop is great because you don't need a physical screen attached to the machine. But because it doesn't use a physical screen, some GPU stuff doesn't work.

So the old Remote Desktop (Win7) did not let CUDA programs run, while the newer Remote Desktop (Win10) usually does. That said, if you use a different type of remote login (TeamViewer, VNC, etc.), that always works, because these require a physical monitor to be connected to the machine, and they simply mirror what comes out of the GPU.

Going one more layer down this "rabbit hole": If you have only a single GPU installed:

* If you are processing on the GPU that drives the display, Windows will terminate your GPU program if a GPU operation takes too long. A workaround is to raise the Windows display driver timeout (TdrDelay) to something larger, like 10 seconds instead of the default 2 seconds:

See http://stackoverflow.com/questions/17186638/modifying-registry-to-increase-gpu-timeout-windows-7

Running this command from an administrator command prompt should set the timeout to 10 seconds:

`reg.exe ADD "HKEY_LOCAL_MACHINE\SYSTEM\CurrentControlSet\Control\GraphicsDrivers" /v "TdrDelay" /t REG_DWORD /D "10" /f`

Or better yet, use a second GPU. The GPU you want to use only for computation should use the TCC driver (it must be a Titan, Tesla, or other GPU that supports TCC). This card should be initialized after the display GPU, so put the compute card in a higher-numbered slot than the display card. To select the TCC driver, run `nvidia-smi.exe -L` from an administrator cmd window to list the GPUs, then `nvidia-smi.exe -dm 1 -i 0` to set TCC on GPU 0. Then, with `set CUDA_VISIBLE_DEVICES=<index>`, you can pick the GPU your CUDA code should execute on.
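To make the `CUDA_VISIBLE_DEVICES` step concrete: per NVIDIA's documentation for integer entries, only the listed GPUs are visible, they are renumbered in list order, and an invalid index hides itself and every entry after it. A minimal sketch of that mapping; `visibleDevices` is a hypothetical helper, and UUID-style entries (`GPU-...`) are not handled.

```cpp
#include <cassert>
#include <cstddef>
#include <sstream>
#include <stdexcept>
#include <string>
#include <vector>

// Hypothetical helper: map a CUDA_VISIBLE_DEVICES value onto the physical
// GPU indices a CUDA program would see, in the order it would see them.
// Position i in the result is CUDA device i inside the program.
std::vector<int> visibleDevices(const std::string& value, int physicalCount) {
    std::vector<int> visible;
    std::istringstream stream(value);
    std::string entry;
    while (std::getline(stream, entry, ',')) {
        std::size_t used = 0;
        int index = -1;
        try {
            index = std::stoi(entry, &used);
        } catch (const std::logic_error&) {
            break;  // not a number: this and all later entries are ignored
        }
        if (used != entry.size() || index < 0 || index >= physicalCount) {
            break;  // invalid index: only the entries before it are visible
        }
        visible.push_back(index);
    }
    return visible;
}
```

For example, with two GPUs and `set CUDA_VISIBLE_DEVICES=1`, the program sees a single device, physical GPU 1, as device 0 (so `cudaSetDevice(0)` selects it). When the variable is unset, `std::getenv("CUDA_VISIBLE_DEVICES")` returns a null pointer and all GPUs are visible.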

I'm not sure what would happen if you left your current card in and added the 1060 just for CUDA processing. My guess is that you cannot have two NVIDIA display driver versions installed, so it might default to the old one.