Problems performing Real FFT on GPU

03-04-2014 04:55 PM

Hi,

I was hoping someone would be able to help me out with a problem I've been having.

I'm new to GPU computing, and the LabVIEW toolkit is my only experience. I wrote a program to check that I can successfully perform a real FFT and inverse FFT of an image on the GPU. Basically this is a round-trip test: if I put an image in and transform it forward and back, I should get the same image out again. I will eventually be using this as part of an image smoothing algorithm.
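For reference, the round-trip check described above can be sketched on the CPU in plain Python. This is only an illustration of the test, not the toolkit's implementation: it uses a naive DFT on each row, and the names `dft`, `round_trip_error` and the tiny sample image are all made up for the example.

```python
import cmath

def dft(signal, inverse=False):
    """Naive O(n^2) discrete Fourier transform of a 1D sequence."""
    n = len(signal)
    sign = 1 if inverse else -1
    out = []
    for k in range(n):
        acc = 0j
        for t, x in enumerate(signal):
            acc += x * cmath.exp(sign * 2j * cmath.pi * k * t / n)
        # Only the inverse transform is scaled by 1/n in this sketch.
        out.append(acc / n if inverse else acc)
    return out

def round_trip_error(image):
    """Forward then inverse transform each row; return the largest deviation."""
    worst = 0.0
    for row in image:
        restored = dft(dft(row), inverse=True)
        for original, value in zip(row, restored):
            worst = max(worst, abs(value - original))
    return worst

# Hypothetical 3x4 test image (rows of pixel intensities).
image = [[0.0, 1.0, 2.0, 3.0], [4.0, 3.0, 2.0, 1.0], [1.0, 0.0, 1.0, 0.0]]
error = round_trip_error(image)
print("largest round-trip deviation:", error)
```

Scaling conventions differ between FFT libraries, so a round trip through a library that does not normalize may return the input multiplied by the transform length rather than the input itself.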

Initially I wrote this on a GeForce 9800 GT, and it works fine. However, when I ran the exact same program on a Tesla C2075, it didn't work as expected. Instead of the image, I got an all-black output with a row of regularly spaced white squares at the bottom. After one run through, if I try to run the program again, the operation seems to do nothing and the output is all zero (the intensity graph shows all black with amplitude 0 to 1). It might be worth saying that this was on a completely separate machine from the previous one.

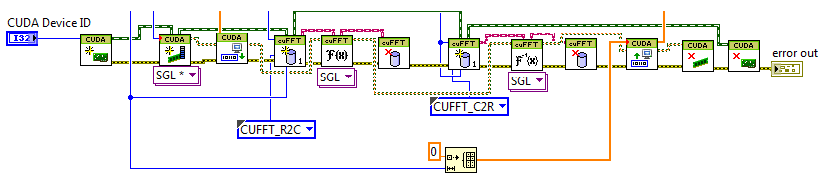

A section of my original code is shown in the image above. Any ideas on why it might not be working on the Tesla would be helpful, as this is a much more powerful GPU and I would prefer to be using it. I tried performing the operation out-of-place, but this seemed to give the same result.

So any advice?

Thanks.

03-06-2014 03:24 PM

Unfortunately, you've cut off some of the code so it's not possible to render much help. First, if you could attach the VI w/ the code, this allows inspection of code features that can't be verified visually (e.g. your 0 constant does not appear to be SGL, as the array downstream has a conversion dot on the Upload Data VI).

So many things can differ in your system setup (e.g. which version of CUDA is in use, and did it change between the 9800 and the Tesla). In my experience, I've found many functions that ran correctly on older GPU hardware but had bugs in them (e.g. buffer over-runs) that are now caught on cards like the Tesla.

From your snapshot above, I'd want to know:

- Has the device ID changed so that you are targeting the Tesla rather than a low-end video card?

- You wire the size (apparently) to both the size inputs and batch inputs of the Initialize Library VI calls. This means the GPU is doing an NxN operation, not just size N. However, the buffer you allocate to upload the results is of size N (via the lower Initialize Array function).

- The upload buffer wired into the Upload Data VI is of the wrong element type. That's why there's a conversion dot on it. This won't affect the upload itself, as LV will automatically convert the buffer to the right type. However, it does mean the buffer holding your input signal data might be wrong. In this case, data conversion could pass completely wrong data to the FFT.
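To make the size mismatch in the second point concrete, here is a rough element count for a batched 1D real FFT. It assumes the common real-to-complex layout (as used by cuFFT) in which each length-N real signal produces N/2 + 1 complex outputs; whether the toolkit exposes exactly this layout is an assumption. The function name and the value N = 512 are hypothetical, since the image size isn't given in the post.

```python
def real_fft_buffer_sizes(size, batch):
    """Element counts for a batched 1D real-to-complex FFT.

    Assumes each real signal of length `size` yields size // 2 + 1
    complex outputs (the non-redundant half of the spectrum).
    """
    real_elements = size * batch
    complex_elements = (size // 2 + 1) * batch
    return real_elements, complex_elements

n = 512  # hypothetical image width/height, not taken from the post
needed_real, needed_complex = real_fft_buffer_sizes(size=n, batch=n)
allocated = n  # a buffer of size N, as in the snapshot
shortfall = needed_real - allocated

print("real elements needed:   ", needed_real)
print("complex elements needed:", needed_complex)
print("elements missing from a size-N buffer:", shortfall)
```

With size and batch both wired to N, a buffer of only N elements covers a single row, so anything past the first row reads or writes outside the allocation.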

As I've recommended to others who've posted on this forum, a good first step is to back away from the specialized FFT (Real FFT in this case) and just do the normal complex FFT. Results aren't as pretty and they aren't as fast but they will tell you if your program is sound.
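One way to use the complex FFT as a reference, sketched in plain Python: for real input, the full complex spectrum is conjugate-symmetric, so a real FFT only needs to return the first N/2 + 1 bins, and those bins can be compared directly against the complex result. The naive DFT and the names `complex_dft` and `hermitian_mismatch` are illustrative, not part of the toolkit.

```python
import cmath

def complex_dft(signal):
    """Naive full complex DFT, used here only as a reference."""
    n = len(signal)
    return [
        sum(x * cmath.exp(-2j * cmath.pi * k * t / n) for t, x in enumerate(signal))
        for k in range(n)
    ]

def hermitian_mismatch(spectrum):
    """Largest deviation from X[k] == conj(X[n - k]) over all bins."""
    n = len(spectrum)
    return max(
        abs(spectrum[k] - spectrum[(n - k) % n].conjugate()) for k in range(n)
    )

signal = [0.0, 1.0, 4.0, 9.0, 16.0, 25.0, 36.0, 49.0]  # made-up real input
spectrum = complex_dft(signal)

# The non-redundant half a real FFT would be expected to return.
half = spectrum[: len(signal) // 2 + 1]
mismatch = hermitian_mismatch(spectrum)

print("bins in half spectrum:", len(half))
print("symmetry mismatch:", mismatch)
```

If the complex path round-trips correctly but the real path does not, the problem is more likely in buffer sizes or element types than in the overall program structure.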

Also, the toolkit ships w/ a CSG version up and running. If you work from it and gradually add what you need, you might find the culprit. Sometimes the iterative process avoids the mistakes that come from coding everything at once.

Good luck!