Decimation for XY Graph - Timestamp

04-08-2018 10:26 PM

Hi everybody. I would like to ask for your help in figuring out a good way to decimate data with uneven timestamps.

I have a 2D array where each row is a data pair (timestamp and measurement), and I would like to decimate the data. I have done this in the past with a graph and Max-Min decimation, but I don't have a clear way to take the time factor into account for the decimation.

I would appreciate the help. Thanks in advance.

04-09-2018 04:29 AM

You'll need to loop over the array. In a for loop, use a shift register and compare the timestamps with the previous value(s). Then you need to calculate which values are in the same "bin".
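The loop described above can be sketched in text form. This is only a minimal Python illustration, not LabVIEW code: it assumes the rows are sorted by time, that timestamps are plain seconds, and that min/max per bin is the wanted reduction. The name `minmax_decimate` and the `bin_width` parameter are hypothetical. The variables `current_bin`, `lo` and `hi` play the role of the shift registers.

```python
def minmax_decimate(samples, bin_width):
    """Keep the min and max measurement of every time bin.

    samples: list of (timestamp_seconds, value) pairs, sorted by timestamp.
    bin_width: bin size in seconds (hypothetical parameter).
    """
    out = []
    current_bin = None  # bin index of the previous sample (shift register)
    lo = hi = None      # (t, v) pairs holding the min and max of the current bin
    for t, v in samples:
        b = int(t // bin_width)
        if b != current_bin:
            # The timestamp fell into a new bin: flush the previous one.
            if current_bin is not None:
                out.extend(sorted({lo, hi}))  # set drops a duplicate, sort restores time order
            current_bin, lo, hi = b, (t, v), (t, v)
        else:
            if v < lo[1]:
                lo = (t, v)
            if v > hi[1]:
                hi = (t, v)
    if current_bin is not None:
        out.extend(sorted({lo, hi}))  # flush the last bin
    return out


data = [(0, 1.0), (10, 5.0), (20, 2.0), (100, 3.0), (110, 0.5), (7300, 9.0)]
reduced = minmax_decimate(data, 100)
```

Empty bins, such as the long pause before the last sample here, simply produce no output points.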

When you say XY data, is this really XY data? E.g. can the values go back in X direction? That would complicate things a lot. I've never done any decimation on real XY data. If the X values are sequential it's another story though.

04-09-2018 06:42 AM - edited 04-09-2018 06:47 AM

You didn't provide an example, nor did you tell us how you want the decimation to happen.

Simple decimation by n just takes every n-th value (pair).

How about using the interpolation vi? (or Fitting?)

For each new point you want (usually on the x scale and usually evenly spaced), grab all points nearby (in the same bin) and fit a new point.
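A rough Python sketch of that binning idea, using the plain mean of each bin as the simplest possible "fit". The mean is an assumption made here for illustration, and a real fit could be anything from a median to a local polynomial. `bin_average` and `dt` are hypothetical names, and timestamps are assumed to be plain seconds.

```python
def bin_average(samples, dt):
    """Return one (bin_center, mean_value) point per non-empty bin of width dt."""
    sums = {}  # bin index -> (running total, sample count)
    for t, v in samples:
        b = int(t // dt)
        total, count = sums.get(b, (0.0, 0))
        sums[b] = (total + v, count + 1)
    # Place each new point at the center of its bin, in time order.
    return [((b + 0.5) * dt, total / count)
            for b, (total, count) in sorted(sums.items())]


points = bin_average([(0, 1.0), (10, 3.0), (25, 5.0), (130, 4.0)], 100)
```

Bins without any samples are skipped rather than filled, so large pauses in the data do not create invented points.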

How unevenly are they sampled?

Hard to give a general answer...

Henrik

LV since v3.1

“ground” is a convenient fantasy

'˙˙˙˙uıɐƃɐ lɐıp puɐ °06 ǝuoɥd ɹnoʎ uɹnʇ ǝsɐǝld 'ʎɹɐuıƃɐɯı sı pǝlɐıp ǝʌɐɥ noʎ ɹǝqɯnu ǝɥʇ'

04-10-2018 12:40 AM

Hi Wiebe, Henrik. First, thanks for the answers. I am providing an example set of the data to decimate.

The time delta is irregular and not precise, but it normally follows a pattern (for example 10, 10, 20 seconds, then repeat). However, the timestamps can also jump by a couple of hours between intervals. I don't have a specific decimation method in mind, but I would like to show only blocks of nearby points and avoid mixing in points from after a time jump, so that the data stays organized and its order is easy to follow.
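One way to get such blocks of nearby points is to split the rows wherever the gap between consecutive timestamps exceeds a threshold, then decimate each block separately. A minimal Python sketch of that idea, assuming sorted timestamps in seconds; `split_at_gaps` and `max_gap` are hypothetical names, and the threshold would have to be chosen from the actual data.

```python
def split_at_gaps(samples, max_gap):
    """Split sorted (timestamp, value) pairs into blocks separated by large time jumps."""
    blocks = []
    for t, v in samples:
        # Compare with the timestamp of the last sample in the last block.
        if blocks and t - blocks[-1][-1][0] <= max_gap:
            blocks[-1].append((t, v))
        else:
            blocks.append([(t, v)])  # first sample, or a jump: start a new block
    return blocks


data = [(0, 1), (10, 2), (20, 3), (40, 4), (7240, 5), (7250, 6)]
blocks = split_at_gaps(data, 60)
```

With the 10, 10, 20 second pattern, any threshold comfortably above 20 seconds and below the shortest jump would separate the blocks.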

04-10-2018 02:58 AM

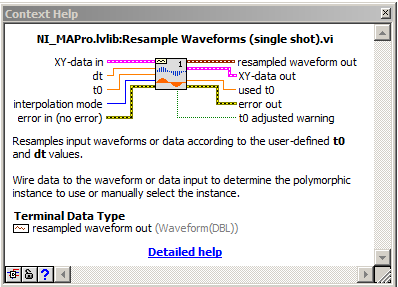

You may want to try the Resample Waveforms (single shot).vi

That VI is polymorphic and accepts XY data as input (just not Timestamp values so keep the X-array as DBL format).

The VI returns resampled data at constant dt intervals both as XY and waveform data.

Note that the FIR resampling mode does not apply for XY data (it defaults to Linear interpolation). Try for example Linear and/or Spline interpolation methods with dt input values between 100 and 1000 sec.
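For readers without the VI at hand, the linear mode can be approximated in a few lines of Python. This is not the VI's implementation, only a sketch of resampling XY data onto a constant `dt` grid by linear interpolation; `resample_linear` is a hypothetical name, and it assumes at least two samples with strictly increasing X values stored as plain numbers.

```python
def resample_linear(ts, vs, dt):
    """Linearly interpolate (ts, vs) onto a grid starting at ts[0] with spacing dt."""
    out_t, out_v = [], []
    i = 0  # index of the left neighbor of the current grid point
    n = int((ts[-1] - ts[0]) // dt) + 1
    for k in range(n):
        t = ts[0] + k * dt
        # Advance until ts[i] <= t <= ts[i + 1].
        while i < len(ts) - 2 and ts[i + 1] < t:
            i += 1
        frac = (t - ts[i]) / (ts[i + 1] - ts[i])
        out_t.append(t)
        out_v.append(vs[i] + frac * (vs[i + 1] - vs[i]))
    return out_t, out_v


grid_t, grid_v = resample_linear([0, 10, 30], [0.0, 10.0, 30.0], 5)
```

Linear interpolation draws a straight line across any long pause in the data, so grid points inside a gap are interpolated rather than measured.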

Also note that, due to the nature of your data (with discontinuities), the spline method will insert samples that undershoot significantly, but if you ignore these you may still get relevant data.

04-10-2018 04:45 AM

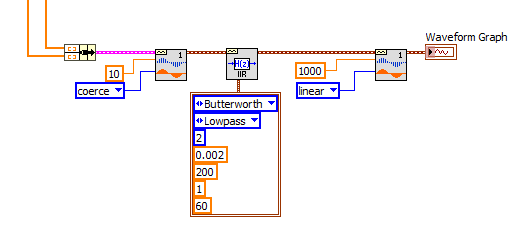

Actually in your specific case you can create a more advanced algorithm to solve your problem. Here is an example where I

1 - resampled your XY data using simple coercion to give me an evenly spaced waveform with a high sample rate (dt = 10)

2 - applied a smooth lowpass filtering to your signal

3 - decimated more than 100 times (dt = 1000)

I could have used FIR filter based resampling instead of independent lowpass filtering and resampling, but that would give more overshoot and ringing around your discontinuity points.
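The three steps can be sketched in Python as follows. This is a loose illustration and not the block diagram from the screenshot: "simple coercion" is interpreted here as holding the most recent sample on each grid point, and a centered moving average stands in for the smooth lowpass filter. Both are assumptions, and all function names are hypothetical.

```python
def hold_resample(samples, dt):
    """Step 1: put sorted (t, v) pairs on an even grid by holding the latest sample."""
    t0 = samples[0][0]
    n = int((samples[-1][0] - t0) // dt) + 1
    grid, i = [], 0
    for k in range(n):
        t = t0 + k * dt
        while i + 1 < len(samples) and samples[i + 1][0] <= t:
            i += 1  # move to the latest sample at or before t
        grid.append(samples[i][1])
    return grid


def moving_average(values, width):
    """Step 2: centered moving average; the window shrinks at the edges."""
    half = width // 2
    out = []
    for k in range(len(values)):
        window = values[max(0, k - half):k + half + 1]
        out.append(sum(window) / len(window))
    return out


def decimate(values, factor):
    """Step 3: keep every factor-th sample of the filtered signal."""
    return values[::factor]


fine = hold_resample([(0, 1.0), (25, 2.0), (40, 3.0)], 10)
coarse = decimate(moving_average(fine, 3), 2)
```

Going from dt = 10 to dt = 1000 as in the post corresponds to `factor = 100`, with the filter window chosen wide enough to suppress content faster than the new sample rate can represent.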

Just some ideas...