
Interpolate Pixel

04-22-2024 04:51 AM - edited 04-22-2024 05:00 AM


@Andrey_Dmitriev wrote:

Delete From Array is slow, as it always copies the data.

Use Array Subset instead; it always returns 2 subarrays.

I'd convert to U8 before building an array of 8-byte doubles; that is what the C code does.
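To illustrate the U8 idea in C: a minimal sketch, assuming each pixel is packed as 0x00RRGGBB in a 32-bit word (the usual layout for LabVIEW RGB pixel data). The helper names `unpack_rgb` and `pack_rgb` are hypothetical, not part of any LabVIEW API.

```c
#include <stdint.h>

/* Split a packed 0x00RRGGBB pixel (layout assumed) into three 8-bit channels.
   The casts keep only the low byte of each shifted value. */
static inline void unpack_rgb(uint32_t px, uint8_t *r, uint8_t *g, uint8_t *b)
{
    *r = (uint8_t)(px >> 16);
    *g = (uint8_t)(px >> 8);
    *b = (uint8_t)px;
}

/* Reassemble three 8-bit channels into a packed 0x00RRGGBB pixel. */
static inline uint32_t pack_rgb(uint8_t r, uint8_t g, uint8_t b)
{
    return ((uint32_t)r << 16) | ((uint32_t)g << 8) | (uint32_t)b;
}
```

Working on 1-byte channels keeps the data 8 times smaller than an array of doubles, which matters once the whole image no longer fits in cache.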

04-22-2024 07:05 AM - edited 04-22-2024 07:17 AM


wiebe@CARYA wrote:

Delete From Array is slow, as it always copies the data.

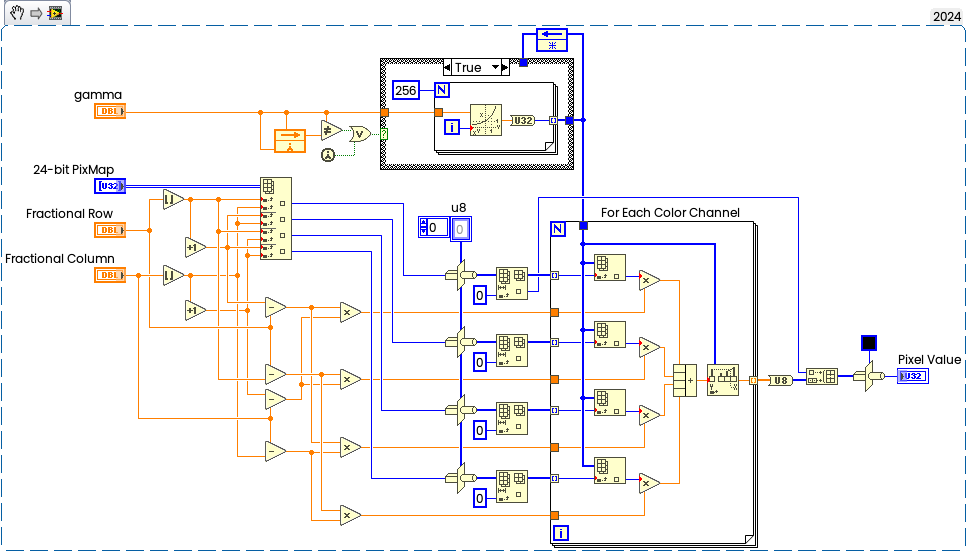

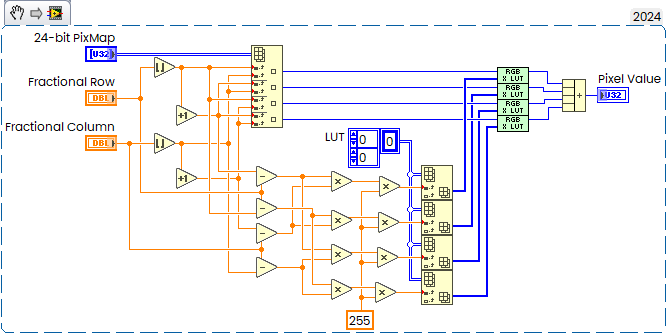

Yes, I agree; the code was already changed by Christian. This is the fastest version (for the moment):

On the C side I made two modifications. The first is to compute the "repeatable" parts of the code only once (this gains only a little).

The C code corresponding to the VI above is:

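```c
#include <math.h>    // floor, rint
#include <stdint.h>  // uint32_t

// iTDHdl, UINT, IP2D_API, getpXY(), LUT and LUT_RGB() come from the
// project's own headers (not shown in this post).
IP2D_API UINT fnIP2D(iTDHdl arr, double fCol, double fRow)
{
    uint32_t* pix = (*arr)->elt;     // address of the pixels
    int cols = (*arr)->dimSizes[1];  // number of columns (image width)

    // Relative weights of the four neighbours
    double ffRow = floor(fRow);
    double ffCol = floor(fCol);
    double ffRowDiff = fRow - ffRow;
    double ffColDiff = fCol - ffCol;

    // Weights quantized to 0..255, then shifted left by 8 to form LUT offsets
    UINT i0 = (UINT)rint((1 - ffRowDiff) * (1 - ffColDiff) * 255.0) << 8;
    UINT i1 = (UINT)rint((ffRowDiff) * (1 - ffColDiff) * 255.0) << 8;
    UINT i2 = (UINT)rint((1 - ffRowDiff) * (ffColDiff) * 255.0) << 8;
    UINT i3 = (UINT)rint((ffRowDiff) * (ffColDiff) * 255.0) << 8;

    // The four neighbouring pixels. Rows iRow+1 and columns iCol+1 are read,
    // so unless getpXY clamps, (fRow, fCol) must stay below the last row/column.
    int iRow = (int)ffRow;  // ffRow is already integral after floor()
    int iCol = (int)ffCol;
    UINT p0 = getpXY(pix, cols, iRow, iCol);
    UINT p1 = getpXY(pix, cols, iRow + 1, iCol);
    UINT p2 = getpXY(pix, cols, iRow, iCol + 1);
    UINT p3 = getpXY(pix, cols, iRow + 1, iCol + 1);

    // Weight each pixel through the lookup table, then sum the contributions
    p0 = LUT_RGB(p0, &(LUT[i0]));
    p1 = LUT_RGB(p1, &(LUT[i1]));
    p2 = LUT_RGB(p2, &(LUT[i2]));
    p3 = LUT_RGB(p3, &(LUT[i3]));

    return (p0 + p1 + p2 + p3);
}
```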

The second change is much more important. I noticed that with large arrays the C code was only twice as fast as LabVIEW. This was because I got massive cache misses by iterating over the rows in the inner loop and the columns in the outer loop. It should be the other way round, so I swapped just two lines:

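```c
// arrDst is assumed to be preallocated (Nrows x Ncols) on the LabVIEW side.
IP2D_API void fnIP2D2(iTDHdl arrSrc, iTDHdl arrDst, fTDHdl fCol, fTDHdl fRow)
{
    int Ncols = (*fCol)->dimSizes[0];  // output width  = number of column coordinates
    int Nrows = (*fRow)->dimSizes[0];  // output height = number of row coordinates
    double* fColPtr = (*fCol)->elt;
    double* fRowPtr = (*fRow)->elt;
    unsigned int* DstPtr = (*arrDst)->elt;

    #pragma omp parallel for // num_threads(16)
    for (int y = 0; y < Nrows; y++) {      // now swapped: rows in the outer loop
        for (int x = 0; x < Ncols; x++) {  // columns inner: contiguous writes
            DstPtr[x + y * Ncols] = fnIP2D(arrSrc, fColPtr[x], fRowPtr[y]);
        }
    }
}
```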
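Why swapping the loops matters: LabVIEW stores 2D arrays in row-major order, so element (y, x) sits at `x + y * Ncols`. The two hypothetical functions below produce identical results; only the order of memory writes differs (stride 1 versus stride `Ncols`), which is what drives the cache misses described above. `demo_value` is a placeholder for the per-pixel computation.

```c
#include <stddef.h>
#include <stdint.h>

/* Placeholder per-pixel computation (hypothetical). */
static uint32_t demo_value(int y, int x) { return (uint32_t)(y * 1000 + x); }

/* Cache-friendly: inner loop walks x, so consecutive writes are adjacent. */
static void fill_rows_outer(uint32_t *dst, int Nrows, int Ncols,
                            uint32_t (*f)(int y, int x))
{
    for (int y = 0; y < Nrows; y++)
        for (int x = 0; x < Ncols; x++)
            dst[x + (size_t)y * Ncols] = f(y, x);
}

/* Cache-unfriendly: inner loop walks y, so each write jumps Ncols elements. */
static void fill_cols_outer(uint32_t *dst, int Nrows, int Ncols,
                            uint32_t (*f)(int y, int x))
{
    for (int x = 0; x < Ncols; x++)
        for (int y = 0; y < Nrows; y++)
            dst[x + (size_t)y * Ncols] = f(y, x);
}
```

With rows in the outer loop, an OpenMP `parallel for` also hands each thread whole rows, so threads write to separate contiguous regions.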

I also noticed an issue with configuring iteration parallelism. I was sure that the P terminal would override the thread settings defined in the For Loop properties, but it seems it does not when fewer threads are configured there than are wired to the "P" terminal.

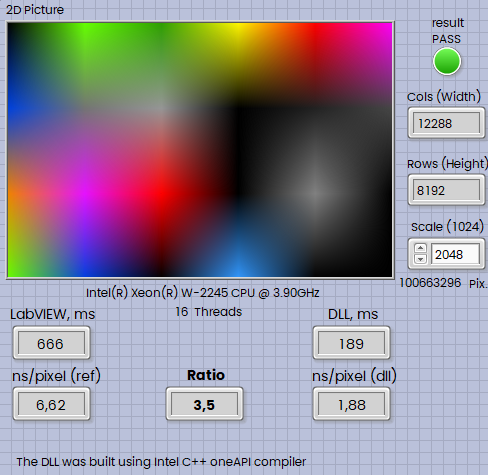

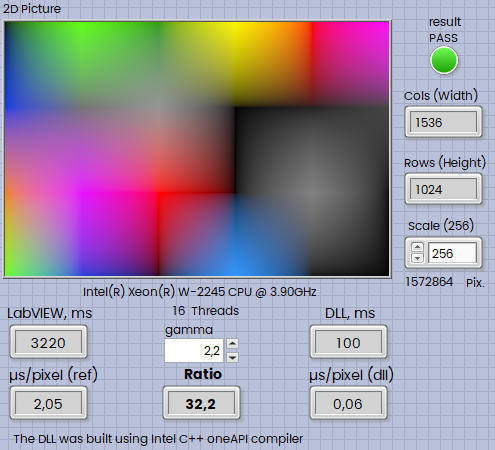

Anyway the DLL still three times faster. This is on W-2245 CPU:

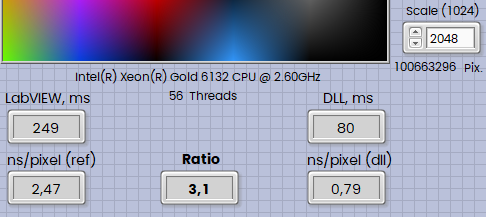

And this is on Xeon Gold

The screenshots above from LabVIEW2024Q1x64, on the LabVIEW 2018 32-bit the DLL is around twice faster.

The actual code snapshot in attachment.

04-22-2024 08:04 AM


@altenbach wrote:

Wow, you put a lot of work into this and I'll have a more detailed look later.

Here are a few initial comments:

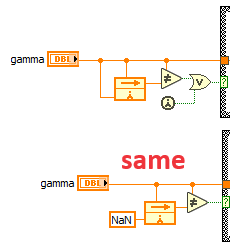

If you initialized the feedback node (FN) with NaN, you could eliminate the "First Call?" primitive and the OR (since gamma is never zero, we might even just leave the initializer disconnected).

With gamma = 1, your code is off by up to 2 compared to the original (and my non-gamma) code. I think you need to tweak it to match.

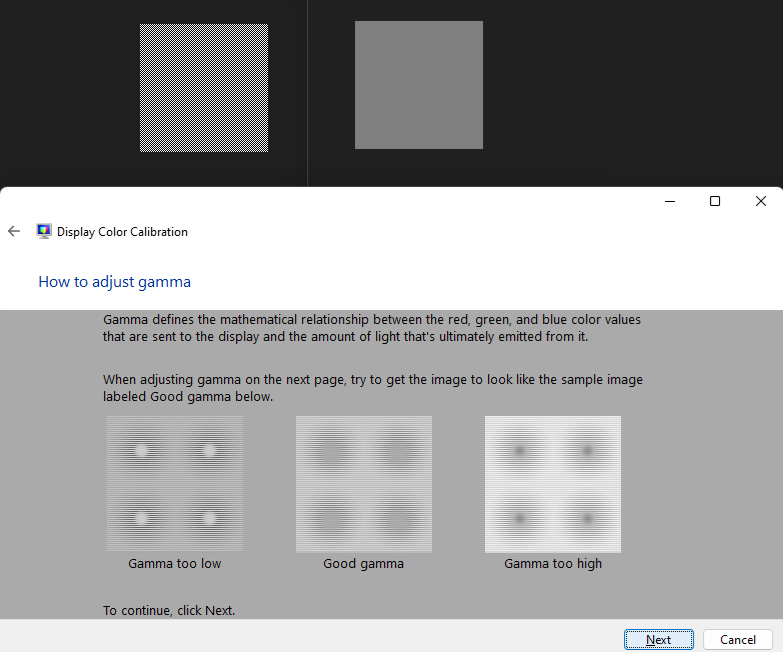

I still don't understand the song and dance with the gamma. Without it, my code is 10x faster than your DLL. I thought speed was most important. If gamma is close to 1, the results look the same. And if gamma is significantly different, the result looks very ugly.

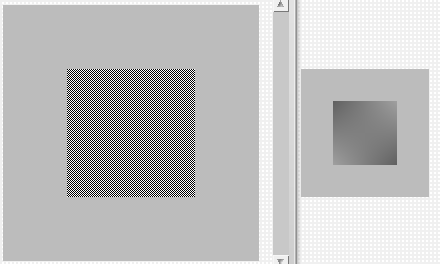

Perhaps pictures will help.

"Blk and Wht.png" has a checkerboard pattern of pixels. Each pixel has a level of either black (0), or white (255).

In "Gray 128.png" every pixel is gray, with a level of 128.

Look at them both from a distance. You'll notice that "Blk and Wht.png" looks darker than "Gray 128.png".

Interpolating pixels without accounting for gamma, results in an image that is too dark.

04-22-2024 09:56 AM


@paul_a_cardinale wrote:

"Blk and Wht.png" has a checkerboard pattern of pixels. Each pixel has a level of either black (0), or white (255).

In "Gray 128.png" every pixel is gray, with a level of 128.

Look at them both from a distance. You'll notice that "Blk and Wht.png" looks darker than "Gray 128.png".

Interpolating pixels without accounting for gamma, results in an image that is too dark.

Strange, on my medical monitor it's exactly the opposite, but that depends on the settings.

Anyway, since gamma is important, I've made the changes and optimizations in the DLL based on what we learned in this thread today.

On LV2024 x64 (as well as x86) the DLL is 30 times faster:

Source code is in the attachment, as usual.

04-22-2024 10:21 AM - edited 04-22-2024 10:26 AM


Thanks for the gamma illustration

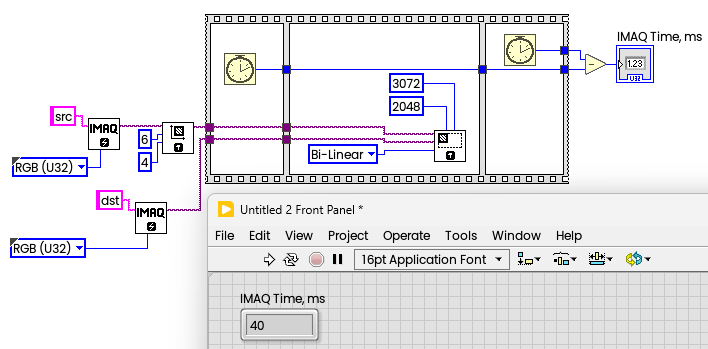

Andrey mentioned the IMAQ tool to do interpolation. Does that even have an option to account for gamma?

In a typical image, interpolation is between relatively similar values and the effect would be almost impossible to tell by eye and throwing 10x more computing power at it seems relatively pointless. Must we assume that the original pixels are already gamma corrected?

Of course I have no idea what your purpose for this tool is. Bilinear interpolation gives you an exact interpolated RGB value, and I am not sure why perception and gamma even matter.

And yes, Windows 11 has an option to adjust gamma of the monitor itself. Aren't there too many cooks?

(I remember adjusting gamma on the analog RGB monitor of the Silicon Graphics workstation back in the day 😄 I think 1.6 to 1.7 was about right to account for the nonlinear response of the electron gun. 😄 )

04-22-2024 10:24 AM


@Andrey_Dmitriev wrote:

Source code is in the attachment, as usual.

That picture looks horrible with these sharp edges, but I guess you are trying to demonstrate the effect of way too much gamma. 😄 !

04-22-2024 11:13 AM


@altenbach wrote:

That picture looks horrible with these sharp edges, but I guess you are trying to demonstrate the effect of way too much gamma. 😄 !

Fully agree, but here we have two separate and independent tasks. One is "how to apply gamma on resample" and the other is "how to increase the performance of the computation". I am addressing only the second task. The "correctness" of the computation from an algorithmic point of view was out of scope.

Paul wrote above "Here's where I currently stand:", so I simply took this piece of code "as is", put it into a DLL, and demonstrated somewhat better performance; that is all. The gamma of 2.2 just helps me see that the gamma was taken into account. And the result from the DLL code exactly matches the result of the LabVIEW code, nothing more.

And no, the IMAQ Resample does not have any "gamma" input. There are different resampling methods available, but no gamma at all.

04-22-2024 11:18 AM


@altenbach wrote:

Thanks for the gamma illustration

In a typical image, interpolation is between relatively similar values and the effect would be almost impossible to tell by eye and throwing 10x more computing power at it seems relatively pointless.

That's a valid point. So far I haven't investigated how visible the effect is.

Must we assume that the original pixels are already gamma corrected?

Not "corrected", but applied. Virtually every imaging system will have a non-linear transfer function (and my camera certainly does).

Of course I have no idea what your purpose for this tool is.

Mostly I'll use it in a tool that applies a certain type of distortion. Each pixel in the output maps to non-integer pixel coordinates in the original. Currently it just picks the nearest neighbor; in most images that works very well, but sometimes odd aliasing is visible. I'm sure that ignoring gamma and merely interpolating RGB values would work well with most images, but I wonder whether artifacts would be visible where there are steep gradients.

Bilinear interpolation gives you an exact interpolated RGB value, and I am not sure why perception and gamma even matter.

I want to interpolate the light levels. RGB values are non-linear encodings of light levels.

And yes, Windows 11 has an option to adjust gamma of the monitor itself. Aren't there too many cooks?

I'm not trying to cook an additional gamma adjustment; I'm trying to prevent the already-applied-gamma from introducing error.

04-22-2024 02:43 PM - edited 04-22-2024 02:46 PM


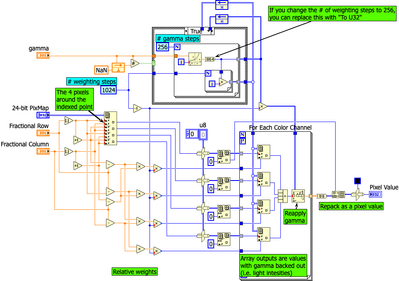

More lookup, less multiply:

04-22-2024 02:50 PM


@paul_a_cardinale wrote:

So far I haven't investigated how visible the effect is.

Attached is a simple VI in which I added a checkerboard, two small X-ray images, and a gradient.

After reading the article "Gamma error in picture scaling", I think I understand what you mean.

Yes, after scaling down and up, the checkerboard pattern in the middle gets darker.

I think this effect is almost "invisible" on real images, but it exists. I have seen it (very rarely), but in radiography it is strictly required to view images at 1:1 (or with integer zoom), so we don't have this problem at all.

The artifacts on gradients after scaling up are usually caused by banding. You can't easily compensate for it with gamma correction (it's better to have a 10-bit monitor and proper software instead). However, there is a lot of information and discussion about gamma-correct image resizing available on the internet.

The Vision Development Module is required for the VI in the attachment.