Issue with wait function in a while Loop (doesn't remain constant)

03-15-2016 08:48 AM

Now the file is almost 300 KB, and each iteration takes 10 seconds.

03-15-2016 08:55 AM

That's not a big enough file to worry me.

But I'm still suspicious of the xlsx option on that Express VI.

Try putting in some benchmarking code. Put Tick Count functions before and after that Express VI, and before and after other significant sections of your code. That should narrow down which part is causing the problem.
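LabVIEW code is graphical, so as a hedged, text-language illustration of the same benchmarking idea, here is a minimal Python sketch. It reads a millisecond tick counter before and after each section of a loop iteration and keeps the difference per section. `tick_count_ms` only loosely mirrors LabVIEW's Tick Count; `benchmark_sections` and the sleep-based step functions are hypothetical stand-ins, not anything from the poster's VI.

```python
import time
from typing import Callable, Dict, List


def tick_count_ms() -> int:
    """Millisecond counter from a monotonic clock (a loose analog of a Tick Count function)."""
    return time.monotonic_ns() // 1_000_000


def benchmark_sections(
    sections: Dict[str, Callable[[], None]], iterations: int
) -> Dict[str, List[int]]:
    """Run each named section once per iteration, in order, and record its elapsed ms."""
    timings: Dict[str, List[int]] = {name: [] for name in sections}
    for _ in range(iterations):
        for name, step in sections.items():
            start = tick_count_ms()
            step()
            timings[name].append(tick_count_ms() - start)
    return timings


if __name__ == "__main__":
    # Hypothetical stand-ins for the real acquisition and file-write steps.
    result = benchmark_sections(
        {"acquire": lambda: time.sleep(0.01), "write_file": lambda: time.sleep(0.03)},
        iterations=5,
    )
    for name, ms in result.items():
        print(f"{name}: {ms} ms")
```

If one section's numbers climb iteration after iteration while the others stay flat, that section is the likely culprit.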

03-15-2016 11:22 AM

@RavensFan wrote:

But I'm still suspicious of the xlsx option on that Express VI.

So am I, particularly as the Help for the Express VI suggests that the xlsx option works even if Office is not installed. This means that LabVIEW itself is somehow generating an Excel-compatible file. That would certainly make me nervous ...

Bob Schor

03-15-2016 11:29 AM

I just checked, and Mattecst is apparently coding in LabVIEW 2013. Too bad -- if LabVIEW 2014 or 2015 were being used, I would suggest using the Report Generation Toolkit and simply writing the data (as rows) to a named Excel Workbook. Of course, one small problem is that there are Express VIs all over the place in this code, along with Dynamic Wires, so who knows what the data format is, and how the rows and columns are arranged ... But it could be done.

I would estimate that if a row contained 1000 entries, and you wanted to write one row per second, the RGT could probably keep up with that, no matter how large the file becomes (assuming you have enough disk space). It is certainly easy enough to test ...

Bob Schor

03-15-2016 11:49 AM

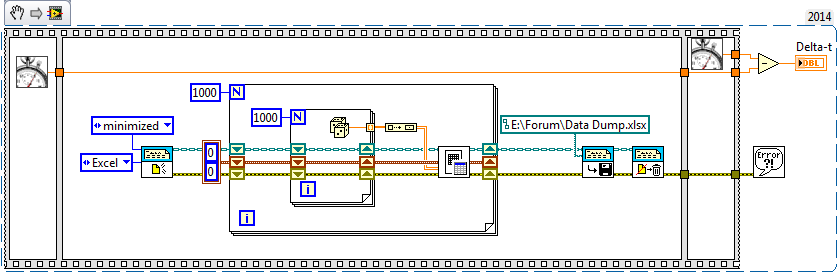

OK, I did the test. This routine generates 1000 random numbers/row, writes 1000 rows, then closes Excel.

The resulting .xlsx file is about 6.8 MB, and the entire process took less than 15 seconds, which works out to under 15 milliseconds per row (15 s / 1000 rows). Pretty good, eh?
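The figure above is total time divided by rows written. Timing each row separately also shows whether the write cost stays flat as the file grows, which is the symptom this thread is about. A minimal Python sketch under stated assumptions: it writes a tab-delimited text file (the route the original poster eventually took), not an .xlsx file, and `log_rows` is a hypothetical helper, not a LabVIEW or Report Generation Toolkit function.

```python
import os
import random
import tempfile
import time
from typing import List


def log_rows(path: str, rows: int, cols: int, seed: int = 0) -> List[float]:
    """Append `rows` rows of `cols` random numbers to a tab-delimited text file.

    The file is opened, appended to, and closed once per row, roughly like
    writing once per loop iteration. Returns the write time of each row in ms.
    """
    rng = random.Random(seed)
    per_row_ms: List[float] = []
    for _ in range(rows):
        line = "\t".join(f"{rng.random():.6f}" for _ in range(cols)) + "\n"
        start = time.perf_counter()
        with open(path, "a", encoding="ascii") as f:
            f.write(line)
        per_row_ms.append((time.perf_counter() - start) * 1000.0)
    return per_row_ms


if __name__ == "__main__":
    # Same shape as the test above: 1000 rows of 1000 random numbers.
    with tempfile.TemporaryDirectory() as d:
        ms = log_rows(os.path.join(d, "log.txt"), rows=1000, cols=1000)
        first, last = ms[:100], ms[-100:]
        print(f"mean ms/row: {sum(ms) / len(ms):.3f}")
        print(f"first 100 rows: {sum(first) / 100:.3f} ms, last 100 rows: {sum(last) / 100:.3f} ms")
```

Comparing the first and last 100 rows is a quick check: roughly equal averages suggest the per-row cost is not growing with file size. Absolute numbers will vary with disk and OS.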

Bob Schor

03-15-2016 11:51 AM

Thanks, but I solved it by changing to a text file and switching my VI to a producer/consumer architecture.

Now the sampling is smooth and constant.

Thanks to everyone who helped me through this.
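The producer/consumer split the poster describes can be sketched in Python with a thread-safe queue standing in for a LabVIEW queue: the acquisition loop only enqueues, and a separate loop does the file writes, so a slow write cannot stretch the acquisition period. This is a hedged sketch, not the poster's VI; `producer`, `consumer`, `run_logging`, the `STOP` sentinel, and the placeholder sample values are all hypothetical.

```python
import os
import queue
import tempfile
import threading
import time
from typing import List, Optional

STOP = None  # sentinel the producer enqueues after its last sample


def producer(q: "queue.Queue[Optional[List[float]]]", n_samples: int, period_s: float) -> None:
    """Acquire at a fixed period and enqueue each sample; this loop never touches the disk."""
    next_tick = time.monotonic()
    for i in range(n_samples):
        sample = [float(i), i * 0.5]  # placeholder for a real acquisition
        q.put(sample)
        next_tick += period_s
        time.sleep(max(0.0, next_tick - time.monotonic()))
    q.put(STOP)


def consumer(q: "queue.Queue[Optional[List[float]]]", path: str, written: List[int]) -> None:
    """Dequeue samples and write them to a tab-delimited text file until STOP arrives."""
    with open(path, "w", encoding="ascii") as f:
        while True:
            sample = q.get()
            if sample is STOP:
                break
            f.write("\t".join(f"{x:g}" for x in sample) + "\n")
            written[0] += 1


def run_logging(path: str, n_samples: int, period_s: float) -> int:
    """Run the consumer in a background thread and the producer in this thread."""
    q: "queue.Queue[Optional[List[float]]]" = queue.Queue()
    written = [0]  # mutable counter shared with the consumer thread
    worker = threading.Thread(target=consumer, args=(q, path, written))
    worker.start()
    producer(q, n_samples, period_s)
    worker.join()
    return written[0]


if __name__ == "__main__":
    with tempfile.TemporaryDirectory() as d:
        count = run_logging(os.path.join(d, "samples.txt"), n_samples=10, period_s=0.05)
        print(f"wrote {count} samples")
```

The producer schedules against an absolute `next_tick` rather than sleeping a fixed amount, so small delays in one iteration do not accumulate into drift.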

03-15-2016 12:14 PM

I'd be curious to know which of those 2 things fixed it.

Producer/consumer is a good idea. But my guess is that the actual fix was changing to the text file.

Two seconds is a slow loop rate and I think you could probably still do file I/O in that loop without a problem, but converting it to a producer/consumer is safer.

If the problem is with the xlsx version of the Express VI like I suspect, then you'd still have an issue even with producer/consumer. The producer would still be able to keep up with the 2 second loop rate, but the consumer would slow down over time on the Express VI and you'd have a slowly building queue. Eventually the consumer would catch up, as long as the producer stopped sending data before the PC ran out of memory.
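The slowly building queue described above can be illustrated with a small deterministic model. It is a hypothetical sketch: it assumes each write gets slower by a fixed amount per row already written (an invented growth model, not a measurement of the Express VI), with one sample arriving every `period_s` seconds, and it ignores memory limits. `simulate_backlog` is a hypothetical name.

```python
from typing import List, Tuple


def simulate_backlog(
    n_samples: int, period_s: float, base_write_s: float, growth_s_per_row: float
) -> Tuple[List[int], float]:
    """Model a producer enqueuing every `period_s` and a consumer whose k-th write
    (0-based) takes base_write_s + growth_s_per_row * k seconds.

    Returns the number of unwritten samples (queued or being written) just after
    each arrival, and the time at which the consumer finishes the last write.
    """
    finish_times: List[float] = []
    consumer_free_at = 0.0
    for k in range(n_samples):
        arrival = k * period_s
        start = max(arrival, consumer_free_at)  # wait for the sample or for the previous write
        consumer_free_at = start + base_write_s + growth_s_per_row * k
        finish_times.append(consumer_free_at)

    backlog: List[int] = []
    done = 0
    for k in range(n_samples):
        arrival = k * period_s
        while done < n_samples and finish_times[done] <= arrival:
            done += 1
        backlog.append(k + 1 - done)
    return backlog, consumer_free_at


if __name__ == "__main__":
    n, period = 200, 2.0
    backlog, drained_at = simulate_backlog(n, period, base_write_s=0.5, growth_s_per_row=0.02)
    print("backlog every 20 samples:", backlog[::20])
    print(f"last sample arrives at {(n - 1) * period:.0f} s; queue drains at {drained_at:.0f} s")
```

With these made-up numbers the write time passes the 2 s period partway through the run, after which the backlog climbs; the returned drain time falls after the last arrival, matching the point that the consumer only catches up once the producer stops.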
