LUTs resources FPGA

Solved! 01-30-2017 03:39 AM

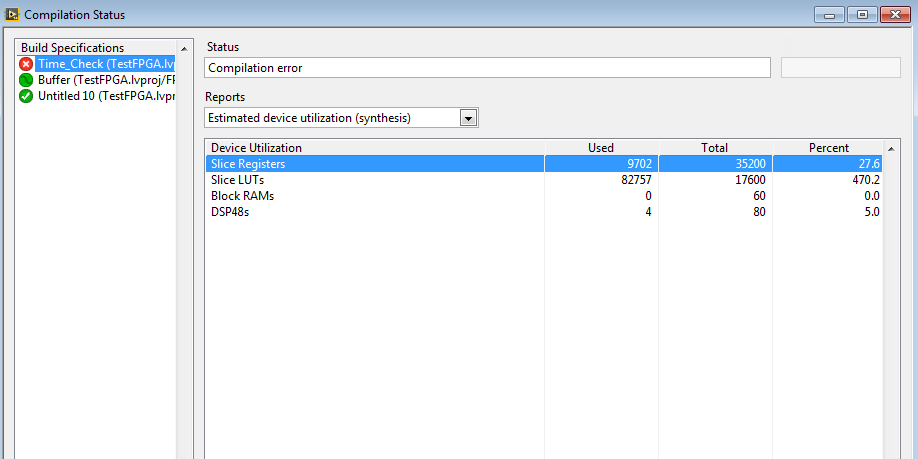

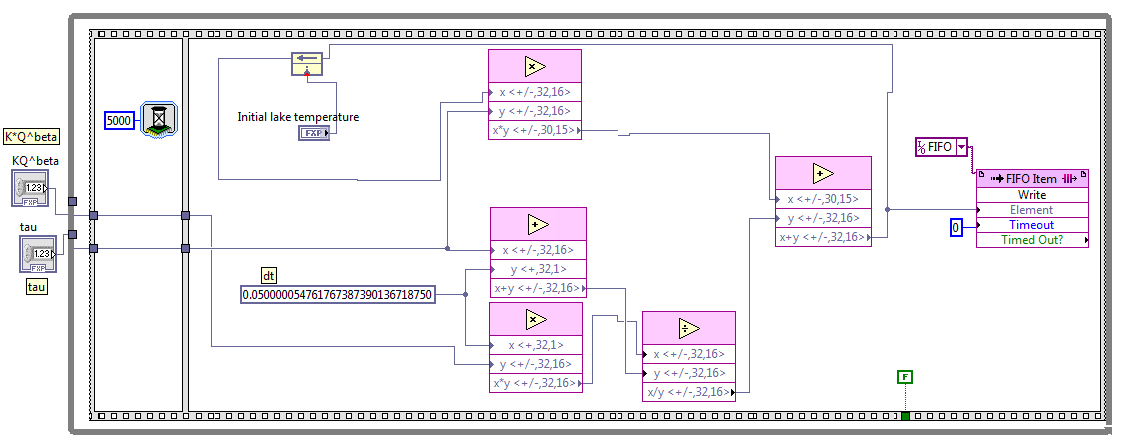

Good morning, I have a question about LUT and DSP48 resources on my myRIO FPGA. I have a fairly simple piece of code that I am trying to compile for my FPGA. This is how it looks:

How is that possible? 470.2 percent? Maybe something is wrong with my settings, or maybe something is broken. I would appreciate any kind of help.

Thank you 🙂

01-30-2017 04:23 AM

Could you please post your FPGA VI (no need for the entire project) and we'll check it out?

Thanks!

01-30-2017 06:02 AM

Also, it looks like you are dividing by a known value, since the divisor is the sum of a control value (tau) and a constant (0.05).

Since division on an FPGA is very expensive (and time-consuming), it would be more efficient to calculate M = 1 / (tau + 0.05) on your host and multiply by M on your FPGA instead.
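To make the idea concrete, here is a minimal Python sketch of the reciprocal-multiply approach. It only models the arithmetic; it is not LabVIEW code. The function names and the 16 fractional bits are assumptions chosen for illustration, not values taken from the posted VI.

```python
def to_fixed(value: float, frac_bits: int) -> int:
    """Quantize a real value to an integer with frac_bits fractional bits."""
    return round(value * (1 << frac_bits))


def host_reciprocal(tau: float, frac_bits: int = 16) -> int:
    """Host side: compute M = 1 / (tau + 0.05) once and quantize it."""
    return to_fixed(1.0 / (tau + 0.05), frac_bits)


def fpga_scale(x_fixed: int, m_fixed: int, frac_bits: int = 16) -> int:
    """FPGA side: one multiply and one shift stand in for the divide."""
    # The product carries 2 * frac_bits fractional bits; shift back to frac_bits.
    return (x_fixed * m_fixed) >> frac_bits


if __name__ == "__main__":
    FRAC_BITS = 16                    # assumed fixed-point format
    tau, x = 0.2, 3.0                 # hypothetical control value and sample
    m_fixed = host_reciprocal(tau, FRAC_BITS)
    y_fixed = fpga_scale(to_fixed(x, FRAC_BITS), m_fixed, FRAC_BITS)
    print(y_fixed / (1 << FRAC_BITS), "vs exact", x / (tau + 0.05))
```

The host only has to recompute M when tau changes, so the FPGA loop never contains a divider. The price is a small quantization error in M, which depends on the number of fractional bits you pick.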

01-30-2017 06:37 AM

OK, I am attaching my VI (Buffer.vi), saved in LabVIEW 2014. If an earlier version is needed, let me know.

LocalDSP, I would prefer to keep my calculations on the FPGA, as I am reducing the host's involvement to a minimum. It's a dynamic host interface (several FPGA bitfiles), which is why there is a tau control at the beginning.

And besides that, isn't it still weird that two division calculations would take almost 500% of my LUT resources? I am really confused 😕 Thank you for the feedback!

01-30-2017 06:45 AM

Oh, and I also forgot to add that I tried to do something similar using DSP48E blocks, which were set to divide my fixed-point numbers. In that case my LUT resources were exceeded as well.

And there I don't use any High Throughput Math Divide block. So there must be something I am overlooking and do not understand.

01-30-2017 07:36 AM

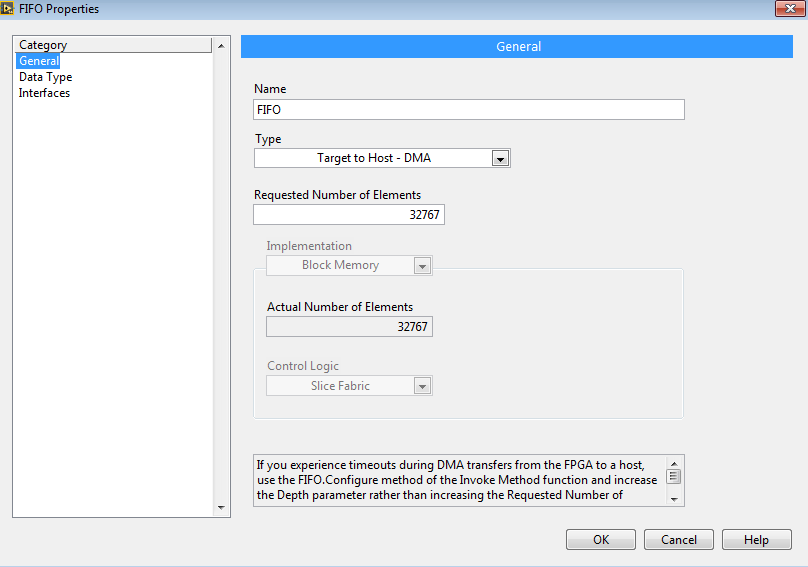

I noticed that you aren't using any BRAM at all, so I guess your FIFO is not a Target-to-Host DMA type.

What type of FIFO have you defined, how deep is it, and more importantly, what implementation have you selected? Since you are not using BRAM, you can quickly run out of resources if the FIFO is implemented in LUTs or flip-flops.
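As a rough back-of-the-envelope check, the Python sketch below estimates how many storage bits a FIFO needs and compares that with a flip-flop budget, using the fact that one flip-flop holds one bit. The element width, the depths, and the flip-flop count are all assumptions for illustration; substitute the numbers for your own FIFO and target.

```python
def fifo_storage_bits(depth: int, width_bits: int) -> int:
    """Raw storage needed by a FIFO: one bit per element bit."""
    return depth * width_bits


def utilization_percent(required: int, available: int) -> float:
    """Required resources as a percentage of what the device offers."""
    return 100.0 * required / available


if __name__ == "__main__":
    WIDTH_BITS = 32                  # assumed element width
    FLIP_FLOPS_AVAILABLE = 35_200    # assumed budget; check your target's datasheet

    for depth in (1023, 65535):      # illustrative depths
        bits = fifo_storage_bits(depth, WIDTH_BITS)
        pct = utilization_percent(bits, FLIP_FLOPS_AVAILABLE)
        print(f"depth={depth}: {bits} bits, {pct:.0f}% of the flip-flop budget")
```

This ignores control logic and any packing the compiler does, so treat it as an order-of-magnitude estimate only. It does show why a deep FIFO that ends up outside Block RAM can push utilization far above 100%.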

01-30-2017 08:00 AM

It actually is a Target-to-Host DMA FIFO, so that may not be the reason 😞

01-30-2017 08:27 AM

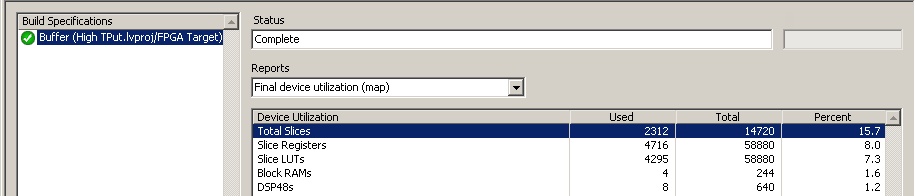

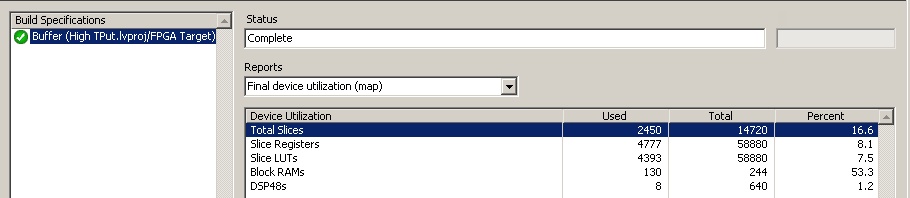

I have to admit it's weird. I've compiled your VI with two different Target-to-Host DMA FIFO sizes, 1023 and 65535 elements respectively, and it passed without problems, with an obvious Block RAM increase for the larger FIFO.

Here are the two corresponding compile reports for the target I used (PXIe 7965R). I do not have myRIO installed, so I cannot fully reproduce your situation. So somehow the FIFO isn't using BRAM but rather slices on your target.

Target-to-Host DMA FIFO of 1023 elements

Target-to-Host DMA FIFO of 65535 elements

01-30-2017 08:31 AM

I forgot: the following is not meant to be a solution to your problem, but can you try reducing your FIFO size to 1023 and see how it compiles? This would prove or disprove the theory and also allow you to move on with your project.

01-30-2017 10:28 AM

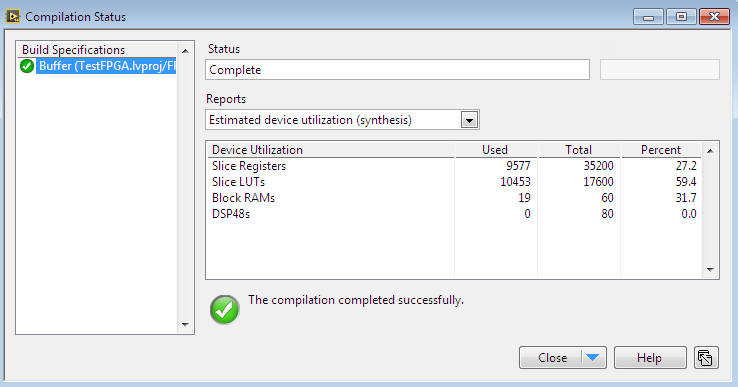

Wow, you were right! Now I managed to compile this code 🙂 I am so grateful. I wish I had turned to you yesterday; I wouldn't have lost a whole night struggling! My present results:

For the following piece of code:

But I still have a question: why is my program not using those DSP48s for the division? Wouldn't that be more efficient?

Thanks once again!

Jola