Maths problem, converting change in voltage to change in displacement.

04-06-2016 10:18 AM

I get an input from an LVDT that ranges from roughly 0 to 5 V. The arm travel on the LVDT is around 15.6 cm (156 mm), so I've calculated that every time the arm moves 1 mm the voltage changes by 0.03205128 V (5 V / 156 mm). How do I go about the calculations to show this on an indicator? To start with, I've tried using a comparison, so that every time the voltage equals, let's say, 4.7 V, a case structure increments an indicator by one. The problem is that the voltage produced by the LVDT has too many decimal places, so it never exactly equals 4.7 V. How can I limit it to one or two decimal places?

Essentially what I want to show is that a change in voltage in the LVDT corresponds to a change in displacement of the arm. So a decrease in voltage means an increase in displacement and vice versa.

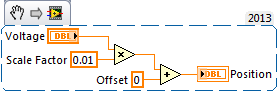

I have attached my code to show what I'm trying to get at.

Thanks for your help.

04-06-2016 10:28 AM - edited 04-06-2016 10:30 AM

You just need to 'scale' the voltage into a distance: multiply the voltage by a scale factor to convert it to distance, and add an offset if you want the position relative to a 'start' point. If one decreases when the other increases, your scale factor needs to be a negative number. Wire the output of the multiply/add to a numeric indicator.
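
For anyone who wants to see the arithmetic written out, here is a rough sketch in Python standing in for the LabVIEW multiply/add nodes. It assumes the LVDT is perfectly linear, that the range is exactly 0 to 5 V over 156 mm (the original post only says "roughly"), and that 5 V is taken as the zero-displacement 'start' point. The names `SCALE_MM_PER_V`, `OFFSET_MM` and `voltage_to_displacement_mm` are made up for illustration.

```python
# Assumed calibration values taken from the question (approximate in practice).
V_MIN = 0.0        # volts at one end of travel
V_MAX = 5.0        # volts at the other end of travel
TRAVEL_MM = 156.0  # total arm travel in millimetres

# Negative scale factor: displacement increases as voltage decreases.
SCALE_MM_PER_V = -TRAVEL_MM / (V_MAX - V_MIN)  # -31.2 mm per volt

# Offset chosen (as an assumption) so that V_MAX reads as 0 mm displacement.
OFFSET_MM = -SCALE_MM_PER_V * V_MAX


def voltage_to_displacement_mm(voltage, scale=SCALE_MM_PER_V, offset=OFFSET_MM):
    """Linear scaling: displacement = scale * voltage + offset."""
    return scale * voltage + offset


if __name__ == "__main__":
    # Format to one decimal place for display only; the stored value keeps
    # full precision, so no equality comparison on the raw voltage is needed.
    for v in (5.0, 4.7, 2.5, 0.0):
        print(f"{v:.2f} V -> {voltage_to_displacement_mm(v):.1f} mm")
```

Because every reading is converted directly, there is no need to test whether the voltage equals a particular value such as 4.7 V. Limiting the number of decimals then becomes a display setting on the indicator and not part of the calculation.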

04-06-2016 02:20 PM

Brilliant, it works! Thank you