Slow TCP duplex handling on remote connections

03-28-2010 06:47 AM

Hi!

I am working on a TCP client-server architecture for an RPC-based LV code execution.

Now, when running TCP with one TX and one RX in the same while loop, everything becomes extremely slow. The RX-TX data rate drops to about 2 Hz when running against a remote machine on my network, versus about 1000 Hz when I run the same code locally.

Could I have some explanation of this by some NI engineer? Am I missing something obvious here?

Attached is a project zip containing a TCPDuplex VI.

Thankful for help,

Roger Isaksson

03-28-2010 09:11 AM

Hi Roger,

You can handle the read and write operations in separate loops. With two loops, one for writing the data and one for reading it, you should get the performance you need.
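As a rough text-language analogue of the two-loop idea (LabVIEW itself is graphical, so this is only a sketch in Python), one thread reads from the receive connection and hands data to a second thread that writes to the transmit connection. The names `reader_loop`, `writer_loop`, and `start_relay` are made up for this illustration, and the queue stands in for whatever mechanism passes data between the two loops.

```python
import queue
import socket
import threading


def reader_loop(sock: socket.socket, q: queue.Queue) -> None:
    """Read loop: forward every received chunk to the queue."""
    while True:
        chunk = sock.recv(4096)
        if not chunk:          # peer closed the connection
            q.put(None)        # tell the writer loop to stop
            return
        q.put(chunk)


def writer_loop(sock: socket.socket, q: queue.Queue) -> None:
    """Write loop: send every queued chunk until the stop marker arrives."""
    while True:
        chunk = q.get()
        if chunk is None:
            return
        sock.sendall(chunk)


def start_relay(rx_sock: socket.socket, tx_sock: socket.socket):
    """Run the read and write loops in parallel, joined by a queue."""
    q = queue.Queue()
    reader = threading.Thread(target=reader_loop, args=(rx_sock, q))
    writer = threading.Thread(target=writer_loop, args=(tx_sock, q))
    reader.start()
    writer.start()
    return reader, writer
```

Neither loop waits on the other's I/O call here; the only coupling is the queue.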

Mike

03-28-2010 09:30 AM

Mike,

Thanks for the reply. I am already aware of this "solution", but it requires a hackish workaround because I have to pass data between the two loops.

How is it possible to create clean LVOOP code if every LV issue encountered has to be solved with a shoddy "workaround"?

As far as I see it, this is a bug in LV and should be looked into.

Any NI engineer care to respond?

Roger

03-28-2010 10:33 AM - edited 03-28-2010 10:35 AM

Hi Roger,

Why do you think there is a bug? You can do it in only one loop. Maybe you should use smaller timeouts. If it runs well locally but slowly over your network, how can it be a bug in the software?

On which OS do you run this?

Mike

03-28-2010 11:49 AM - edited 03-28-2010 11:56 AM

I get good throughput with two loops passing data between them, so what magic is hindering a single loop from executing the exact same thing without the extra overhead of passing data?

My bet is that there is some issue in the way LV TCP Read and Write execute within a single while loop: most likely they do not run as two parallel instances, as they do in two loops, even though there are two separate TCP reference wires.

I am running the server side on Win7 Professional and the client on XP, both fully patched.

In case you suspect network latency or some other network issue:

From the server pinging the client:

ping 192.168.0.197

Pinging 192.168.0.197 with 32 bytes of data:

Reply from 192.168.0.197: bytes=32 time=2ms TTL=128

Reply from 192.168.0.197: bytes=32 time=1ms TTL=128

Reply from 192.168.0.197: bytes=32 time=1ms TTL=128

From the client pinging the server:

ping 192.168.0.190

Pinging 192.168.0.190 with 32 bytes of data:

Reply from 192.168.0.190: bytes=32 time=3ms TTL=128

Reply from 192.168.0.190: bytes=32 time=2ms TTL=128

Reply from 192.168.0.190: bytes=32 time=1ms TTL=128

Is it possible to have an NI engineer working with LV TCP looking into this?

Edit: I forgot to ask whether you tried running my code.

Roger

03-28-2010 01:25 PM

Mark Yedinak

Certified LabVIEW Architect

LabVIEW Champion

"Does anyone know where the love of God goes when the waves turn the minutes to hours?"

Wreck of the Edmund Fitzgerald - Gordon Lightfoot

03-28-2010 02:12 PM - edited 03-28-2010 02:17 PM

Mark, I am thankful for all the advice, but so far all I have got is workaround-style "fixes" of the kind that seem predominant in the world of LV "programming"!

As stated in my description of the thread, I am not using both read and write on the same connection reference. It is a duplex implementation. Have a look at the code; it is attached to the first post.

My implementation will use something similar to the InputStream and OutputStream classes from Java. This particular one is a SocketStream with two aggregates. The first is an InputStream, implementing a listener and TCP Read for various data types. The second is an OutputStream, implementing TCP Connect and TCP Write for different data types.

The problem: encapsulating a TCP connection reference and then passing data from an InputStream to an OutputStream in the same execution context (that is, inside the same block of code) appears to be impossible, at least if I want decent IO performance.

Clearly a LV bug? 🙂

Roger

03-28-2010 02:30 PM

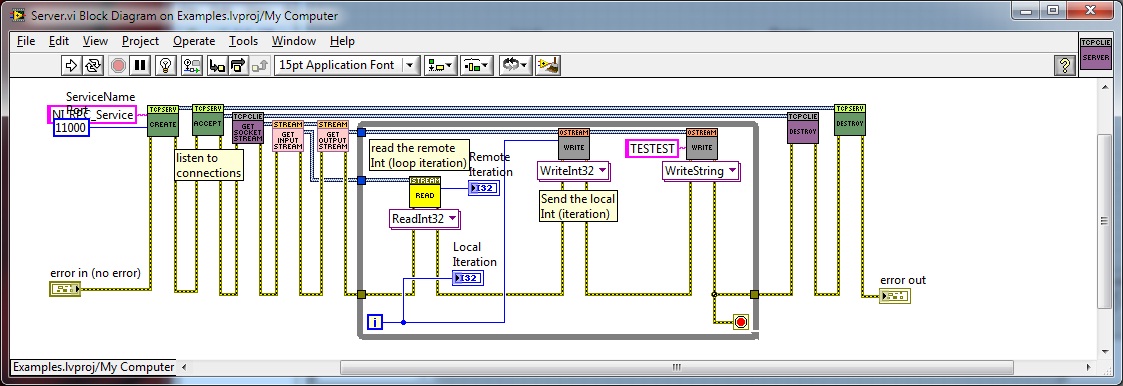

Here are images of the code.

There are a few classes associated. I guess this will do?

Roger

03-28-2010 05:21 PM - edited 03-28-2010 05:26 PM

RogerI wrote:

Now, when running TCP with one TX and one RX in the same while loop everything becomes extremely slow. The RX-TX of data goes to about 2Hz when running on a remote machine on my network, and to about 1000Hz when I run the same locally.

Could I have some explanation of this by some NI engineer? Am I missing something obvious here?

Attached a project zip containing a TCPDuplex vi.

Shot in the dark here, but I'm going to guess that this is not a LabVIEW issue. Have you been able to demonstrate that this doesn't happen with code written in another language?

I suspect that Nagle's algorithm is disabled for loopback connections, and enabled for remote ones. This could explain the speed difference you're seeing, because data going to the local machine would not be delayed, but data for the remote machine would be. It would also make sense to disable Nagle's algorithm for local data since there's no penalty in overhead and network traffic. If you search this forum for Nagle Algorithm you can find ways to disable it; see if that makes a difference.
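To show what disabling Nagle's algorithm means at the socket level, here is a minimal sketch using plain Python sockets rather than LabVIEW. `TCP_NODELAY` is the standard socket option for this; the helper names `disable_nagle` and `nagle_disabled` are made up for the illustration, and how to reach the underlying socket from a LabVIEW TCP reference is not shown here.

```python
import socket


def disable_nagle(sock: socket.socket) -> None:
    """Ask the TCP stack to send small writes immediately instead of coalescing them."""
    sock.setsockopt(socket.IPPROTO_TCP, socket.TCP_NODELAY, 1)


def nagle_disabled(sock: socket.socket) -> bool:
    """Report whether TCP_NODELAY is currently set on the socket."""
    return sock.getsockopt(socket.IPPROTO_TCP, socket.TCP_NODELAY) != 0
```

The option is per socket, so with two separate connections it would have to be set on whichever one does the small writes, on both machines if both send.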

EDIT: By the way, if this turns out to be the correct explanation, you could probably solve it by using a single TCP connection for communication in both directions. What is the advantage in your application to establishing two separate connections? Also, your ZIP file is missing the Data Client VI.
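For comparison, a single connection carrying traffic in both directions could look roughly like the following Python sketch (again not LabVIEW). The server side here just upper-cases one small message and sends it back on the same socket; `echo_once` and `round_trip` are hypothetical names, and the loopback address and single `recv` call are simplifications that only suit a short demo message.

```python
import socket
import threading


def echo_once(listener: socket.socket) -> None:
    """Accept one client, read one small message, reply on the same connection."""
    conn, _ = listener.accept()
    with conn:
        data = conn.recv(1024)       # demo only: assumes the message fits in one read
        conn.sendall(data.upper())


def round_trip(payload: bytes) -> bytes:
    """Send payload and receive the reply over one TCP connection."""
    with socket.socket(socket.AF_INET, socket.SOCK_STREAM) as listener:
        listener.bind(("127.0.0.1", 0))          # port 0: let the OS pick a free port
        listener.listen(1)
        port = listener.getsockname()[1]
        server = threading.Thread(target=echo_once, args=(listener,))
        server.start()
        with socket.create_connection(("127.0.0.1", port), timeout=5) as client:
            client.sendall(payload)              # request goes out...
            reply = client.recv(1024)            # ...reply comes back on the same socket
        server.join()
    return reply
```

Real code would need message framing (for example a length prefix) instead of relying on one `recv` returning the whole message.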

03-28-2010 06:44 PM
