Timing Issues

01-17-2012 09:44 AM

Hi everyone,

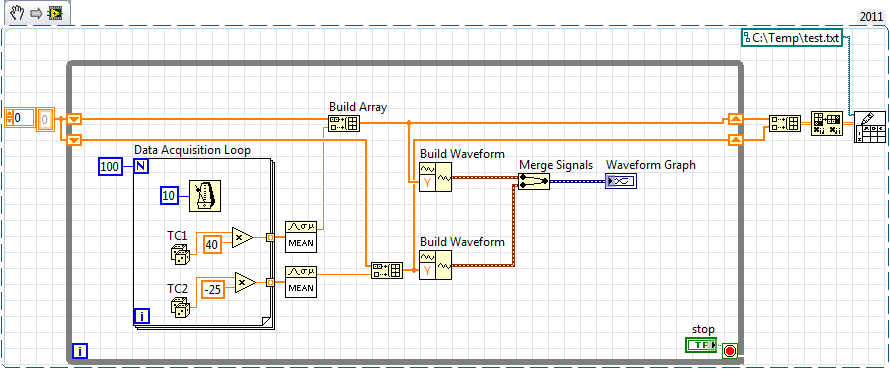

I am having trouble figuring out how to apply proper timing to the VI I've created (see attached). My DAQ boards are two Measurement Computing USB-TC units and one USB-2416. They are sampling temperature data at 100 Hz.

My VI includes a digital display, 2 waveform plots, and a data log function. All of these run in a 1-second timed loop, which is enough time to complete all of these tasks, and a 1-second update rate is perfect for my application.

I want to take a moving average over the 100 samples collected each second in order to reduce noise.

However, if I'm not mistaken, since I am collecting data at 100 Hz and displaying/graphing/logging it at 1 Hz, my VI is really only taking the latest of those 100 samples and displaying/graphing/logging it.

Long story short: how can I sample data at 100 Hz, take a moving average of that data every second, and then use the averaged value in the digital display, 2 waveform plots, and data log?

Many thanks,

Laura

P.S. I know my VI is sloppy. It's the first one I've ever made, so any other unrelated tips are appreciated!

01-17-2012 10:35 AM

The first thing I would recommend is using clusters and/or subVIs to clean up your block diagram and make it more readable. For the averaging, I would create a For Loop inside your While Loop that collects the data first, then pass the collected samples to the Mean function (Mathematics > Probability & Statistics > Mean). I believe this should give you the average value for every iteration of your main loop.
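Since a block diagram can't be pasted as text, here is a rough Python analogue of the loop-and-mean idea, only to show the arithmetic: collect 100 samples, average them, and hand one value per second to the display, plots, and log. The function name `block_means` and the sample readings are made up for illustration and are not part of any LabVIEW or DAQ driver API.

```python
from statistics import fmean


def block_means(samples, block_size=100):
    """Average consecutive, non-overlapping blocks of `block_size` samples.

    With 100 Hz data and block_size=100 this yields one mean per second.
    A trailing partial block is dropped rather than averaged.
    """
    means = []
    for start in range(0, len(samples) - block_size + 1, block_size):
        block = samples[start:start + block_size]
        means.append(fmean(block))
    return means


# Hypothetical data: three seconds of readings at 100 Hz.
readings = [20.0] * 100 + [21.0] * 100 + [22.0] * 100
print(block_means(readings))
```

Strictly speaking this is a block average rather than a sliding moving average: each displayed value covers only the 100 samples from its own second, which matches a 1 Hz update rate.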

Hope this helps.

-Franklin

01-17-2012 11:13 AM

If you are new to LabVIEW, my suggestion may be a bit of a challenge, but it would be better to redesign your code using a Producer/Consumer (Data) architecture. This would be a good example: https://decibel.ni.com/content/docs/DOC-2431
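For anyone who wants to see the shape of that pattern in text form, below is a minimal Python sketch, assuming one loop that only acquires and a second loop that only averages and displays/logs, connected by a queue. It is not the linked LabVIEW example. The names `producer`, `consumer`, and `run` are hypothetical, and the samples are passed in as a list instead of being read from real hardware.

```python
import queue
import threading
from statistics import fmean

SAMPLES_PER_BLOCK = 100  # 100 Hz acquisition, one averaged value per second


def producer(q, samples):
    """Acquisition loop: enqueue every sample as soon as it is available."""
    for sample in samples:
        q.put(sample)
    q.put(None)  # sentinel telling the consumer that acquisition has stopped


def consumer(q, results):
    """Display/log loop: dequeue samples and emit one mean per full block."""
    block = []
    while True:
        sample = q.get()
        if sample is None:
            break
        block.append(sample)
        if len(block) == SAMPLES_PER_BLOCK:
            # In the real VI this value would feed the display, plots, and log.
            results.append(fmean(block))
            block = []


def run(samples):
    """Run both loops in parallel and return the per-second means."""
    q = queue.Queue()
    results = []
    threads = [
        threading.Thread(target=producer, args=(q, samples)),
        threading.Thread(target=consumer, args=(q, results)),
    ]
    for t in threads:
        t.start()
    for t in threads:
        t.join()
    return results
```

The point of the split is that the acquisition loop never waits on plotting or file I/O, so no samples are skipped even if the display/log side runs slowly for a moment.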

I have a quick sample of what CTSFranklin suggested above, but you may find that approach difficult to scale or maintain in the future.