Numeric Limit Test with data array and consecutive/total failure counts

Solved! 10-18-2021 03:36 PM

Hello TestStand Gurus,

I am self taught in TestStand, so I'm not sure if what I want to do is possible. I have come close with trial and error, but can't get it quite right so it is time to ask for help.

Essentially, I have a large array of data (~1k points), and I want to apply a single greater-than-or-equal limit to each element of the array (element >= limit = pass).

The caveat is that I need to track both the total number of failures and the number of consecutive failures (elements that fall below the limit). I will compare each count against its own maximum failure allowance to determine whether the unit passed or failed.

The brute-force method, a For Each loop with If/Else statements, takes way too long to process, and I am trying to improve the processing time. A plus would be that if the maximum number of consecutive failures is exceeded, the processing sets a failure flag and stops. But if the processing time is reduced enough, this is not an issue.
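For readers who want the rule spelled out, here is a minimal sketch of the logic described above. It is written in Python purely for illustration and is not TestStand or LabVIEW code. The names `scan_failures`, `FailureScan`, `max_total`, and `max_consecutive` are hypothetical, and it assumes the scan stops early only when the consecutive allowance is exceeded, with the total checked at the end.

```python
from typing import NamedTuple, Sequence


class FailureScan(NamedTuple):
    passed: bool
    total_failures: int      # failures counted before the scan ended
    longest_run: int         # longest run of consecutive failures seen
    stopped_at: int          # index where the scan stopped early, or -1


def scan_failures(data: Sequence[float], limit: float,
                  max_total: int, max_consecutive: int) -> FailureScan:
    """Single pass over data; an element passes when element >= limit."""
    total = 0
    run = 0
    longest = 0
    for i, value in enumerate(data):
        if value >= limit:
            run = 0          # a passing element ends the current run
            continue
        total += 1
        run += 1
        longest = max(longest, run)
        if run > max_consecutive:
            # Assumption: set the failure flag and stop as soon as the
            # consecutive allowance is exceeded.
            return FailureScan(False, total, longest, i)
    return FailureScan(total <= max_total, total, longest, -1)
```

Because the scan can stop early, `total_failures` is only the count up to the stopping index in that case.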

Any help is greatly appreciated.

Thanks for your time.

10-18-2021 04:15 PM

This is definitely a tricky requirement, and it cannot be solved in a single layer of limit checking, because the results of the first level of limit checking are themselves subjected to a second level of limit checking.

Could you please provide sample data and the results you extract from it? Thinking without constraints for a moment, you may be able to derive some parameters in the code module and subject them directly to limit checking instead of using a two-layer approach.

Soliton Technologies

10-19-2021 11:05 AM

Hi Santhosh,

It doesn't necessarily need to be solved in one step. The methods that I have tried use multiple steps. Please find the attached files. It is a stripped-down debugging version that I was using for development. One is in 2017, and one is in 2014. Set the Locals array to the desired size, and the data will pre-populate on each run. It is currently set for 20 elements.

I have two methods that run in a For loop. One is a Numeric Limit Test, and one is an If/Else statement. The main problem I am trying to solve is the efficiency of processing each array. A 20-element array takes about 1 second to run in debug mode. When running on our test stand in deployment, it takes about 10 to 20 seconds for 1000 data points.

I saw a post where someone was able to evaluate all array values with a Multiple Numeric Limit Test, but I wasn't able to make that work with my failure-counter requirements.

Thanks again for your time.

10-20-2021 04:04 AM - edited 10-20-2021 04:12 AM

My 2 cents,

I would imagine the processing time is due to TestStand tracing. Are you able to disable tracing for your processing steps and see what performance gains you get? I haven't looked at the sequences you posted, so apologies if they already have tracing disabled.

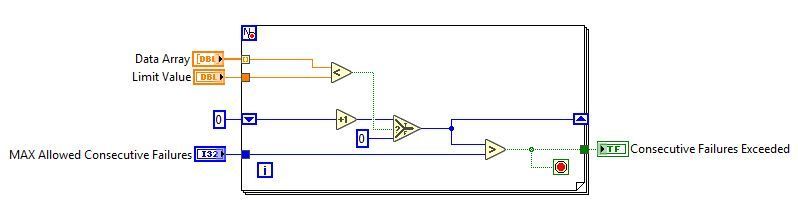

If I were looking for flexibility and efficiency, I would do the processing inside a code module. A module along those lines could crunch the array and indicate whether the data contained a run of consecutive failures above a predefined MAX amount.
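As a rough text illustration of that kind of module (a sketch in Python, not the LabVIEW VI itself), the function below reduces the whole array to two numbers in one call. The name `failure_counts` is hypothetical, and it assumes a failure is any element strictly below the limit, as in the original question.

```python
from itertools import groupby
from typing import Sequence, Tuple


def failure_counts(data: Sequence[float], limit: float) -> Tuple[int, int]:
    """Return (total_failures, longest_consecutive_run) for values below limit."""
    total = 0
    longest = 0
    # groupby splits the data into runs of consecutive passes and failures.
    for is_failure, run in groupby(data, key=lambda v: v < limit):
        if is_failure:
            length = sum(1 for _ in run)
            total += length
            longest = max(longest, length)
    return total, longest


total, longest = failure_counts([5.0, 1.0, 1.0, 5.0, 1.0, 5.0], limit=3.0)
print(total, longest)
```

The calling sequence would then only need to compare the two returned numbers against the total and consecutive failure allowances, rather than looping over every element in the sequence itself.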

Regards

Steve

10-20-2021 09:20 AM

Hi Steve,

Thank you for the feedback. I do have tracing turned on during debugging, but the sequence is run from a LabVIEW call in a separate program, and tracing is turned off during execution.

I agree with the code module approach. We have used it in the past, but I tend to avoid it if I can do the work in TestStand. It is by far the easiest implementation (LabVIEW FTW), but it does cause two minor issues and one major issue with our revision control.

First, some background. We run core LabVIEW-based software which handles data communication, collection, GUI, etc. LabVIEW then launches TestStand scripts which run a test procedure on a unit, collect data points at different conditions, and log them to a .pdf file/printout along with a pass/fail for the unit. The LabVIEW core is similar from bench to bench; the scripts control everything.

Minor:

1. The code is compiled, so the block diagram cannot be viewed and is "fixed" against any modifications on the floor. The source code is on a development system, then pushed to the final bench for checkout/production. Since this is used in tandem with a LabVIEW program, the compiled .vi's must be left in a support directory, and not compiled as an executable. If they are not, the data is not "Public" between the scripts and LabVIEW. This has led to a lot of special .vis that you have to know about when you write the script. This could have changed since 2015, and it is partially our fault due to poor program management of supporting .vis. This is why I tend to do things in TestStand: it helps reduce the number of "one-off" modules.

2. It is easier to make an on-the-fly change on the test bench in the TestStand editor than it is to modify a module on a development stand. This is useful for new models of the same part family that may need their stabilization timeouts increased because they are less stable (but move faster) than previous models. The script change is then pushed back up to the host server, and the changes are signed off.

Major:

3. The way that we manage our configurations is with checksums. All of our LabVIEW code is in one project. The program is compiled into an executable, and all modules are placed in a support directory, which allows TestStand to access them. Each time a change is made to the source code, all the checksums have to be regenerated (automated) and entered into a database. The changes then have to be signed off. Doing most processing in the script is preferred, since a script change affects only a few files instead of the few thousand affected by a recompile. It is much simpler to release a few file changes for production than to release the whole project.
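To illustrate the checksum bookkeeping in item 3, here is a minimal Python sketch, not our actual tooling. The function names are hypothetical, and SHA-256 is an assumption, since the checksum algorithm is not stated above. It builds a checksum manifest for a directory and lists the files whose checksums differ between two manifests.

```python
import hashlib
from pathlib import Path
from typing import Dict, List


def checksum_manifest(root: Path) -> Dict[str, str]:
    """Map each file under root (relative path) to its SHA-256 hex digest."""
    manifest = {}
    for path in sorted(root.rglob("*")):
        if path.is_file():
            name = path.relative_to(root).as_posix()
            manifest[name] = hashlib.sha256(path.read_bytes()).hexdigest()
    return manifest


def changed_files(old: Dict[str, str], new: Dict[str, str]) -> List[str]:
    """Files that were added, removed, or whose checksum changed."""
    names = old.keys() | new.keys()
    return sorted(n for n in names if old.get(n) != new.get(n))
```

A script-only change would show up as a handful of entries in `changed_files`, whereas a recompile of the whole project would list nearly every module.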

It may be something that we have to deal with, which I am ok with, but I wanted to make sure that it was a last resort before I took that approach. I will mark your post as the accepted solution.

Thank you.

10-20-2021 10:05 AM

I hear that. It wouldn't be the first time process overhead has impacted the direction of a solution, and it won't be the last. Hopefully someone else will weigh in here with an idea that fits your process better.