Generalize communication (Variant Reply Payload)

All,

The suggestion/request is mainly captured in Implementation (1) at "https://delacor.com/dqmh-generic-networking-module/"

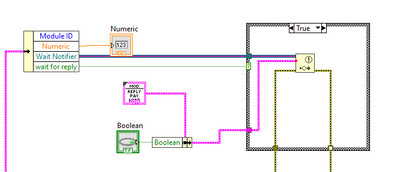

The proposal is to generalize the 'reply' communication by using a 'variant notifier' instead of a 'typedef notifier'.

I agree with this statement from the Delacor website:

"This allows us to send and receive messages without knowing about their actual contents, and that’s the prerequisite for separating the module’s actual use code from the networking code."
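Since DQMH code is graphical, here is only a rough text analogy of that idea in Python, not actual DQMH code. The names `GetStatusReply`, `module_handle_request`, `forward_reply` and `wait_for_reply` are made up for illustration: a queue that accepts any object stands in for the variant notifier, and a dataclass stands in for the scripted reply typedef.

```python
import queue
from dataclasses import dataclass
from typing import Any


@dataclass
class GetStatusReply:
    """Hypothetical reply payload; plays the role of the scripted typedef."""
    temperature: float
    ok: bool


def module_handle_request(reply_channel: "queue.Queue[Any]") -> None:
    # The module still builds its reply from the typed payload ...
    reply = GetStatusReply(temperature=21.5, ok=True)
    # ... but sends it over a channel that accepts any data (the "variant").
    reply_channel.put(reply)


def forward_reply(source: "queue.Queue[Any]", sink: "queue.Queue[Any]") -> None:
    # Stand-in for the networking code: it moves the payload along
    # without knowing what type it carries.
    sink.put(source.get(timeout=1.0))


def wait_for_reply(reply_channel: "queue.Queue[Any]", expected_type: type) -> Any:
    # Only the caller converts the generic payload back to the typedef,
    # similar in spirit to a "Variant To Data" step.
    payload = reply_channel.get(timeout=1.0)
    if not isinstance(payload, expected_type):
        raise TypeError(f"expected {expected_type.__name__}, got {type(payload).__name__}")
    return payload


if __name__ == "__main__":
    module_side: "queue.Queue[Any]" = queue.Queue()
    caller_side: "queue.Queue[Any]" = queue.Queue()
    module_handle_request(module_side)
    forward_reply(module_side, caller_side)
    print(wait_for_reply(caller_side, GetStatusReply))
```

In this sketch only `module_handle_request` and `wait_for_reply` know about `GetStatusReply`; the forwarding step in between is fully generic, which is the separation the quote describes.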

The proposal is to change the DQMH scripting to always use the variant notifier, instead of having to change it manually.

I have actually already changed the scripting to do this automatically, and I have been using it for 2 years. (If there is interest, I can share it with the consortium.)

Personally, I don't see any disadvantages to using a variant instead of a typedef in this case.

Writing the reply payload is not a problem either, as the typedef is still scripted automatically (see screenshot).

Any comments on potential disadvantages?

Regards,

Lucas
