Stream with Replicate example - Clarify recommended buffer sizes

05-16-2017 04:06 AM - edited 05-16-2017 04:08 AM

Hello,

As promised, here is another Channel question.

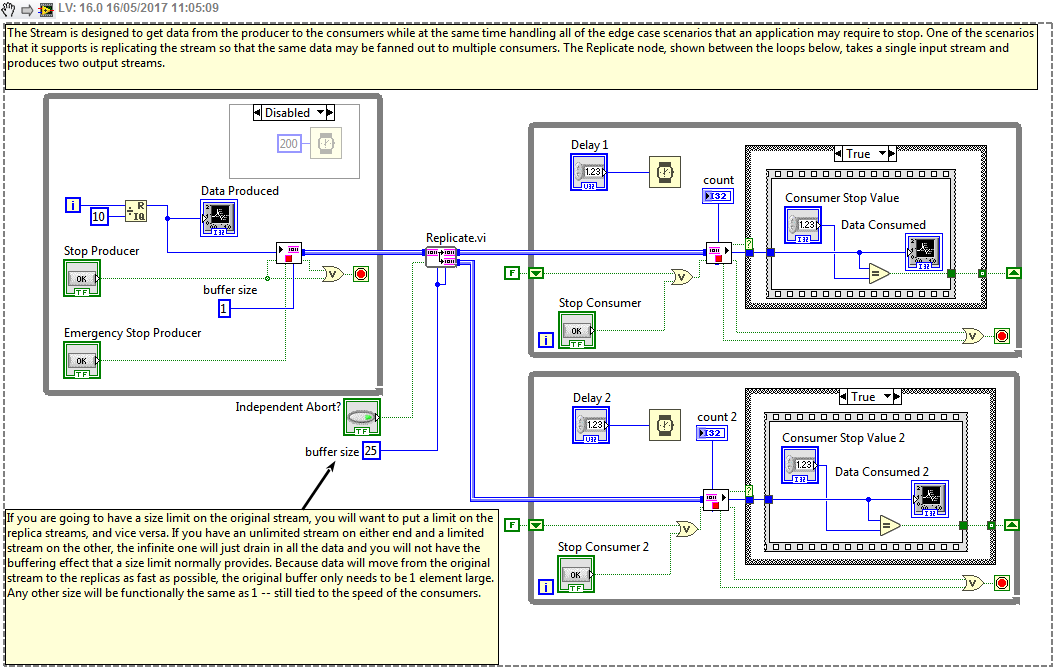

I am referring to the example project "Channel - Stream.lvproj" and the VI called "Stream with Replicate.vi" (see the snippet to follow my questions more easily).

- I really like the Replicate function: it lets us send the same data to two consumers, which was not possible with a single queue before.

- I understand this is only a demo, so the two consumers are artificially slowed down (Delay 1 and Delay 2, in ms) to represent file operations, calculations, etc.

- I am thinking about a real life scenario, and how I would specify the buffer sizes.

- The example states that: "If you are going to have a size limit on the original stream, you will want to put a limit on the replica streams, and vice versa. If you have an unlimited stream on either end and a limited stream on the other, the infinite one will just drain in all the data and you will not have the buffering effect that a size limit normally provides. Because data will move from the original stream to the replicas as fast as possible, the original buffer only needs to be 1 element large. Any other size will be functionally the same as 1 -- still tied to the speed of the consumers."

- Imagine a real-life scenario: I have a DAQmx Read in the producer, and it generates a data packet every 200 ms, say.

- I do not want the iteration speed of the producer to be affected by the consumer loops, so I would set the buffer size of the Stream Writer to "1" (as in the example VI). But I would set an unlimited buffer for Replicate.vi, since I want a lossless stream.

- The description says this is not a good idea, because: "If you have an unlimited stream on either end and a limited stream on the other, the infinite one will just drain in all the data and you will not have the buffering effect that a size limit normally provides. "

I think I do not fully understand how these Streams with Replicate work. What I am trying to do is use Stream + Replicate.vi instead of two queues with unlimited buffers. That is why I would set the buffer size of Replicate.vi to unlimited. Why would I not get the "buffering effect" in this case? Note that I assume the consumer loops ALMOST always iterate faster than the producer; otherwise I would obviously get a memory leak with an infinite buffer.

Hmm, isn't what the example describes actually (theoretically) a lossy queue, unless I set the buffer to a very high value (in the extreme, roughly the size of RAM), which I could do?

If someone could explain these matters to me, thanks very much! Also, how would you set up a DAQmx producer with two consumers so that it works losslessly?

PS: Extra question: why does "0" represent "unlimited" size for Channel functions? For queues, "-1" means unlimited. Does this change have any meaning?

06-01-2017 04:18 PM

The "buffering effect" is the throttling of the producer by a slow consumer when the buffer is bounded. If you set a bounding size, it is *because* you want that limitation. And if you don't limit both the original and the replicas, you don't get that behavior.
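To make the throttling concrete, here is a minimal Python sketch that uses threads and `queue.Queue` as a stand-in for LabVIEW loops and Stream channels (an analogy, not the LabVIEW implementation). `replicator` plays the role of Replicate.vi, the original stream holds one element as in the example, and no consumers run, so we can count how many writes the producer gets in before it is forced to wait. The function names are hypothetical.

```python
import queue
import threading


def replicator(original, replicas):
    """Copy every element of the original stream into each replica (the role of Replicate.vi)."""
    while True:
        item = original.get()
        for replica in replicas:
            replica.put(item)  # blocks while this replica is bounded and full


def count_accepted(replica_size, attempts=50, timeout=0.5):
    """Write with no consumers running; count how many writes succeed before the producer stalls."""
    original = queue.Queue(maxsize=1)  # original stream: 1 element, as in the example
    # In queue.Queue, maxsize <= 0 means unlimited.
    replicas = [queue.Queue(maxsize=replica_size) for _ in range(2)]
    threading.Thread(target=replicator, args=(original, replicas), daemon=True).start()
    accepted = 0
    for i in range(attempts):
        try:
            original.put(i, timeout=timeout)  # the producer waits at most `timeout` seconds
        except queue.Full:
            break  # throttled: the stalled consumers push back all the way to the producer
        accepted += 1
    return accepted
```

With unlimited replicas, `count_accepted(0)` lets all 50 writes through: the one-element original buffer never throttles anything because the replicator drains it immediately. With 3-element replicas, `count_accepted(3)` stalls after 5 writes (3 sitting in the replicas, 1 held by the blocked replicator, 1 in the original buffer), which is why the limit has to be on the replicas for the producer to feel the consumers' speed.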

06-01-2017 04:25 PM

> ps: Extra question: why "0" represents "unlimited" size for Channel functions? For Queues, the "-1" means unlimited. Does this change has any meaning?

A queue size of 0 makes no sense, so the queue functions return an error if you pass zero.

We didn't want to have to put an error cluster on the channels for such a dumb reason. So we made zero or any negative value mean unlimited. Arguably it's a better choice anyway.
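To illustrate the two conventions side by side, here is a hypothetical Python helper that maps a channel-style size onto the queue-style size described above. Incidentally, Python's own `queue.Queue` follows the channel-style rule: a `maxsize` of zero or less means the queue is unbounded.

```python
import queue

QUEUE_UNLIMITED = -1  # queue convention from this thread: -1 means unlimited, 0 is an error


def channel_size_to_queue_size(channel_size: int) -> int:
    """Hypothetical helper: channel convention (0 or any negative = unlimited) -> queue convention."""
    return QUEUE_UNLIMITED if channel_size <= 0 else channel_size


# Python's queue.Queue already treats maxsize <= 0 as "unbounded".
unbounded = queue.Queue(maxsize=-1)
for i in range(1000):
    unbounded.put_nowait(i)  # never raises queue.Full
```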

06-01-2017 04:28 PM

> Also, how would you setup a DAQmx Producer with two Consumers to work in a lossless way?

I do not understand the question. The example (the very picture included in your post) already shows a lossless setup with one producer and two consumers.
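For readers who want that structure in text form, here is a Python sketch of the same lossless layout: one producer, a one-element original stream, a replicator, and two consumers reading unlimited replicas. Threads and `queue.Queue` are an analogy for LabVIEW loops and Stream channels; the `STOP` sentinel roughly takes the place of the channel's "last element?" flag, and all names are hypothetical.

```python
import queue
import threading

STOP = object()  # end-of-stream marker, roughly the channel's "last element?" flag


def producer(out, n_packets):
    for i in range(n_packets):
        out.put(i)  # stands in for one DAQmx Read result
    out.put(STOP)


def replicate(src, dests):
    while True:
        item = src.get()
        for dest in dests:
            dest.put(item)  # unlimited replicas never block
        if item is STOP:
            return


def consumer(src, sink):
    while True:
        item = src.get()
        if item is STOP:
            return
        sink.append(item)  # stands in for logging, analysis, etc.


def run(n_packets=100):
    original = queue.Queue(maxsize=1)          # 1-element original stream, as in the example
    replicas = [queue.Queue(), queue.Queue()]  # default maxsize=0: unlimited
    results = [[], []]
    threads = [
        threading.Thread(target=producer, args=(original, n_packets)),
        threading.Thread(target=replicate, args=(original, replicas)),
        threading.Thread(target=consumer, args=(replicas[0], results[0])),
        threading.Thread(target=consumer, args=(replicas[1], results[1])),
    ]
    for t in threads:
        t.start()
    for t in threads:
        t.join()
    return results
```

`run(100)` returns two lists that each hold all 100 packets in order: nothing is dropped, and the only wait the producer ever sees is the replicator moving one element along.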

06-01-2017 11:17 PM - edited 06-01-2017 11:23 PM

@AristosQueue (NI) wrote:

> Also, how would you setup a DAQmx Producer with two Consumers to work in a lossless way?

> I do not understand the question. The example (the very picture included in your post) already shows how to set up a producer and two consumers that is lossless.

I think I am "overthinking" the buffer sizing scenarios a bit 🙂 So, to rephrase my question: if I want to replace a queue with an unlimited buffer by a Stream channel, do I simply not specify any buffer sizes, and so get unlimited buffers?

Edit: in the picture I posted, the consumers have a buffer size of 25. If the consumers slow down for any reason and that 25-element buffer fills up, what happens in the producer loop? The producer is specified with a one-element buffer, so when the consumer buffers are full, the producer will start to wait, yes? But if there is an ongoing DAQmx task in the producer, which requires fixed and constant iterations, this will cause a problem, no?

06-02-2017 09:01 AM

@Blokk wrote:

> ...Edit: in the picture what I posted, the consumers have a buffer size of 25. If the consumers slow down for any reason, and that 25 element buffer fills up, what happens in the producer loop? The producer specified with one element buffer size, so when the consumer buffers get full, the producer will start to wait, yes? But if there is an ongoing DAQmx task in the producer, which requires fix and constant iterations, this will cause problem, no?

It seems unnatural to me to use a fixed-size queue for a constant-rate DAQ loop, since the data arrives at a constant rate and the hardware buffer has to be kept empty to avoid data being overwritten.

I would think the exception would be an RT application where jitter is a concern. In that case the consumer has to be written so that it introduces no delays and keeps up with the newly acquired data.

I was once told "Queues are almost always completely full or completely empty."

Keeping up with a DAQ process is the case where we want to keep the queue nearly empty.

The "nearly full" situation would apply if we were converting a multi-gigabyte data file as quickly as possible. In that case, reading additional data from the file can be paused to let the consumers that decode the data stream crunch numbers as fast as possible.

Maybe my understanding is wrong, but queues and channels are, after all, buffers. The decision we have to make is whether the buffer gets stretched or squished.

Just my 2 cents,

Ben

06-02-2017 09:28 AM

Yes, this is the point I was asking about. So, for example, for a DAQmx producer/consumer using Streams, I just do not specify fixed buffer sizes, and it will then behave like a queue with an unlimited buffer size...

06-02-2017 09:38 AM

@Blokk wrote:

> Yes, this is the point I was asking about. So for example in a case of a DAQmx producer/consumer using Streams, I just do not specify fixed buffer sizes, thus it will behave like a Queue with unlimited buffer size...

If you are wrong about that, I am equally wrong.

Ben

06-02-2017 12:28 PM

@Blokk wrote:

> I think I am "overthinking" the buffer sizing scenarios a bit 🙂 So to reformulate my question, if I want to replace a Queue with unlimited buffer, into a Stream channel, I just simply do not specify buffer sizes at all, thus having unlimited buffers?

Correct. Either don't specify a buffer size or pass in zero or any negative value to indicate unlimited.

@Blokk wrote:

> Edit: in the picture what I posted, the consumers have a buffer size of 25. If the consumers slow down for any reason, and that 25 element buffer fills up, what happens in the producer loop?

It waits and misses a scheduled read.
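A small Python sketch of that situation, assuming a fixed-rate producer that can afford to wait at most one acquisition period per write (the `acquire` function and the 10 ms period are hypothetical). The 25-element buffer matches the example picture, and nothing drains it, as if both consumers had stalled.

```python
import queue


def acquire(buffer, packets, period_s):
    """Hypothetical fixed-rate producer: each write may wait at most one acquisition period."""
    missed = []
    for packet in packets:
        try:
            buffer.put(packet, timeout=period_s)
        except queue.Full:
            # We already waited a whole period, so the next scheduled read is late.
            missed.append(packet)
    return missed


# 25-element consumer buffer, as in the example picture; no consumer drains it.
consumer_buffer = queue.Queue(maxsize=25)
missed = acquire(consumer_buffer, range(30), period_s=0.01)
```

Once the 25 slots are full, every later write times out, so each of those iterations would have missed its scheduled read. In a real continuous DAQmx acquisition the hardware buffer keeps filling during that wait, which is how data ends up being overwritten.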

@Blokk wrote:

> The producer specified with one element buffer size, so when the consumer buffers get full, the producer will start to wait, yes?

Yes.

@Blokk wrote:

> But if there is an ongoing DAQmx task in the producer, which requires fix and constant iterations, this will cause problem, no?

Yes. So don't limit the buffer size. Limiting the buffer size means you want to pause the producer when that buffer fills up. You could use the Lossy Stream, but, as its name implies, that is lossy. If you want lossless data acquisition, you need either a system that can buffer as much as the consumer needs OR a producer that can pause. If you don't have one of those two things, you cannot have lossless data acquisition. Optimizing your consumer to run faster is about the only option if you cannot afford open-ended buffering.
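For comparison, a lossy buffer never makes the writer wait; it discards data instead. Here is a minimal Python sketch using `collections.deque(maxlen=...)`, assuming the Lossy Stream drops the oldest element when full, the way LabVIEW's Lossy Enqueue Element discards from the front of the queue. `lossy_write` is a hypothetical name.

```python
from collections import deque


def lossy_write(buffer, item):
    """Write without ever blocking; return the element that had to be dropped, or None."""
    dropped = buffer[0] if len(buffer) == buffer.maxlen else None
    buffer.append(item)  # a full deque with maxlen discards its leftmost (oldest) element
    return dropped


stream = deque(maxlen=3)
drops = [lossy_write(stream, i) for i in range(5)]
```

After five writes into a three-element buffer, the two oldest values are gone: the producer kept its schedule, and the consumer simply never sees them.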

06-02-2017 12:48 PM

@AristosQueue (NI) wrote:

> .... Optimizing your consumer to run faster is about the only option if you cannot afford open-ended buffering.

Adding to that thought...

I have used multiple methods when optimizing alone was not enough. One approach can still use Channels and applies the concept of pipelining in the consumer. By introducing another stage of processing in the consumer, part of the work can be carried out on one core and the rest on another core.
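The pipelining idea can be sketched in Python as two stages connected by an intermediate queue, so that the first half of the work on element n+1 overlaps the second half of the work on element n. This is an analogy with hypothetical names. Note that CPython threads show the structure but, because of the global interpreter lock, will not spread pure-Python computation across cores the way parallel LabVIEW loops can.

```python
import queue
import threading

STOP = object()  # end-of-stream marker passed down the pipeline


def stage(src, dst, work):
    """One pipeline stage: apply `work` to each element and hand the result to the next stage."""
    while True:
        item = src.get()
        if item is STOP:
            dst.put(STOP)  # propagate shutdown to the next stage
            return
        dst.put(work(item))


def pipelined_consumer(data, first_half, second_half):
    q_in, q_mid, q_out = queue.Queue(), queue.Queue(), queue.Queue()
    threads = [
        threading.Thread(target=stage, args=(q_in, q_mid, first_half)),
        threading.Thread(target=stage, args=(q_mid, q_out, second_half)),
    ]
    for t in threads:
        t.start()
    for x in data:
        q_in.put(x)
    q_in.put(STOP)
    results = []
    while (item := q_out.get()) is not STOP:
        results.append(item)
    for t in threads:
        t.join()
    return results
```

Because each stage is a single loop reading a FIFO, the output order matches the input order.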

Another approach I learned from Chilly Charlie when he and I were writing code to scrape the discussion forums for the leader board. I cannot say whether it would apply to channels, but I will share it anyway.

We wanted to gather all of the stats for all users for the Monday morning updates. The slow part of the process was the DataSocket read from the NI site, and we could have more than one of those running at a time. So a queue held the list of user IDs, and multiple background loops used that single queue as their source of user IDs: each background loop dequeued from the single queue, a kind of "master list", and handled one user at a time.
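The "several loops sharing one master list" approach is a classic worker pool. Here is a minimal Python sketch in which `fetch_stats` is a hypothetical stand-in for the slow per-user read: the IDs are loaded into one queue up front, and each worker keeps dequeuing until the queue is empty, so every ID is handled exactly once.

```python
import queue
import threading


def fetch_stats(user_id):
    """Hypothetical stand-in for the slow per-user read."""
    return user_id * 10


def gather(user_ids, n_workers=4):
    todo = queue.Queue()
    for uid in user_ids:
        todo.put(uid)  # the shared "master list"
    results = {}
    lock = threading.Lock()

    def worker():
        while True:
            try:
                uid = todo.get_nowait()  # every worker pulls from the same queue
            except queue.Empty:
                return  # list exhausted: this worker is done
            stats = fetch_stats(uid)
            with lock:
                results[uid] = stats

    workers = [threading.Thread(target=worker) for _ in range(n_workers)]
    for w in workers:
        w.start()
    for w in workers:
        w.join()
    return results
```

Since the queue is filled before any worker starts, an empty queue really does mean the work is finished, so `get_nowait` is a safe exit condition here.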

While it did kind of violate the idea of a queue (forgive me, Father of the Polymorphic Queue, for I have sinned), it sped up the collection process so much that we actually had to throttle the queues: we were issuing too many queries too fast and were being seen as a denial-of-service attack.
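One way to throttle such a pool is a shared limiter that enforces a minimum interval between requests across all workers. This is a minimal Python sketch, not the code Ben and Charlie used; the `Throttle` class and its injectable `clock`/`sleep` parameters are hypothetical (the injection only makes it testable without real waiting).

```python
import threading
import time


class Throttle:
    """Allow at most one request per `min_interval` seconds, shared by all worker threads."""

    def __init__(self, min_interval, clock=time.monotonic, sleep=time.sleep):
        self.min_interval = min_interval
        self._clock = clock
        self._sleep = sleep
        self._lock = threading.Lock()
        self._next_allowed = None

    def wait(self):
        """Block until the next request may be issued; return the time it is issued at."""
        with self._lock:  # holding the lock while sleeping serializes the workers' requests
            now = self._clock()
            if self._next_allowed is not None and now < self._next_allowed:
                self._sleep(self._next_allowed - now)
                now = self._next_allowed
            self._next_allowed = now + self.min_interval
            return now
```

Each worker in a pool would call `throttle.wait()` just before issuing its request, so adding workers increases concurrency only up to the rate the server tolerates.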

Just sharing ideas,

Ben