Conversion from U32 to FXP on host side after FIFO DMA transfer

11-29-2023 07:48 AM

Hi Guys,

I have data acquired on the FPGA side from an analog input (voltage range ±10 V).

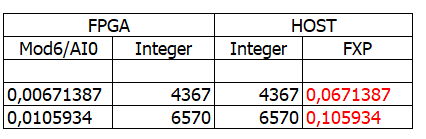

Verification on the FPGA side gives, for example:

raw value = 0.010025, and after conversion to U32 = 6570

After transfer to the host via DMA FIFO, I receive:

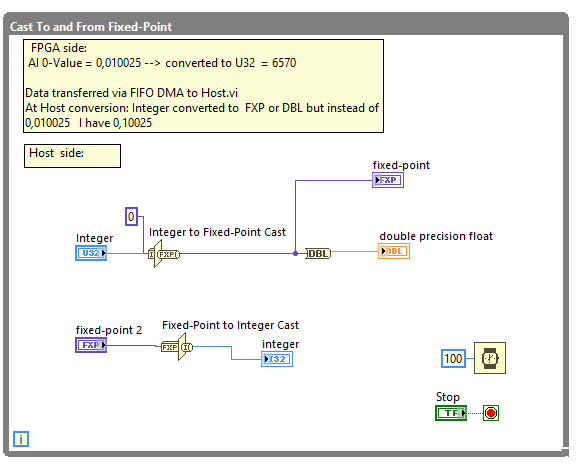

Integer U32 = 6570, but after conversion with the Integer to Fixed-Point Cast function I get 0.1025 instead of 0.010025.

Where is my mistake?

It seems that the conversion is permanently losing data.

Could you help, please?

Thank you

11-29-2023 07:56 AM

11-29-2023 02:26 PM

Hi Gerd,

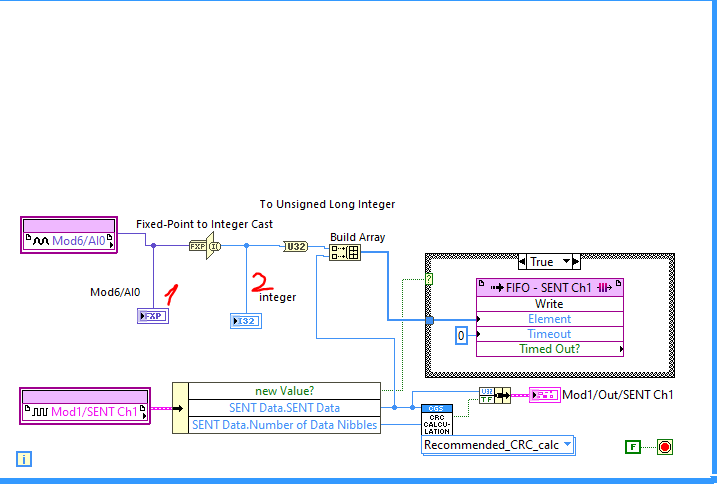

I use a dedicated 4-channel module to receive signals in the SENT (SAE 2010/2016) standard.

On the FPGA, this signal is a U32 integer.

Furthermore, I have a parallel signal from an analog input (Mod6/AI0). This signal is converted from FXP to U32, and then both are transferred to the host via DMA FIFO. The DMA FIFO is configured with U32 as the Data Type and 2 as the Number of Elements Per Write.
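With 2 elements per write, a host-side FIFO read returns one flat U32 array in which the two signals alternate. A minimal Python sketch of splitting it, assuming the SENT word is written first and the AI word second in each pair (the order is not stated in the thread); `deinterleave` is a hypothetical helper name:

```python
from __future__ import annotations


def deinterleave(fifo_data: list[int], channels: int = 2) -> list[list[int]]:
    """Split a flat, interleaved DMA FIFO read into one list per channel.

    Hypothetical helper: assumes element i belongs to channel i % channels.
    """
    if len(fifo_data) % channels != 0:
        # A partial frame would shift every later sample onto the wrong channel.
        raise ValueError("read size is not a multiple of the channel count")
    return [fifo_data[ch::channels] for ch in range(channels)]


# Example with made-up SENT words and the AI word 6570 from the post above.
sent_words, ai_words = deinterleave([111, 6570, 112, 6571])
```

Reading a multiple of 2 elements on each host read keeps the pairs aligned.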

The FPGA code looks like below. If I verify the values at point 1 and point 2, they are "almost the same". I guess the difference appears because the AI updates as fast as possible, so the value that reaches the FXP indicator is "fresher" than the value converted by the Fixed-Point to Integer Cast and shown on the integer indicator.

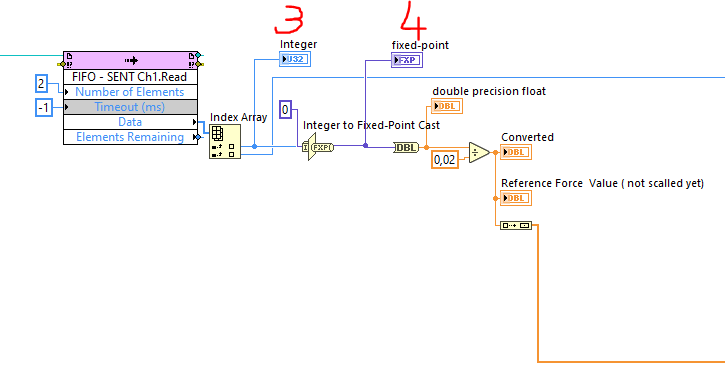

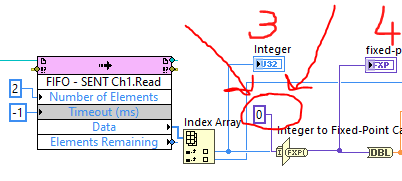
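For reference, a Fixed-Point to Integer Cast keeps the raw bit pattern rather than the numeric value. A minimal Python sketch of that pattern for the NI 9222 data type <±,24,5> (19 fraction bits), assuming the signed 24-bit pattern lands in the low 24 bits of the U32; whether LabVIEW also sign-extends into the upper 8 bits is not covered in this thread. `fxp_24_5_to_u32` is a hypothetical name:

```python
WORD_LENGTH = 24          # <±,24,5>: 24 bits in total
INTEGER_WORD_LENGTH = 5   # 5 integer bits, including the sign bit
FRACTION_BITS = WORD_LENGTH - INTEGER_WORD_LENGTH  # 19


def fxp_24_5_to_u32(value: float) -> int:
    """Return the raw 24-bit two's-complement pattern of `value` as an unsigned int."""
    raw = round(value * (1 << FRACTION_BITS))  # scaled integer, 1 LSB = 2**-19
    if not -(1 << (WORD_LENGTH - 1)) <= raw < (1 << (WORD_LENGTH - 1)):
        raise ValueError("value is outside the <±,24,5> range [-16, 16)")
    return raw & ((1 << WORD_LENGTH) - 1)  # two's complement in the low 24 bits
```

For example, `fxp_24_5_to_u32(0.010025)` gives 5256 and `fxp_24_5_to_u32(-1.0)` gives `0xF80000`. On the FPGA the AI value is already an exact multiple of 2^-19, so the rounding step only matters for typed-in decimals like these.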

On the host side, the code looks like below:

The integer indicator (point 3) shows the values provided by the FPGA, but the decoding shows:

the FXP values are 10 times larger than the original data.

Where is the data corrupted? Does the Integer to Fixed-Point Cast need some specific configuration?

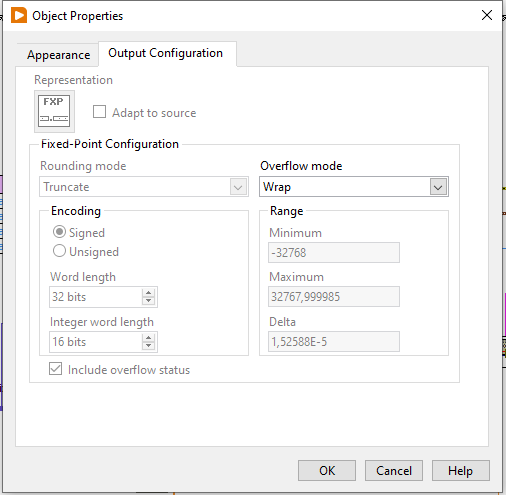

Mine is configured as below:

Thank you in advance for any feedback.

Tomasz

11-29-2023 03:00 PM

You should not use a typecast to convert between the calibrated and raw data. See Working With Calibrated and Uncalibrated Data on NI R Series to learn how to do the conversion properly.

Control Lead | Intelline Inc

11-30-2023 02:39 AM

Hi Zyong,

I work with a cRIO-9068 and an NI 9222 as the AI C Series module, so I am trying to follow "Switching Between Calibrated Fixed-Point and Raw Integer Modes for FPGA I/O Node".

I see some differences.

According to the documentation page "C Series Module Properties Dialog Box for the NI 9222/9223 (FPGA Interface)", I must calibrate on the host side:

Maybe I am doing something wrong with the typecast conversion?

11-30-2023 03:08 AM

The factor of 10 in your difference comes from the ±10 V range.

The FPGA reads the ADC as ±1, but in real-world calibrated terms this is ±10. Your host software is applying this correction, so the values are not as read from the ADC but are calibrated to represent the actual voltage measured.

This is what is meant by RAW and CALIBRATED values.

The 0.006 is the ADC value; the 0.06 is the VOLTAGE.

11-30-2023 03:32 AM

So, on the host side, can I simply divide the FXP value by 10?

11-30-2023 04:28 AM - edited 11-30-2023 04:36 AM

Try using "Number to Boolean Array" and then "Boolean Array To Number" with the correct target data type set, and see whether your results are different.
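The Number to Boolean Array / Boolean Array To Number route keeps the bits and only changes how they are read. A rough Python emulation, assuming element 0 is the least significant bit (as in LabVIEW's Number to Boolean Array) and that the target is a signed 24-bit integer; both helper names are hypothetical:

```python
from __future__ import annotations


def number_to_bool_array(value: int, width: int = 32) -> list[bool]:
    """Emulate Number to Boolean Array: element 0 is the least significant bit."""
    return [bool((value >> bit) & 1) for bit in range(width)]


def bool_array_to_signed(bits: list[bool]) -> int:
    """Rebuild a two's-complement signed integer from an LSB-first bit list."""
    raw = sum(1 << i for i, bit in enumerate(bits) if bit)
    if bits and bits[-1]:  # top bit set -> negative value
        raw -= 1 << len(bits)
    return raw


bits = number_to_bool_array(0xF80000)        # U32 word read from the FIFO
signed_24 = bool_array_to_signed(bits[:24])  # keep only the low 24 bits
```

Here `signed_24` is -524288, which scaled by 2^-19 is -1.0.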

What I said cannot be true if your decimal value is the same; I need to read more carefully. Where do the values 0.06 and 0.006 in your post above come from? I think you are reporting values from several different sources, and there may be driver operations between the direct transmission and the values you're showing. Your incorrect typecast just happens to be approximately (but not quite) a factor of 10 off. The 0.06 and 0.006 do seem to be exactly a factor of 10 apart, though, which looks like a calibrated/raw difference. It's very hard to track down exactly which values you're reporting where.
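One way to see how a mismatched fixed-point type can be "approximately but not quite" a factor of 10 off: the same raw integer scaled with two different numbers of fraction bits differs by a power of 2. A minimal sketch, assuming (hypothetically; the host constant's real configuration is only visible in the screenshots) that the host constant had 16 fraction bits, while the NI 9222 type <±,24,5> has 19:

```python
def fxp_from_raw(raw: int, fraction_bits: int) -> float:
    """Interpret a raw integer as a fixed-point value with the given fraction bits."""
    return raw / (1 << fraction_bits)


raw_word = 6570  # U32 value reported in the thread
as_24_5 = fxp_from_raw(raw_word, 19)    # <±,24,5>: 24 - 5 = 19 fraction bits
as_16_fb = fxp_from_raw(raw_word, 16)   # hypothetical host constant: 16 fraction bits
ratio = as_16_fb / as_24_5              # 2**(19 - 16) = 8, not 10
```

Under that assumption, 6570 reads as about 0.01253 with the correct type and about 0.10025 with 16 fraction bits; a factor of 8 is easy to mistake for the 10 V range scaling.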

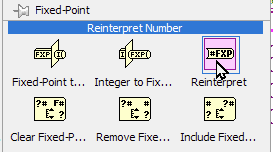

Typecast is not the correct function for this binary conversion. On FPGA, there's "Reinterpret Number", which keeps the bit pattern but tells LV to interpret it as a new data type. Typecast does NOT guarantee keeping the bit pattern at all.

Tip: You can create a VI with "Reinterpret" on FPGA, open it on the host, and it will work. The function is just not on the palette outside of FPGA targets; it's right there next to the typecast.
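The difference between reinterpreting and converting can be sketched outside LabVIEW with Python's standard `struct` module: packing a U32 and unpacking the same 4 bytes as an I32 keeps the bit pattern and the bit width, as a reinterpret does, whereas a numeric conversion would try to keep the value. This is an analogy only, not LabVIEW's implementation:

```python
import struct


def reinterpret_u32_as_i32(value: int) -> int:
    """Keep the 32-bit pattern, change only its interpretation (U32 -> I32)."""
    return struct.unpack("<i", struct.pack("<I", value))[0]


all_ones = reinterpret_u32_as_i32(0xFFFFFFFF)  # same bits, read as signed: -1
small = reinterpret_u32_as_i32(6570)           # top bit clear: value unchanged
```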

11-30-2023 09:11 AM - edited 11-30-2023 09:15 AM

Hi Tomasz,

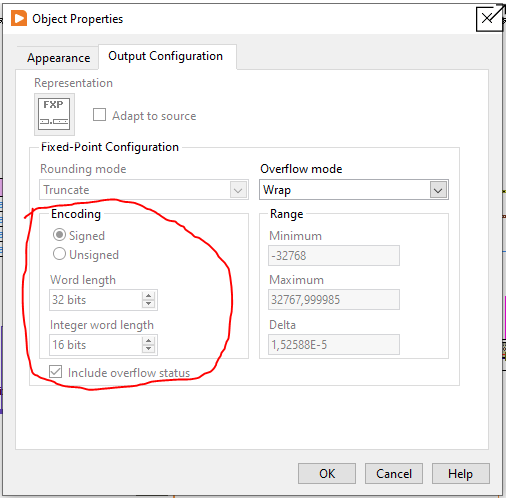

You just forgot to configure the correct fixed-point representation of the constant wired to "Integer to Fixed-Point Cast" on the host side.

This should be Signed, Word length: 24, Integer word length: 5, as are all analog inputs from module NI 9222.
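A minimal Python sketch of the host-side decode this configuration implies for a U32 FIFO word carrying an <±,24,5> value: keep the low 24 bits, sign-extend, and scale by 2^-19. It assumes the value sits in the low 24 bits (masking also tolerates a word that was sign-extended to 32 bits); `u32_to_fxp_24_5` is a hypothetical name:

```python
WORD_LENGTH = 24
INTEGER_WORD_LENGTH = 5
FRACTION_BITS = WORD_LENGTH - INTEGER_WORD_LENGTH  # 19


def u32_to_fxp_24_5(word: int) -> float:
    """Decode a U32 FIFO word as a signed <±,24,5> fixed-point value."""
    raw = word & ((1 << WORD_LENGTH) - 1)   # keep only the 24 data bits
    if raw & (1 << (WORD_LENGTH - 1)):      # sign bit (bit 23) set -> negative
        raw -= 1 << WORD_LENGTH
    return raw / (1 << FRACTION_BITS)       # 1 LSB = 2**-19
```

Both `u32_to_fxp_24_5(0xF80000)` and `u32_to_fxp_24_5(0xFFF80000)` return -1.0, and 6570 decodes to about 0.01253.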

Here I see it is set to the wrong type:

Change the representation of your constant; it should then be reflected automatically in the Output Configuration of the Cast function:

Also, I don't see why the "Integer to Fixed-Point Cast" and vice-versa should not be used, and the issue has nothing to do with calibration or raw data.

Regards,

Raphaël.

PS: You should have kept posting on the same issue you created initially https://forums.ni.com/t5/LabVIEW/Two-data-types-transferred-via-DMA-FIFO-Hexadecimal-and-FXP/m-p/434...

since this is exactly the same problem. Otherwise, each time, new people will come in trying to help without knowing the history of your previous attempts.

11-30-2023 09:40 AM

@raphschru wrote:

Also, I don't see why the "Integer to Fixed-Point Cast" and vice-versa should not be used

My take on this is that the cast allows for types of different widths, whereas "reinterpret" does not; it keeps the bit width. Since the configuration of this exchange is kind of hidden (hence the confusion in this thread), there are more opportunities to make mistakes using the type cast than using reinterpret. I've been programming FPGA for a while and have stopped using the type cast completely. It's more a matter of personal preference and experience than anything fundamental.

If I'm specifically looking to change the bit width, there are more "visible" ways to do it than via Type cast. When debugging FPGA code, finding hidden configuration errors like that can be a major PITA. So I avoid them and make everything as self-explanatory as possible.

But as with a lot of things in any programming language, YMMV.