Error -200621 memory underflow on cDAQ-9189 while writing digital outputs

Solved! 03-21-2018 10:51 AM

Hey everyone.

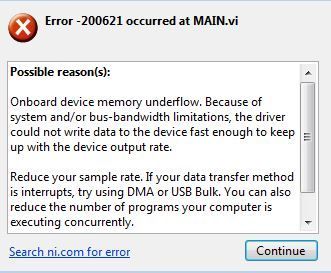

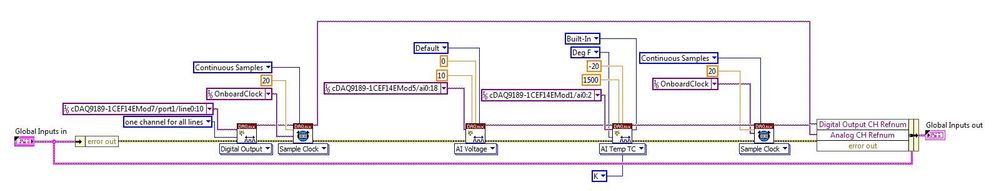

The test control software I am writing requires me to take 21 analog samples (1 sample from 21 unique channels) and write to 11 digital outputs that drive relay coils. I seem to have the inputs working correctly, but when I attempt to write to the output I get error 200621 as shown below:

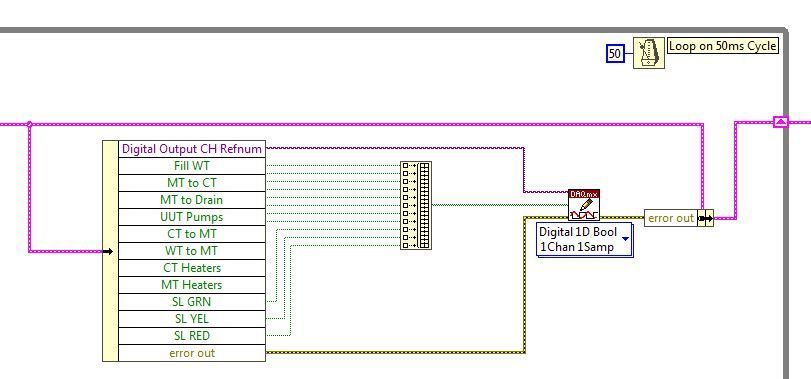

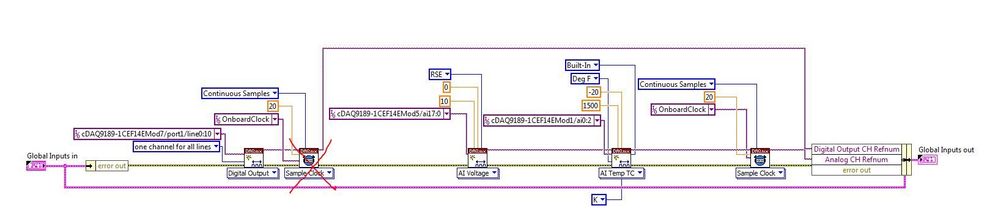

The loop polling the inputs and writing outputs only executes every 50 ms, so I am confused about how I could possibly be overwhelming the bandwidth on my cDAQ. Here is where I am setting up the tasks, and where the data is being written:

Full code is attached. Any ideas what I'm doing wrong, or what could resolve this? Is there a better method to read/write a single sample per channel per run of the VI loop?

Lab Technologist, Alto-Shaam Inc.

03-21-2018 02:49 PM

First off, you aren't overwhelming the bandwidth; you're underwhelming it. The error message refers to underflow, which means you aren't giving the device enough data. There are different ways to fix it, but

What I see: it's generally a mistake to combine fixed sample quantities in DAQ tasks (in your case, 1 sample) with fixed time wait functions in a loop. It tends to overconstrain timing. Let one be fixed and make sure the other has some "breathing room" to float.

For example, assuming only an input task in your loop:

- set a fixed wait timer at 50 msec, but then call DAQmx Read with # of samples set to -1, a special sentinel value that means "all available samples." It'll be possible to receive back 0, 1, or 2+.

OR

- remove all fixed wait timers from the loop. Call DAQmx Read with # samples set to "50 msec worth". (In your case, 1 sample). DAQmx is pretty good about being CPU friendly while waiting for requested samples to arrive.
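To illustrate the first option, here is a minimal sketch in Python rather than LabVIEW. It is a pure simulation and makes no DAQmx calls: the function name `read_all_available` and the wake-up times are made up for illustration. It assumes a hardware clock drops one sample into the buffer every 50 msec while a jittery software loop drains everything available on each wake-up.

```python
def read_all_available(sample_period_ms, wake_times_ms):
    """Simulate a hardware-clocked AI buffer polled by a jittery software loop.

    A sample lands in the buffer at every multiple of sample_period_ms.
    Each wake-up drains the whole buffer (the "all available samples" read)
    and records how many samples came back.
    """
    counts = []
    already_read = 0
    for t in wake_times_ms:
        produced = t // sample_period_ms  # samples clocked in by time t
        counts.append(produced - already_read)
        already_read = produced
    return counts


# Hypothetical wake-up times for a nominal 50 ms loop with a little jitter.
wake_times = [49, 101, 150, 200, 251, 299, 352]
counts = read_all_available(50, wake_times)
print(counts)  # [0, 2, 1, 1, 1, 0, 2]
```

No sample is ever lost or left behind in this scheme; the loop just has to cope with getting 0, 1, or several samples on any given iteration.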

I'd be looking into an option where the DO task uses on-demand software timing (by never calling DAQmx Timing.vi) so that the relay coils change immediately. Buffered DO will have latency between the time you write to the buffer and the time that those values get generated as signals on the board.

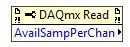

Further tips: in the loop, read your AI data first and *then* do your on-demand DO. Further, to prevent the possibility of the software loop lagging behind the data acquisition task, leading to an AI task buffer overflow, use a DAQmx Read property node to query "available samples" from the AI task before doing the Read. If 0 or 1, do a normal 1-sample Read. If > 1, read them all. You'll need to evaluate what to do with these extra samples in the context of the process you're controlling -- maybe you need to consider the whole history of some channels, maybe you only need to consider the single most recent sample.
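The "available samples" decision can be written down as a tiny rule. This is a sketch in Python, not DAQmx code: `samples_to_request` is a hypothetical helper, and the `available` argument stands in for whatever the property node reports.

```python
def samples_to_request(available):
    """Pick the sample count for the next read from the current backlog."""
    if available < 0:
        raise ValueError("available sample count cannot be negative")
    if available <= 1:
        # Normal 1-sample read; with 0 available the read waits for the next one.
        return 1
    # The loop has fallen behind: drain the whole backlog in one read.
    return available


for backlog in (0, 1, 6):
    print(backlog, "->", samples_to_request(backlog))
```

What to do with the extra samples after a multi-sample read is still a separate, application-specific decision.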

-Kevin P

03-21-2018 03:46 PM - edited 03-21-2018 03:47 PM

Kevin,

Thanks for your reply. So, I'll admit I'm having a little trouble following your whole explanation. I'm fairly new to writing I/O in LabVIEW, so that probably explains my lack of complete comprehension. If I'm following you correctly, you think I should do the following to improve my code and solve the error 200621:

1. Either set the DAQmx Read to a setting other than that shown below, so that it takes 'n' samples instead of 1, OR remove the 50 ms timer from the main VI loop;

2. Remove the DAQmx Timing from the setup of my DO card;

3. Use a property node to determine how many samples are available from the Read VI and act based on that knowledge.

Regarding the first point above, my analog read seems to be working perfectly. I can load those values into my 'global variables' cluster without issue, and then that data properly determines the state of the system. If I use a diagram disable structure on my DAQmx write code, the program functions marvelously, reading the inputs and recording the data I want to save to a TDMS. (TDMS write code was added after I uploaded the program earlier, so you won't see that sub-VI if you go looking for it.) The error only occurs when I am trying to write to the DO cards and energize the relays. Should I still change this to conform to 'best practices' or is it not really necessary?

Regarding the second point, removing the DAQmx timing vi seems to have solved the issue. The error no longer occurs.

Regarding the 3rd point, should I bother to use the property node and create logic if all I ever want is the most recent sample? I don't care if the other data points are lost. I only need 1 point of data for each channel for each run of the VI loop. What is the general method used to discard the excess data so I can't end up in an overflow state?

Again, thanks for your assistance.

- Dylan

Lab Technologist, Alto-Shaam Inc.

03-22-2018 09:32 AM

First off, I'd say you understood me better than you give yourself credit for.

Second, for many of the "fine-tuning" details, the best true answer is going to be the frustrating one, "it depends."

1. The risk you have with the fixed timer *and* the fixed # of samples is that you could get out of sync. For example, let's say you launch Excel while your program runs and Windows starves you of CPU for 300 msec. The next loop iteration happens 300 msec after the previous one and 6 new samples get buffered. Then you read exactly one of them. From here forward, you'll always be reading a single data point that's 5 samples (250 msec) old. Until the next time Windows starves you of CPU and you get even farther behind...

Not a big deal if you're only logging and post-processing, but potentially a very big deal if you're making real-time decisions based on stale data.

2. Yep, as expected, no more DO errors when switching from buffered to on-demand.

3. If what you *really* want from AI is only the single most recent sample, there's an easier and a harder way. I think the easier way will be sufficient for your current needs; the harder way is needed when DAQ must run at high speed or have very consistent sample timing.

- Easier - change the AI task from buffered to on-demand too by removing the call to DAQmx Timing.vi. Also put back your fixed 50 msec wait timer in the loop. Removing the buffering avoids any possibility of "getting behind" with the AI task just like it prevented the underflow error with the DO task. Part of the tradeoff is that the time interval between samples will be more erratic due to software timing under Windows. You'll probably find that much of the time the interval is quite close, maybe 50 +/- 2 msec. But occasionally it will be significantly different.

- Harder - there's a way to configure a buffered task such that you can request only the most recent samples. When you do this, the chunks of data you retrieve may overlap (so that some samples get read 2 or more times) or they may be discontinuous (so that some samples get skipped over entirely). Here's a posting I made some time back that illustrates the method.
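Going back to point 1, the out-of-sync scenario is easy to check with a small simulation. This is a sketch in Python, not DAQmx code: `simulate_fixed_read` is a made-up name, and it assumes a 50 msec hardware sample clock, a first-in-first-out buffer, and loop gaps that are never shorter than one sample period.

```python
from collections import deque

SAMPLE_PERIOD_MS = 50


def simulate_fixed_read(loop_gaps_ms):
    """Fixed wait plus fixed 1-sample read against a hardware-clocked buffer.

    loop_gaps_ms holds the time that elapses before each loop iteration
    (assumed to be at least one sample period, so the buffer is never empty).
    Returns the age in ms of the sample read on each iteration.
    """
    buffer = deque()
    now = 0
    produced = 0
    ages = []
    for gap in loop_gaps_ms:
        now += gap
        # The hardware clock keeps filling the buffer regardless of the loop.
        while (produced + 1) * SAMPLE_PERIOD_MS <= now:
            produced += 1
            buffer.append(produced * SAMPLE_PERIOD_MS)  # store the timestamp
        oldest = buffer.popleft()  # read exactly one sample, oldest first
        ages.append(now - oldest)
    return ages


# Two normal iterations, one 300 ms stall, then back to normal.
ages = simulate_fixed_read([50, 50, 300, 50, 50, 50])
print(ages)  # [0, 0, 250, 250, 250, 250]
```

After the single 300 msec stall, every read returns data that is 250 msec old, and the lag never recovers because each iteration adds one sample and removes one.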

-Kevin P

03-22-2018 11:22 AM

Thanks for the explanations. I think I'll implement the 'easy' way and see how it works.

Lab Technologist, Alto-Shaam Inc.

04-04-2018 10:50 AM

I ended up using the first set of solutions you mentioned. After about 10 hours of run time I would get an overflow error. I ended up choosing to read all of the available data each time, and then use array VIs to keep only the most recent data, disregarding the rest.
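For anyone who wants the same idea outside LabVIEW, here is a rough sketch in Python. It is not my actual code and makes no DAQmx calls: `latest_per_channel` is a hypothetical helper, and it assumes the read returns one list of samples per channel, oldest first.

```python
def latest_per_channel(block, previous):
    """Return the newest sample of each channel from a read-all block.

    block is a list with one list of samples per channel, oldest first.
    If a channel has no new samples, its previous value is kept.
    """
    return [samples[-1] if samples else old
            for samples, old in zip(block, previous)]


previous = [0.0, 0.0, 0.0]
block = [[1.0, 1.1, 1.2], [2.0, 2.1, 2.2], [3.0, 3.1, 3.2]]
latest = latest_per_channel(block, previous)
print(latest)  # [1.2, 2.2, 3.2]
```

Because every read empties the buffer, the backlog cannot grow, which is what removes the overflow.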

Lab Technologist, Alto-Shaam Inc.