FPGA <-> RT comms on multi-core CPU

05-20-2015 09:03 AM

Time to revisit an old question.

I am looking into ways to significantly free up capacity on our RT controller. We currently limit our controller to a single core for the boost in speed that it gives us (3.1 GHz instead of 2.5 GHz), a whopping ~25% increase in speed. We're using a PXIe-8115 controller with a PXIe-7965R FPGA card.

Our timed loop reads from the FPGA, does some processing and writes to the FPGA. Our loop rates are up to 20 kHz (50 us). Nearly half of this is spent on FPGA <-> RT communication over DMA (20 us of the 50 us).

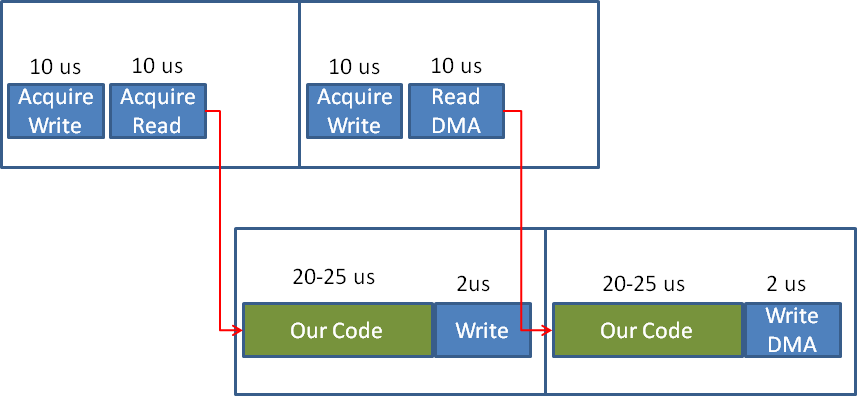

I have been contemplating offloading the FPGA communication to the second core of our CPU. The immediate drawback, of course, is that enabling the second core drops the clock from 3.1 GHz to 2.5 GHz, so our code which currently takes 20 us to execute suddenly takes 25 us. This means that any implementation will have to cut loop execution time by at least 5 us just to break even. But the fact that our load is split nearly 50:50 between FPGA communication and our actual control code makes me wonder whether it might still be the best approach.
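The break-even arithmetic can be checked with a back-of-the-envelope sketch. It assumes that execution time scales inversely with clock frequency, which is only approximately true in practice (memory and bus latency do not scale with the core clock), so treat the result as an estimate.

```python
# Back-of-the-envelope model. Assumption: execution time scales
# inversely with CPU clock frequency (only approximately true).
SINGLE_CORE_GHZ = 3.1   # clock with one core enabled (from the post)
DUAL_CORE_GHZ = 2.5     # clock with both cores active (from the post)


def scaled_time_us(time_us: float, from_ghz: float, to_ghz: float) -> float:
    """Estimate execution time after moving from one clock to another."""
    return time_us * from_ghz / to_ghz


control_now_us = 20.0
control_dual_us = scaled_time_us(control_now_us, SINGLE_CORE_GHZ, DUAL_CORE_GHZ)
penalty_us = control_dual_us - control_now_us

print(f"control code on two cores: {control_dual_us:.1f} us")
print(f"savings needed to break even: {penalty_us:.1f} us")
```

This gives 24.8 us and 4.8 us, which the post rounds to 25 us and 5 us.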

Does anyone have experience with this? I have some ideas, but they get complex quite quickly. I envisage the following:

Loop 1:

Acquire WRITE region, then acquire READ region, and send both to an RT FIFO.

Loop 2:

Read the RT FIFO, process READ data, do control, fill WRITE buffer and destroy the DVRs.

In this way, the two operations to acquire the READ and WRITE regions (which, according to my benchmarks, take 12 us each, so roughly 24 us together) are approximately equal in execution time to our control loop (20-25 us). The write buffer is also passed along with the data from the read operation.
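Why balancing the two loops matters can be shown with a simple pipeline model: when each loop runs on its own core, the sustainable period is set by the slower stage rather than by the sum of both. This sketch ignores the cost of the RT FIFO hand-off and any jitter, and it reuses the 12 us benchmark figures even though those may change at the lower dual-core clock.

```python
# Pipelined two-loop model: each stage runs on its own core, so the
# sustainable period is set by the slower stage, not by the sum.
acquire_read_us = 12.0    # benchmark figure from the post
acquire_write_us = 12.0   # benchmark figure from the post
control_us = 25.0         # 20 us scaled to the lower dual-core clock

comms_stage_us = acquire_read_us + acquire_write_us
sequential_period_us = comms_stage_us + control_us
pipelined_period_us = max(comms_stage_us, control_us)

print(f"sequential: {sequential_period_us:.0f} us, pipelined: {pipelined_period_us:.0f} us")
# prints "sequential: 49 us, pipelined: 25 us"
```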

I am assuming here that the synchronisation overhead between the loops is minimal. I am also assuming that both loops would be timed loops targeted at different CPUs. I'm also assuming that I can hold multiple write regions at once; otherwise both loops are going to be competing for the only write buffer available.
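The data flow of the two-loop scheme can be sketched in Python. `acquire_read_region` and `acquire_write_region` are hypothetical stand-ins for the LabVIEW Acquire Read Region / Acquire Write Region nodes, a bounded `queue.Queue` plays the role of the RT FIFO, and the "control" step is a placeholder. Python threads are neither deterministic nor pinned to cores, so this shows only how ownership of the regions is handed from one loop to the other, not real-time behaviour.

```python
import queue
import threading

REGION_SIZE = 66
ITERATIONS = 5


# Hypothetical stand-ins for the LabVIEW region nodes; here they
# simply return plain lists instead of DVRs into the DMA buffer.
def acquire_read_region(n):
    return [1.0] * n          # pretend data coming from the FPGA


def acquire_write_region(n):
    return [0.0] * n          # empty buffer to be filled for the FPGA


handoff = queue.Queue(maxsize=2)   # plays the role of the RT FIFO
sent = []                          # data that "reached the FPGA"


def comms_loop():
    """Loop 1: acquire the WRITE region first, then the READ region."""
    for _ in range(ITERATIONS):
        write_region = acquire_write_region(REGION_SIZE)
        read_region = acquire_read_region(REGION_SIZE)
        handoff.put((read_region, write_region))
    handoff.put(None)              # sentinel: no more work


def control_loop():
    """Loop 2: process READ data, fill the WRITE region, release both."""
    while (item := handoff.get()) is not None:
        read_region, write_region = item
        for i, x in enumerate(read_region):
            write_region[i] = 2.0 * x      # placeholder "control" step
        # Appending stands in for releasing the write DVR (which lets the
        # driver transfer it); the read DVR would also be released here.
        sent.append(list(write_region))


t1 = threading.Thread(target=comms_loop)
t2 = threading.Thread(target=control_loop)
t1.start()
t2.start()
t1.join()
t2.join()
print(len(sent), sent[0][:3])
```

Because the write region travels together with the read data, the control loop never has to acquire anything itself; the bounded queue also limits how many write regions can be outstanding at once.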

Does anyone have experience with this kind of thing? Am I being completely naive about the possibilities here, or is there some agreed way of achieving this?

Shane

05-20-2015 09:08 AM

My goals are two-fold.

One is that this approach could open up the possibility of higher loop rates, although 25 kHz would likely be the limit.

The other is the ability to pack more code into our control loop. We're already looking at tripling our channel count. While most operations work on only one channel at a time, I have recently added some "intelligence" to our channels to allow for slew rates, limit checking and so on. These require CPU time per channel per loop.

At present, assuming the two-loop approach above is feasible, we could theoretically allow our code to take up to 43 us of CPU time and still achieve similar performance to now (at 20 kHz). Even factoring in the drop in CPU speed (our current 20 us becomes about 25 us), this allows for a whopping 72% more time. I start salivating about what we could do with all that time. For us, 18 us is a huge amount of time.
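The 72% and 18 us figures follow from a one-line calculation. The 43 us budget is taken from the post as given (its derivation isn't shown), and 25 us is the current control code scaled to the 2.5 GHz dual-core clock.

```python
control_dual_us = 25.0   # current control code at the 2.5 GHz dual-core clock
budget_us = 43.0         # CPU time the post estimates is available at 20 kHz

extra_us = budget_us - control_dual_us
extra_pct = 100.0 * extra_us / control_dual_us
print(f"extra time: {extra_us:.0f} us ({extra_pct:.0f}% more)")
# prints "extra time: 18 us (72% more)"
```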

Shane

05-21-2015 06:57 AM

No responses so far, no problem.

I think I might be wrong in one respect. Is it even possible to hold multiple DVRs from "Acquire Write Region" at the same time? I know I can write many times to a normal DMA node even if there's still data waiting to be sent, so I thought this would be similar, but I'm not so sure, because the available help on these functions is very vague on a lot of things.

What exactly do the return values "Number of elements" and "Number of empty elements" mean? Is "Number of elements" the number of elements I just requested from the node (in my case 66), and "Number of empty elements" the total number of elements left in the DMA transfer buffer as I initialised it from the FPGA reference (in my case 1M elements)?

05-21-2015 07:29 AM

Hmm, finally got to do some testing.

On my system I initialised the Write DMA channel to have 2048 elements in the transfer buffer.

I then sequentially tried to acquire write regions (without destroying any) and looked at the returned "elements in region" values.

I could get a total of 32 DVRs. The sizes were all 66 (as requested) except for the last one, which was only 2.

(31*66) + 2 = 2048.
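The observed sizes are consistent with a simple circular-buffer model in which each region must be contiguous, so a request that would wrap past the end of the buffer is truncated at the boundary. This is a hypothetical model written to match the observation, not the RIO driver's actual implementation; `acquire_regions` is an illustrative name.

```python
def acquire_regions(buffer_size: int, request: int) -> list[int]:
    """Sizes of write regions handed out from an empty circular buffer
    when nothing is released in between. A region must be contiguous,
    so a request that would wrap past the end is cut at the boundary."""
    sizes = []
    write_ptr = 0          # next free slot in the host buffer
    free = buffer_size     # nothing has been released back yet
    while free > 0:
        contiguous = buffer_size - write_ptr   # room before the wrap point
        n = min(request, contiguous, free)
        sizes.append(n)
        write_ptr = (write_ptr + n) % buffer_size
        free -= n
    return sizes


sizes = acquire_regions(2048, 66)
print(len(sizes), sizes[-1], sum(sizes))   # 32 2 2048
print(len(acquire_regions(66 * 32, 66)))   # 32 regions, all of size 66
```

Sizing the buffer as a multiple of the region size (for example 66 × 32 = 2112 elements) avoids the short region at the wrap point.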

Clear as mud.

How does the FPGA driver handle the DVRs being destroyed? Does this signal a transfer? Does the order of destroying the DVRs matter? Can I open all 32 and then fill and destroy them in a random order? Will the data arrive in the order I acquired the write regions or in the order I destroy them? All of these things are very unclear to me. I have limited time for testing, but it looks like I'll have to.

05-22-2015 01:22 PM - edited 05-22-2015 01:36 PM

Hey Intaris,

Firstly, have you tested this application with the standard FIFO Read/Write API? In our experience, for smaller data sets, Read/Write comes out ahead of AcquireReadRegion/AcquireWriteRegion (ARR/AWR). At a high level, there is additional locking and other overhead in the driver when using ARR/AWR which exceeds the cost of the data copy in FIFO Read/Write. For 66 elements, I'd expect FIFO Read/Write to perform better.

To answer your questions about how ARR/AWR work: the specifics below are mostly Host-to-FPGA centric, but the same logic applies in the FPGA-to-Host direction.

- "Number of elements" is the size of the returned EDVR. This is necessary because it may not be possible to return a region of the size requested. As in your example, when 66 elements are requested but there are only 2 elements between the current pointer and the end of the buffer, only 2 elements can be returned, because an EDVR must point to a contiguous buffer. It's necessary to acquire another region of size 64 to get the rest of the data set from the start of the host buffer (assuming it's available, of course). In general, it's recommended to size the DMA buffer and the region size so that the buffer size is an integer multiple of the region size to avoid this issue.

- Write data can only be transferred after the region has been released.

- Regions can be released out of order, but all of the preceding regions must be released before a given region can be transferred. This is because the hardware is configured to read sequentially from the host buffer. The RIO Driver takes care of tracking which regions have been released and granting the hardware permission to transfer data when it's safe to do so.
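The ordering rule above can be modelled with a small tracker: regions may be released in any order, but because the hardware reads the host buffer sequentially, a region only becomes transferable once every earlier region has also been released. `ReleaseTracker` is a hypothetical illustration of that rule, not part of the RIO Driver.

```python
class ReleaseTracker:
    """Hypothetical model of the rule described above: regions may be
    released in any order, but data is transferred strictly in
    acquisition order."""

    def __init__(self):
        self.released = set()       # released but not yet transferred
        self.next_to_transfer = 0   # oldest region not yet transferred

    def release(self, region_index):
        """Mark a region released; return the regions now transferable."""
        self.released.add(region_index)
        ready = []
        while self.next_to_transfer in self.released:
            ready.append(self.next_to_transfer)
            self.released.remove(self.next_to_transfer)
            self.next_to_transfer += 1
        return ready


tracker = ReleaseTracker()
print(tracker.release(2))   # [] (regions 0 and 1 still held)
print(tracker.release(0))   # [0]
print(tracker.release(1))   # [1, 2]
```

In other words, data arrives in the order the write regions were acquired, not the order in which they are destroyed.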

Does that answer your questions? Let me know if you need clarification on any of that or if you have any further questions.

Thanks,

Sebastian

05-22-2015 01:29 PM

I know that the DVR version of the DMA reads takes longer: 12 us versus 9 us, to be exact. But the advantage of the DVR version is clear when looking at moving the actual data transfer to and from the FPGA into a parallel loop.

The sequential read order of the write buffers is kind of what I was expecting, but your answer clears up any doubt. Thanks.

05-22-2015 01:39 PM

@Intaris wrote:

I know that the DVR version of the DMA reads takes longer: 12 us versus 9 us, to be exact. But the advantage of the DVR version is clear when looking at moving the actual data transfer to and from the FPGA into a parallel loop.

Are you referring to the additional copies that would be required to move the data to the processing loop in the non-EDVR case?

05-22-2015 02:08 PM

Not only that, although that is part of it.

The ability to acquire the regions in advance allows me to acquire the write region before the read region, even though they are actually used the other way round. This difference in scheduling allows for more efficient parallelisation.