
Flatten To JSON function changes the number of DBL digits incorrectly [Bug?]

06-10-2023 08:22 AM


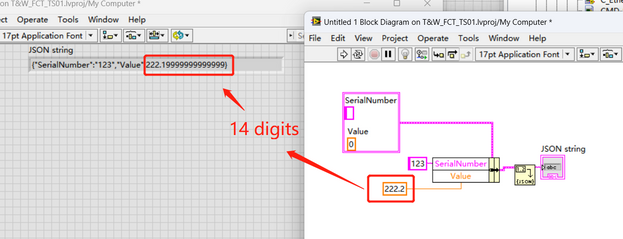

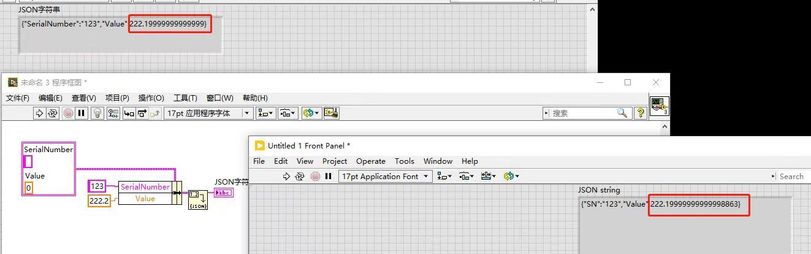

I found that the Flatten To JSON function changes the number of DBL digits incorrectly. Is it a bug? I have already tested it on several LabVIEW versions.

Tested with LabVIEW 2013 32-bit

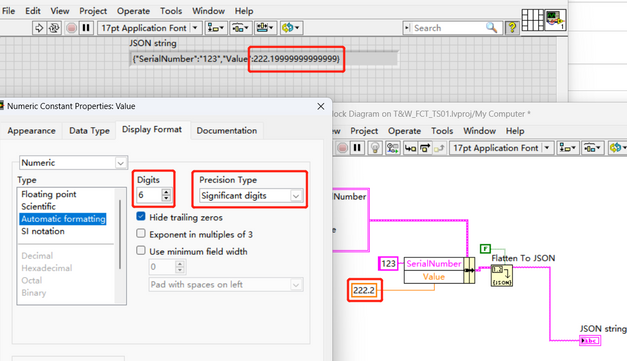

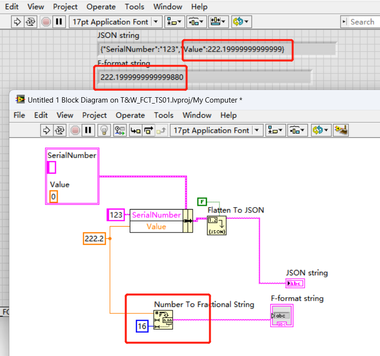

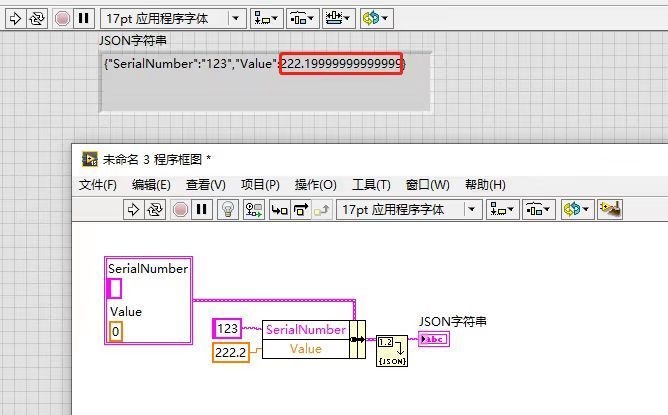

The input has only one decimal digit, but the output has 14 digits. Even if I modify the Display Format and change the default digit precision, the output stays the same.

Tested with LabVIEW 2013 32-bit

Tested with LabVIEW 2015 32-bit

Tested with LabVIEW 2020 32-bit

Tested with LabVIEW 2020 64-bit

So LabVIEW 2020 and LabVIEW 2013/2015 produce output with different digit precision.

------------------

LV7.1/8.2/8.2.1/8.5/8.6/9.0/2010/2011/2013/2015/2016/2020; test system development; FPGA; PCB layout; circuit design...

Please mark the solution as accepted if your problem is solved, and donate kudos.

Home--colinzhang.net: My Blog

ONTAP.LTD : PCBA test solution provider!


06-10-2023 10:26 AM - edited 06-10-2023 10:37 AM


@colinzhang wrote:

Actually, the input is only one digit, but the output is 14 digits.

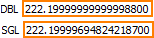

No, your input is not only "one digit" if you care to display all digits of the diagram constant! DBL values are quantized to a mantissa with a finite number of bits, and many simple decimal fractions (e.g. 0.1, 0.2) don't have an exact binary representation.
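To make the point about inexact binary representation concrete outside LabVIEW, here is a small Python sketch (an illustration, not LabVIEW code). It assumes only that Python floats are IEEE 754 binary64, the same format as a LabVIEW DBL; `exact_value` is a hypothetical helper name.

```python
from decimal import Decimal

def exact_value(literal: str) -> Decimal:
    """Return the exact value of the binary64 (DBL) nearest to a decimal literal."""
    # float() rounds the literal to the nearest binary64 value;
    # Decimal(float) then converts that binary value exactly, with no further rounding.
    return Decimal(float(literal))

for literal in ("0.5", "0.1", "0.2"):
    print(literal, "->", exact_value(literal))
```

0.5 is a power of two and comes back unchanged, while 0.1 and 0.2 expand into long tails of digits.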

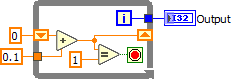

Trick question: What is the value of the Output indicator after the VI stops?

@colinzhang wrote:

So LabVIEW 2020 and LabVIEW 2013/2015 produce output with different digit precision.

I guess the underlying code has changed slightly, but the difference is way beyond the guaranteed resolution, so it is probably irrelevant. 😄

If you want an exact decimal representation, store it as a string...
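A minimal Python sketch of the "store it as a string" idea, assuming a hypothetical record with a single `value` field. The digits survive the JSON round trip because they are text, and converting to a decimal type happens only on the reading side. Python's `json.loads` also accepts `parse_float=Decimal` to read JSON numbers as decimals instead of floats.

```python
import json
from decimal import Decimal

# Hypothetical record: the value is stored as a JSON string, not a JSON number.
doc = json.dumps({"value": "222.2"})
restored = Decimal(json.loads(doc)["value"])

# Alternative on the reading side: parse JSON numbers directly as Decimal.
as_number = json.loads('{"value": 222.2}', parse_float=Decimal)["value"]

print(doc, restored, as_number)
```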

06-10-2023 11:07 AM - edited 06-10-2023 11:09 AM


@altenbach wrote:


If you want an exact decimal representation, store it as a string...

Unless it is "for display purposes only", I skip this step. The moment you need it as a real number again, you end up with the same problem, and potentially with less precision than when the number went in. I guess for an exact representation you would store it as bytes.
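Storing the raw bytes can be sketched in Python with the standard `struct` module. This assumes big-endian IEEE 754 binary64 (the byte order is a choice made for this example), and `dbl_to_hex`/`hex_to_dbl` are hypothetical helper names. Hex-encoding the 8 bytes keeps the value JSON-friendly while preserving it bit for bit.

```python
import struct

def dbl_to_hex(x: float) -> str:
    # Pack as big-endian IEEE 754 binary64; these 8 bytes ARE the exact value.
    return struct.pack(">d", x).hex()

def hex_to_dbl(h: str) -> float:
    # Reverse the packing; no decimal conversion is involved, so nothing is rounded.
    return struct.unpack(">d", bytes.fromhex(h))[0]

h = dbl_to_hex(222.2)
print(h, hex_to_dbl(h) == 222.2)
```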

(Mid-Level minion.)

My support system ensures that I don't look totally incompetent.

Proud to say that I've progressed beyond knowing just enough to be dangerous. I now know enough to know that I have no clue about anything at all.

Humble author of the CLAD Nugget.

06-10-2023 12:14 PM - edited 06-10-2023 12:17 PM


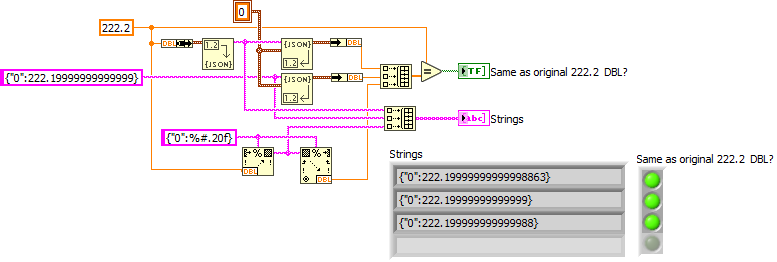

Well, the more interesting question would be whether the fuzzy least-significant decimal digits even matter. If you read the JSON string back into the original datatype and do an equality comparison with the original value, does it match?
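The round-trip question can be tested directly. Here is a Python sketch, assuming `%.Ng` formatting as a stand-in for whatever the JSON functions do internally (that part is an assumption). The exact `==` is deliberate here: the question is whether the bits come back identical, not whether two measurements agree.

```python
def round_trips(x: float, significant_digits: int) -> bool:
    # Format with N significant digits, parse back, and check for a bit-identical DBL.
    text = f"{x:.{significant_digits}g}"
    return float(text) == x

for digits in (4, 15, 17):
    print(digits, round_trips(222.2, digits), round_trips(1 / 3, digits))
```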

06-10-2023 12:59 PM - edited 06-10-2023 01:06 PM


@altenbach wrote:

Well, the more interesting question would be whether the fuzzy least-significant decimal digits even matter. If you read the JSON string back into the original datatype and do an equality comparison with the original value, does it match?

Probably, but equality comparisons on floating-point numbers without some tolerance range are ALWAYS wrong, as you have said so many times in these forums, Christian. 😀
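The usual pattern for comparing computed values, shown as a Python sketch: compare within a tolerance instead of with `==`. The relative tolerance of 1e-9 is just Python's `math.isclose` default; the right tolerance is application-specific.

```python
import math

a = 0.1 + 0.2
b = 0.3

print(a == b)                             # exact comparison: False
print(math.isclose(a, b, rel_tol=1e-9))   # comparison within a tolerance: True
```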

A double has about 16 digits of significant data, which is not just incidentally what the JSON conversion uses as its default width. The fact that you showed only one decimal digit of the number in the front panel control has no bearing on the actual binary value on the wire. It only specifies how to display that number in the front panel control. The JSON function does its own interpretation and, without an explicit width specification, it simply does the safest thing: format as many digits into the string as the number can possibly have in significant data.
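The difference between display format and stored value, sketched in Python (a stand-in for a front panel display format, not LabVIEW itself). The "about 16 digits" follows from the 53-bit significand of binary64: 53 × log10(2) is just under 16.

```python
import math
import sys

value = 222.2

# Display formatting changes only the text you see, not the bits of the value.
print(f"{value:.1f}")    # 222.2 (one digit of display precision)
print(f"{value:.17g}")   # 222.19999999999999 (17 significant digits)

# binary64 has a 53-bit significand, i.e. just under 16 decimal digits.
print(sys.float_info.mant_dig, round(sys.float_info.mant_dig * math.log10(2), 2))
```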

06-10-2023 02:55 PM


@rolfk wrote:


Probably, but equality comparisons on floating-point numbers without some tolerance range are ALWAYS wrong, as you have said so many times in these forums, Christian. 😀

Of course! I was just thinking of the possibility that the change in the number of digits in the JSON string (as reported between LV versions, not verified independently!) does not actually change anything at the bit level when comparing the original value with the one processed through JSON flatten/unflatten. If the decimal string contains a sufficiently high number of decimal digits, it should convert back to a binary-identical value in most cases, so what is sufficient?
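One way to explore "what is sufficient?" is to search for the smallest number of significant digits that still round-trips. This is a Python sketch using `%.Ng` formatting (assumed, not LabVIEW's formatter), and `min_round_trip_digits` is a hypothetical helper. For finite binary64 values the answer never exceeds 17, but some values need all 17.

```python
def min_round_trip_digits(x: float) -> int:
    # Try 1..17 significant digits; finite binary64 values never need more than 17.
    for digits in range(1, 18):
        if float(f"{x:.{digits}g}") == x:
            return digits
    raise ValueError("not a finite double")

for x in (0.1, 222.2, 1 / 3, 0.1 + 0.2):
    print(repr(x), min_round_trip_digits(x))
```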

06-10-2023 03:27 PM


First of all: Kudos for doing the tests and showing your work!

@colinzhang wrote:

So LabVIEW 2020 and LabVIEW 2013/2015 produce output with different digit precision.

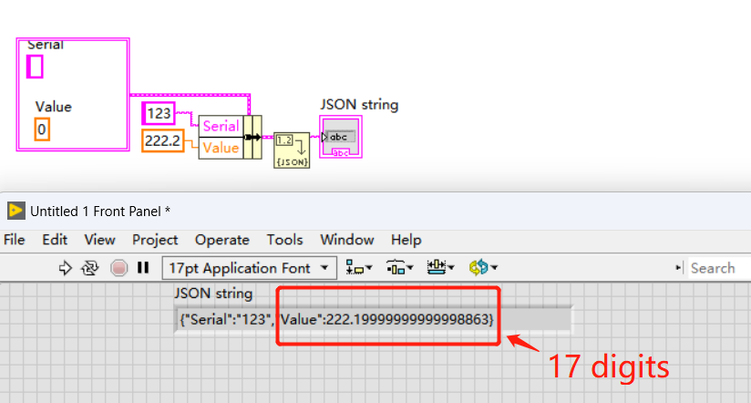

I guess they are perfectly allowed to do so. This made me look up the JSON standard document, which should be RFC 8259. The section on numbers suggests that you use IEEE 754 double precision and give enough digits to let the receiving party know that you did. 17 digits (which both of your tests give, if you include the integer part) seems perfectly fine.

Also note: floating-point numbers map the infinite real number space to a finite number of values with a binary representation. This mapping is standardized in IEEE 754. So the number 222.2 maps exactly to the number 222.19999999999998863131622783839702606201171875 in double precision. Since the next smaller number is 222.199999999999960... (the relative distance between two adjacent double-precision numbers is roughly 10^-16), 17 digits are enough to uniquely distinguish these two.
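These numbers can be reproduced in Python (3.9 or later for `math.nextafter`), assuming only that Python floats are IEEE 754 binary64 like a LabVIEW DBL. `Decimal(x)` prints the exact stored value, `math.nextafter` gives the next smaller double, and `math.ulp` gives the spacing between them.

```python
import math
from decimal import Decimal

x = 222.2
below = math.nextafter(x, 0.0)  # next smaller double

print(Decimal(x))          # exact binary value of the literal 222.2
print(f"{x:.17g}")         # 17 significant digits of x
print(f"{below:.17g}")     # 17 significant digits of its lower neighbour
print(math.ulp(x) / x)     # relative spacing, about 1.3e-16
```

At 17 significant digits the two neighbours print differently, which is why 17 digits are enough to tell them apart.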

06-10-2023 04:24 PM - edited 06-10-2023 04:34 PM


@altenbach wrote:

Of course! I was just thinking of the possibility that the change in the number of digits in the JSON string (as reported between LV versions, not verified independently!) does not actually change anything at the bit level when comparing the original value with the one processed through JSON flatten/unflatten.

For this particular input (222.2), three different decimal string representations return exactly the same DBL (for other values, YMMV, of course! :D)
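The same effect in a Python sketch. The three strings below are illustrative choices (shortest form, 17 and 18 significant digits), not necessarily the ones in the screenshot. Comparing `float.hex()` output checks bit-level identity rather than a printed approximation.

```python
# Illustrative decimal strings for the same input value.
candidates = ["222.2", "222.19999999999999", "222.199999999999989"]

# float.hex() exposes the exact bit pattern, so a set of size 1 means all are identical.
bit_patterns = {float(s).hex() for s in candidates}
print(bit_patterns)
```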

Since Format Into String gives yet another decimal representation, I suspect that the JSON tools use some canned foreign library over which LabVIEW has no direct control. That code base must have changed.

(Curiously, if we change the input to EXT, the JSON string is exactly "222.2000". 😄)

06-10-2023 07:18 PM


Thanks for your analysis and discussion.

My first impression was also that this was a DBL accuracy issue, but after trying different versions and discovering the differences, I was even more confused.

Yes, I also know how to deal with it (pre-process the DBL to a fixed number of digits, or convert it to an integer or a string); I just found it interesting.
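The two workarounds mentioned (fixed digits as a string, or a scaled integer) can be sketched in Python. `to_fixed_string` and `to_scaled_int` are hypothetical helper names, and the reader of the integer form has to know the scale factor (tenths here).

```python
import json

def to_fixed_string(x: float, decimals: int) -> str:
    # Round to a fixed number of decimals and keep the result as text.
    return f"{x:.{decimals}f}"

def to_scaled_int(x: float, decimals: int) -> int:
    # Store an integer count of 10**-decimals units (e.g. tenths); round() returns an int.
    return round(x * 10 ** decimals)

doc = json.dumps({"as_string": to_fixed_string(222.2, 1), "as_tenths": to_scaled_int(222.2, 1)})
print(doc)
```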

While it is safer to keep all the precision, it would be more practical to have an option to set the number of digits, similar to the Number To Fractional String function.
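What such an option might look like, as a Python sketch only: `flatten_to_json_fixed` and its `decimals` parameter are hypothetical and do not correspond to any LabVIEW or JSONtext API. It writes every float with a fixed number of decimals and delegates all other types to Python's `json.dumps`.

```python
import json
import math
from typing import Any

def flatten_to_json_fixed(value: Any, decimals: int) -> str:
    """Hypothetical serializer: floats are written with a fixed number of decimals."""
    if isinstance(value, float):
        if not math.isfinite(value):
            raise ValueError("JSON has no representation for NaN or Inf")
        return f"{value:.{decimals}f}"
    if isinstance(value, dict):
        items = (f"{json.dumps(str(k))}: {flatten_to_json_fixed(v, decimals)}"
                 for k, v in value.items())
        return "{" + ", ".join(items) + "}"
    if isinstance(value, (list, tuple)):
        return "[" + ", ".join(flatten_to_json_fixed(v, decimals) for v in value) + "]"
    return json.dumps(value)  # str, int, bool, None

print(flatten_to_json_fixed({"x": 222.2, "n": [1, 0.5]}, 3))
```

Note the trade-off raised earlier in the thread: with too few decimals the value no longer round-trips to the same DBL.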

Interestingly, when I compare the Flatten To JSON function with the Number To Fractional String function, I still get a different result, although of course they are very close.


06-11-2023 02:12 AM - edited 06-11-2023 02:13 AM


OP, you might want to look into JSONtext. JSONtext tries to preserve decimal numbers rather than floating-point representations, and so it will output 222.2.
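For comparison only (this is Python's behavior, not a description of JSONtext's internals): Python's `json` module writes floats using `repr()`, which emits the shortest decimal string that converts back to the same double. That yields "222.2" while still round-tripping exactly, and it falls back to 17 digits only when needed.

```python
import json

print(json.dumps(222.2))       # shortest string that round-trips
print(json.dumps(0.1 + 0.2))   # this value needs all 17 significant digits
```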

The built-in JSON functions make the alternative choice of trying to preserve floating-point values exactly (though they have a known bug that treats EXT as if it were SGL).
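To see what "treating EXT as SGL" would cost, here is a Python sketch that emulates single precision by round-tripping through IEEE 754 binary32 with `struct` (`as_sgl` is a hypothetical helper). The SGL value of 222.2 prints as 222.2000 at four decimals, which is consistent with the "222.2000" seen earlier for EXT input. That it is the actual mechanism is an inference, not something verified here.

```python
import struct

def as_sgl(x: float) -> float:
    # Round-trip through IEEE 754 binary32 (the format of a LabVIEW SGL).
    return struct.unpack(">f", struct.pack(">f", x))[0]

x32 = as_sgl(222.2)
print(f"{x32:.9g}")   # enough digits to expose the single-precision error
print(f"{x32:.4f}")   # four decimals hide it again
print(x32 == 222.2)   # the SGL value is not the DBL value
```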