How to convert a floating point number to an array of ASCII bytes without converting to string

10-30-2013 03:49 PM - edited 10-30-2013 03:59 PM

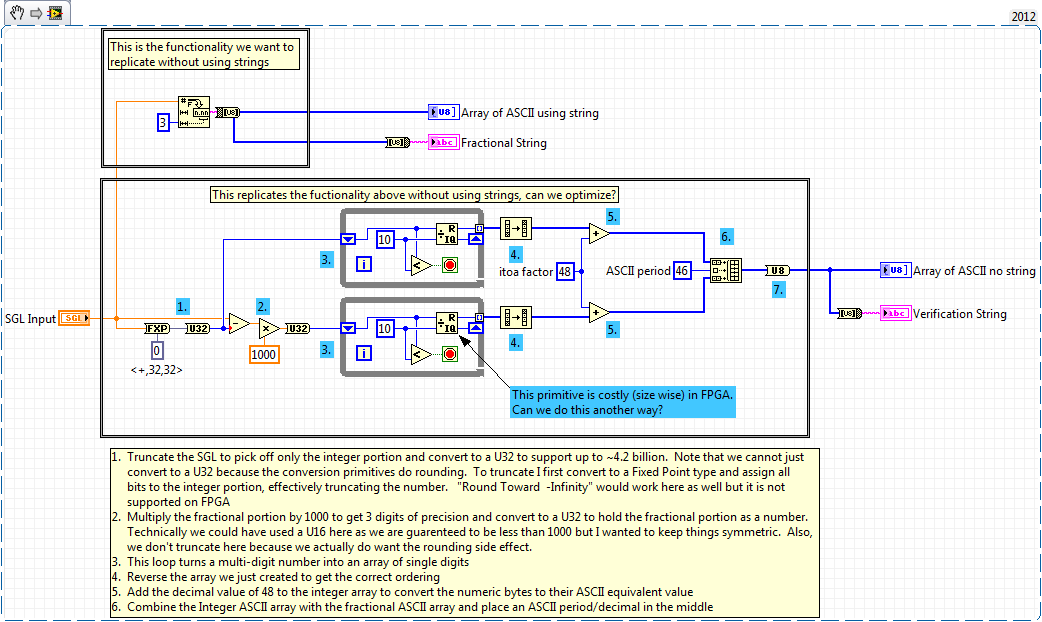

I'm writing a LabVIEW FPGA application that talks to a 3rd party cRIO module which can output to a 2x16 ASCII LCD display. Using this module, I would like to be able to dynamically output floating point numbers that I am acquiring. To output a message to the display, I need to send it an array of U8 ASCII bytes. This task is trivial in LabVIEW for Windows, but LabVIEW FPGA does not support the string data type as everything in FPGA must be a fixed length.

I've come up with a working solution that I wanted to share with the community but also I would like to see if people can offer suggestions to further optimize. In particular, my solution makes use of the "Quotient & Remainder" function which is a VERY costly primitive (size wise) to synthesize in FPGA. Can we do better?

P.S.

The current solution supports numbers up to 2^32 with three digits of precision. Even though this is a small portion of what a SGL floating point type can support, it is more than adequate for this application.
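For readers who cannot view the attached VI, the Quotient & Remainder approach can be approximated in text form. This is only a rough Python sketch, not the posted LabVIEW code: the function name `float_to_ascii_qr`, the 10 integer digits, the rounding step, and the leading zeros are all assumptions chosen for illustration.

```python
INT_DIGITS = 10   # assumption: enough digits for values below 2**32 (4294967296)
FRAC_DIGITS = 3   # three digits of precision, as stated in the post

def float_to_ascii_qr(value):
    """Return a fixed-length list of ASCII byte values for a non-negative number."""
    scale = 10 ** FRAC_DIGITS
    scaled = int(round(value * scale))           # work on an integer from here on
    out = [0] * (INT_DIGITS + 1 + FRAC_DIGITS)   # fixed-size output buffer
    pos = len(out) - 1
    for i in range(INT_DIGITS + FRAC_DIGITS):    # constant iteration count
        if i == FRAC_DIGITS:
            out[pos] = ord(".")                  # decimal point after the fractional digits
            pos -= 1
        scaled, digit = divmod(scaled, 10)       # Quotient & Remainder by 10
        out[pos] = ord("0") + digit
        pos -= 1
    return out
```

Each loop iteration peels off the least significant decimal digit, so the buffer is filled from right to left. Values too large for the buffer are silently truncated in this sketch.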

10-31-2013 02:31 AM

One thing you could try is replacing the Q&R code with repeated subtraction: keep subtracting 10^N until what remains is smaller than 10^N (that is, until one more subtraction would take it below 0). The number of subtractions tells you how many units of 10^N are in the number, which is your digit. Once you have the digit, decrement N and do the next digit. That essentially converts the division into subtraction operations.

A couple of obvious problems are that you might end up with a similar number of gates (I have no idea what algorithm Q&R uses) and that you need to start from a specific N, so there is a limit on how large the number can be (you could use log to determine N dynamically, but I don't know how many gates that would take).
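The subtraction idea described above might look like the following. It is a minimal Python sketch rather than FPGA code; the function name `digits_by_subtraction` and the `num_digits` parameter (which fixes the starting N) are assumptions for illustration.

```python
def digits_by_subtraction(n, num_digits):
    """Decimal digits of a non-negative integer, most significant first, without division."""
    # Powers of ten are constants here; in hardware they could be a small lookup table.
    powers = [10 ** k for k in range(num_digits - 1, -1, -1)]
    digits = []
    for p in powers:
        count = 0
        while n >= p:        # stop before the remainder would go negative
            n -= p
            count += 1
        digits.append(ord("0") + count)   # store the digit as an ASCII byte
    return digits
```

As long as `n` is below `10 ** num_digits`, the inner loop runs at most 9 times per digit. A larger `n` would make the first count exceed 9, which is the size limit mentioned above.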

Alternatively, you could look for other algorithms for converting a binary number to a decimal number, but I don't know if any exist which would be more efficient.

___________________

Try to take over the world!

10-31-2013 10:56 AM

If FPGA space is at a premium, how about letting the host processor do the conversion? If this is for user display, then you don't need the fast update rate that the FPGA can deliver. Instead, write the floating-point value to a front panel indicator. Add a separate loop to your FPGA that does nothing but monitor a boolean on the front panel, and whenever it's true, read a U8 value from the front panel and write it to the display and then clear the boolean. The conversion is easy on the host processor and you gain the ability to write other messages as well without recompiling the FPGA.
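The handshake described here can be simulated in a few lines. This is a hypothetical Python model only: `FrontPanel`, `data_ready`, `char`, and both functions are invented names, and on real hardware the FPGA loop would run in parallel instead of being called from the host code.

```python
class FrontPanel:
    """Stand-in for the FPGA front-panel controls (names are hypothetical)."""
    def __init__(self):
        self.data_ready = False   # boolean the FPGA loop monitors
        self.char = 0             # U8 value to send to the display

def fpga_display_loop_step(panel, display):
    """One iteration of the FPGA-side loop: forward a byte if one is pending."""
    if panel.data_ready:
        display.append(panel.char)
        panel.data_ready = False  # signal the host that the byte was consumed

def host_write_message(panel, display, text):
    """Host side: convert to ASCII, then pass one byte at a time."""
    for byte in text.encode("ascii"):
        panel.char = byte
        panel.data_ready = True
        while panel.data_ready:                     # wait for the flag to clear
            fpga_display_loop_step(panel, display)  # simulated FPGA loop
```

For example, the host could format the value itself with `host_write_message(panel, display, f"{12.5:.3f}")`, leaving no conversion logic on the FPGA.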

10-31-2013 11:26 AM

I second Nathan on this one. Just have the RT do the conversion and write to a control on the FPGA which then is output. Just curious, I'm probably missing something, but how does the FPGA know the fixed size of the output array in those while loops, allowing it to compile?

10-31-2013 03:55 PM

Here's another algorithm you could try after splitting the number at the decimal point (it's based off of this):

It's much (about 10x) slower than the quotient/remainder algorithm on my PC though (no FPGA to try it on). A good thought exercise in any case...
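A later reply in this thread identifies this algorithm as double dabble. Since the attached diagram is not reproduced here, the following is a generic Python sketch of double dabble, not the poster's VI; the function name and the `num_bits` and `num_digits` parameters are assumptions.

```python
def double_dabble(n, num_bits, num_digits):
    """Binary to decimal digits using only shifts, compares, and add-3 (no division)."""
    bcd = [0] * num_digits   # one 4-bit value per decimal digit, most significant first
    for bit in range(num_bits - 1, -1, -1):
        # Add 3 to every BCD digit that is 5 or more before shifting.
        bcd = [d + 3 if d >= 5 else d for d in bcd]
        # Shift the whole BCD register left by one, feeding in the next input bit.
        carry = (n >> bit) & 1
        for i in range(num_digits - 1, -1, -1):
            shifted = (bcd[i] << 1) | carry
            carry = shifted >> 4
            bcd[i] = shifted & 0xF
    return [ord("0") + d for d in bcd]   # convert each digit to its ASCII byte
```

The loop count is fixed by `num_bits`, which suits fixed-size hardware, but it needs one pass per input bit, which may help explain why it is slower on a PC.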

Best Regards,

11-01-2013 02:33 PM - edited 11-01-2013 02:36 PM

Hi all, thank you for the great responses.

First to answer some questions:

1) Why not just have the host do the calculations? Good question, the reason I can't do this for this application is that this FPGA application is actually an emergency shutdown monitor that needs to perform completely headless.

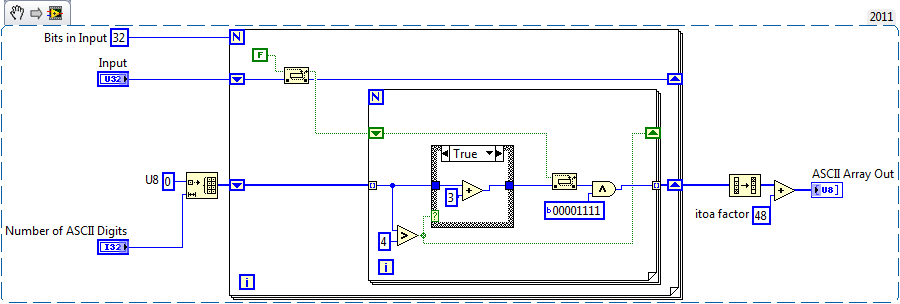

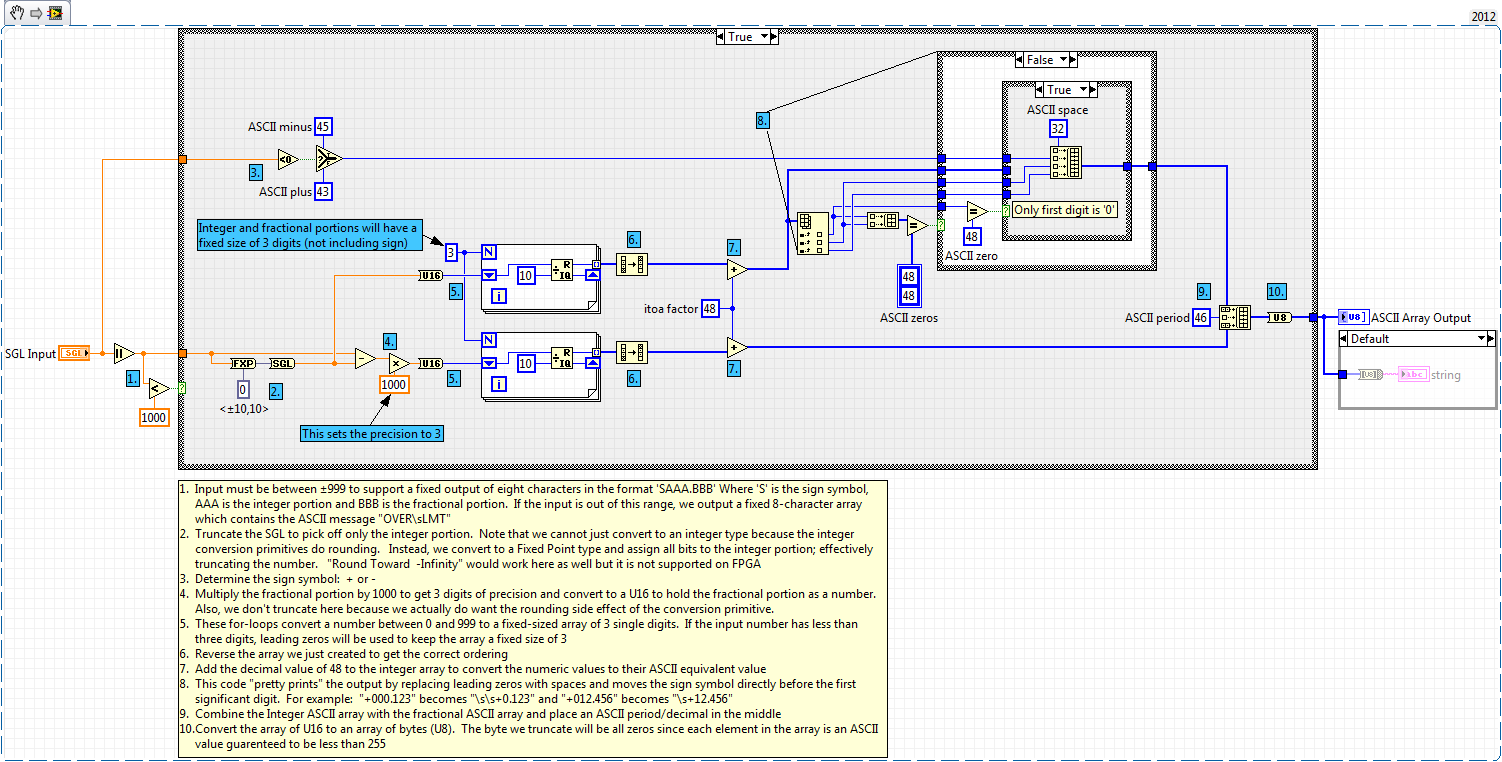

2) How can my originally posted code compile on FPGA when a while loop is indexing the array? Oops, it can't, good catch! I was actually testing this code on LabVIEW for Windows because the dev environment is a lot more friendly, but I should have done one test compile before I posted it. Sorry about that. The code has been modified and is now tested to work on FPGA. The caveat is that I now have to preset how many digits the output array can hold. For my application I'm allowing 3 digits for the integer part and 3 for the fractional part.

3) Is the double-dabble algorithm more efficient space wise than the Q&R equivalent? First, thank you for posting this, I was not aware of this algorithm. Now to answer the question: it is, but only just barely. I compiled two test VIs, one with just the double-dabble and the other with just the Q&R, and the former was only 2% smaller in slice utilization. Because the code is a lot more complicated and the utilization savings are pretty minimal, I've decided to stick with the Q&R primitive.

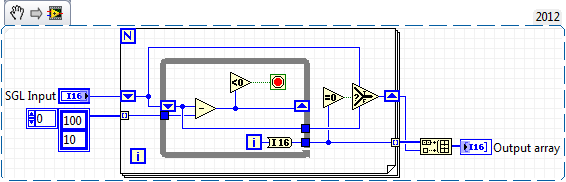

I also implemented what I'll call the "Loop and subtract" algorithm that was suggested and, as it turns out, this one is almost exactly equivalent to the Q&R method in terms of space utilization. That was quite surprising to me, and it suggests that the 2012 FPGA Module does a great job of optimizing the Q&R primitive. It was not always this way. I've posted the "Loop and subtract" code below as well.

08-06-2024 05:28 PM - edited 08-06-2024 05:32 PM

@SeanDonner wrote:

I'm writing a LabVIEW FPGA application that talks to a 3rd party cRIO module which can output to a 2x16 ASCII LCD display. Using this module, I would like to be able to dynamically output floating point numbers that I am acquiring. To output a message to the display, I need to send it an array of U8 ASCII bytes. This task is trivial in LabVIEW for Windows, but LabVIEW FPGA does not support the string data type as everything in FPGA must be a fixed length.

I've come up with a working solution that I wanted to share with the community but also I would like to see if people can offer suggestions to further optimize. In particular, my solution makes use of the "Quotient & Remainder" function which is a VERY costly primitive (size wise) to synthesize in FPGA. Can we do better?

P.S.

The current solution supports numbers up to 2^32 with three digits of precision. Even though this is a small portion of what a SGL floating point type can support, it is more than adequate for this application.

Why are you using floating point numbers at all? You should be using Fixed Point! Seriously, that is why fixed point numbers were invented (by people smarter than all of the others who are trying to help you get around using them)!
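One way fixed point could simplify the conversion is sketched below in Python. It is not taken from this thread: the 16 fractional bits, the function name `fixed_point_to_ascii`, and the 3.3 digit layout are assumptions. The point is that fractional digits fall out of a multiply by 10, and only the integer part still needs division (or one of the alternatives discussed earlier).

```python
FRAC_BITS = 16  # assumption: unsigned fixed point with 16 fractional bits

def fixed_point_to_ascii(raw, int_digits=3, frac_digits=3):
    """raw holds value * 2**FRAC_BITS as an unsigned integer."""
    mask = (1 << FRAC_BITS) - 1
    integer, frac = raw >> FRAC_BITS, raw & mask    # the split is just bit slicing
    out = [0] * int_digits
    for i in range(int_digits - 1, -1, -1):         # integer digits still use divide by 10
        integer, digit = divmod(integer, 10)
        out[i] = ord("0") + digit
    out.append(ord("."))
    for _ in range(frac_digits):                    # fractional digits: multiply by 10
        frac *= 10
        out.append(ord("0") + (frac >> FRAC_BITS))  # bits above the binary point are the digit
        frac &= mask
    return out
```

Multiplying by 10 can be built from two shifts and an add, so the fractional half needs no divider. This sketch truncates the last digit instead of rounding it.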

"Should be" isn't "Is" -Jay