IMAQ: Inconsistent values when saving as PNG. Auto-scaling or bad VI?

05-08-2015 07:20 AM

Hello everyone.

I have an issue that is driving me crazy, as I can't figure out what is going on.

I am creating a VI to take pictures with a camera. Among other things, it records a picture averaged from many frames, shows it on the screen, and saves it.

The camera is a Basler Scout 1400gm. The subVI is attached.

The camera takes pictures at 12-bit depth, stored as U16. Thus, in the direct camera view (first display), pixel values are between 0 and 4095. The averaging subVI divides each picture, sums them as SGL, then casts the result to a 16-bit image. The result is sent to a second display and can be saved, i.e. in the range 0 to 2^16 - 1 (65535). So far, so good (or please correct me).
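Both ranges follow from the bit depth: an n-bit unsigned pixel spans 0 to 2^n - 1. The short Python sketch below is illustrative arithmetic only (not LabVIEW or IMAQ code; `full_scale` and `normalized` are hypothetical helper names). It shows that a "normalized" intensity depends on which full scale you divide by, so the same raw value looks very different against a 12-bit and a 16-bit range.

```python
def full_scale(bits):
    """Largest value an unsigned integer pixel of the given bit depth can hold."""
    return 2 ** bits - 1


def normalized(value, bits):
    """Pixel value expressed as a fraction of the full scale for that bit depth."""
    return value / full_scale(bits)


if __name__ == "__main__":
    print(full_scale(12), full_scale(16))  # 4095 65535
    # The same raw value is 60% of a 12-bit scale but under 4% of a 16-bit one.
    print(round(normalized(2457, 12), 4), round(normalized(2457, 16), 4))
```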

Now here comes the mystery. The second display (i.e. the output of the subVI) shows a 12-bit picture, with values corresponding to the first display (which does not seem logical). But when I try to save it, sometimes the scale is completely messed up.

Say I have a 2D Gaussian curve with a normalized maximum of 0.6 in the first display (12-bit value). When I take a picture, the second display shows the same curve with the same maximum (12-bit value), but the resulting PNG shows the same curve with a normalized maximum of 0.8-0.9. So it seems that LabVIEW takes the liberty of adapting the scale when saving. This seems to happen mainly with pictures whose normalized maximum is below 0.5-0.6.

Is this really due to auto-scaling, or is my VI just bad? Can I change this behavior? Since my goal is to compare intensities between pictures, the saved images are useless as they are...

The second display's behavior (i.e. not displaying the picture it should) is not really an issue here, more a curiosity 🙂

Hope you can help me before I tear the remnants of my hair out!

Best regards,

G Leveque

05-11-2015 11:23 AM

There are some strange things going on in your code. First, you seem to be saving images from your camera in Grayscale (Sgl) format, not U16. Actually, that is a good thing, as it lets you do arithmetic with less worry about overflows. Next, you divide before adding, which (if you were really dealing with integer data) means you are decreasing the precision. If you really do have Sgl buffers, do the adds inside the loop, and do the (one) division outside. Note that you want to make the accumulating buffer (Img dst) the same type as the Camera. Also, it's not clear (to me) what happens when you wire Img Dst In on the Add function -- as I read the Help, it seemed (to me) that you should leave this unwired (forcing the output buffer to be the same as Src A).
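To see why dividing before adding loses precision with integer data, here is a small Python sketch (illustrative only: plain lists stand in for U16 images, and `//` mimics integer division; the function names are hypothetical). It averages ten identical frames both ways. Python integers cannot overflow, so the "sum first" version here plays the role of the wide SGL accumulating buffer suggested above.

```python
def average_divide_first(frames):
    """Divide every frame by N (integer division), then sum: precision is lost per frame."""
    n = len(frames)
    acc = [0] * len(frames[0])
    for frame in frames:
        for i, v in enumerate(frame):
            acc[i] += v // n  # the remainder of each division is discarded here
    return acc


def average_sum_first(frames):
    """Sum all frames in a wide accumulator, then divide once at the end."""
    n = len(frames)
    acc = [0] * len(frames[0])
    for frame in frames:
        for i, v in enumerate(frame):
            acc[i] += v
    return [a / n for a in acc]


if __name__ == "__main__":
    frames = [[7, 2457, 4095]] * 10
    print(average_divide_first(frames))  # [0, 2450, 4090]
    print(average_sum_first(frames))     # [7.0, 2457.0, 4095.0]
```

Dark pixels suffer most: a value of 7 divided by 10 truncates to 0 in every frame, so it vanishes entirely from the average.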

Something that you can also do to test this is to take a single image and run it through your averaging algorithm. Right now, you have (variable) images from your camera -- what happens if you take the Acquire Image (camera) function out and substitute a fixed image? You know averaging the same thing 100 times gives you the same thing -- could serve as a Reality Check.
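The reality check can be sketched in Python as well. This is an assumption-laden stand-in, not the IMAQ code: `struct` with format `"f"` is used to round every intermediate result to single precision, mimicking an SGL accumulating buffer, and `average_f32` is a hypothetical name. Averaging 100 copies of a fixed image should return the image unchanged.

```python
import struct


def to_f32(x):
    """Round a Python float to the nearest IEEE-754 single-precision value (like SGL)."""
    return struct.unpack("f", struct.pack("f", float(x)))[0]


def average_f32(frames):
    """Average frames with a single-precision accumulator, dividing once at the end."""
    n = len(frames)
    acc = [0.0] * len(frames[0])
    for frame in frames:
        for i, v in enumerate(frame):
            acc[i] = to_f32(acc[i] + v)
    return [to_f32(a / n) for a in acc]


if __name__ == "__main__":
    image = [0, 1, 1234, 2457, 4095]         # a fixed "image" standing in for the camera
    avg = average_f32([image] * 100)         # average 100 copies of the same frame
    print(avg == [float(v) for v in image])  # True: the average reproduces the input
```

If the averaged output of the real VI differs from the fixed input image, the problem is in the averaging, not in the saving.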

Bob Schor

05-19-2015 08:06 AM

Indeed, I started by dividing the picture to avoid overflowing the pixels. I did not realize that SGL not only allows non-integer values but also effectively removes the 16-bit upper limit! Thanks for the tips.

Nevertheless, my issue here is with the final scale of my picture. Why is the picture rescaled if its maximum value is below half of the scale? Is this default NI behavior, and can it be changed?

Gael

05-19-2015 08:13 AM

Gael,

Did you try creating an image, averaging 100 copies of it, and comparing Image and Average? Are the scales different? Can you post that code so I can take a look at it (and try it out here)?

Bob Schor

05-21-2015 07:18 AM

Bob, here is a sample of code similar to mine that produces similar issues. (I know, I am messy :-p)

You can try it with a picture I sent before.

Basically, it loads a picture, shows it, averages it, and shows the average. Then this average is divided by 2 and shown, too.

The pictures shown should all be U16, but the three displays show 12-bit values. OK, no big deal.

But strangely, even though the two displays show the correct values (i.e. the same picture as the initial image, and its half), when I save them (by right-clicking on the display) and open them in ImageJ, the values are U16 but equal...

What am I missing?

Thanks!

Gael
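One hypothesis consistent with this symptom (not confirmed anywhere in this thread) is that the save path stretches each image's own minimum and maximum onto the full 16-bit range. Under such a min-max stretch, an image and its half become identical, which would match the "equal values" seen in ImageJ. A Python sketch of that hypothetical mapping (`minmax_stretch` is a made-up name, not an IMAQ function):

```python
def minmax_stretch(pixels, out_max=65535):
    """Hypothetical auto-scaling: map [min, max] of the input linearly onto [0, out_max]."""
    lo, hi = min(pixels), max(pixels)
    if hi == lo:
        return [0] * len(pixels)  # flat image: nothing to stretch
    return [round((p - lo) * out_max / (hi - lo)) for p in pixels]


if __name__ == "__main__":
    image = [0, 600, 1200, 2400]
    half = [p // 2 for p in image]  # the "divided by 2" image
    print(minmax_stretch(image))    # [0, 16384, 32768, 65535]
    print(minmax_stretch(image) == minmax_stretch(half))  # True: the 2:1 ratio is gone
```

A fixed scaling (the same factor applied to every image) would instead keep the 2:1 ratio, which is what intensity comparisons between pictures require.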

05-21-2015 07:24 AM

And here is a picture that I did not send before...

05-21-2015 11:11 AM

Gael,

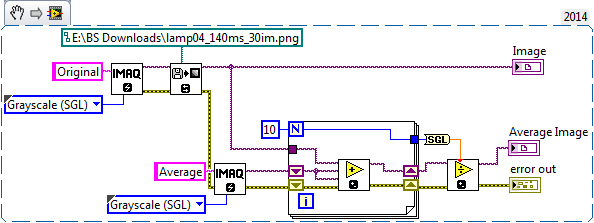

This Snippet illustrates averaging of the image you posted. Of course, if I average 10 copies of the same image, I should get the same image, so how can you tell if it works? The answer is to disconnect the "Divide-by-the-number-of-images" input to the final Image Divide, and let it divide by 1. Now look at the images. When you put your cursor near the bright spot of the image, it reads something like 3000, but when you place it over the same area of the Average Image (without dividing), it shows 30,000, 10 times larger.
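The "divide by 1" check amounts to inspecting the raw sum: N copies of the same image should give exactly N times each pixel. A minimal Python sketch of that check (`sum_frames` is a hypothetical name, and the pixel values are just examples in the range mentioned above):

```python
def sum_frames(frames):
    """Accumulate frames pixel by pixel without the final divide (i.e. divide by 1)."""
    acc = [0.0] * len(frames[0])
    for frame in frames:
        for i, v in enumerate(frame):
            acc[i] += v
    return acc


if __name__ == "__main__":
    image = [12.0, 3000.0]            # e.g. a background pixel and the bright spot
    total = sum_frames([image] * 10)
    print(total)                      # [120.0, 30000.0]: ten times the input
```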

Notice that I opened your PNG directly as Grayscale (SGL), which allows me to do arithmetic without worrying about overflows as long as the number of images is "reasonable" -- your image data is probably 8 bits (judging from the brief sample I noticed), and SGL floats have 24 bits of precision (23 stored fraction bits plus an implicit leading bit), so you can average thousands of images without losing precision.
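The "thousands of images" estimate can be checked: single precision represents every integer exactly up to 2^24, so a sum of N frames of b-bit data stays exact while N * (2^b - 1) <= 2^24. A Python sketch under that assumption (`max_exact_frames` is a hypothetical helper; `struct` with format `"f"` emulates SGL rounding):

```python
import struct


def to_f32(x):
    """Round to the nearest IEEE-754 single-precision value (LabVIEW SGL)."""
    return struct.unpack("f", struct.pack("f", float(x)))[0]


def max_exact_frames(bits):
    """Largest frame count whose worst-case sum stays within SGL's exact-integer range."""
    return 2 ** 24 // (2 ** bits - 1)


if __name__ == "__main__":
    print(max_exact_frames(8), max_exact_frames(12))  # 65793 4097
    print(to_f32(2 ** 24) == 2 ** 24)                  # True: still exact
    print(to_f32(2 ** 24 + 1) == 2 ** 24 + 1)          # False: rounded away
```

So the limit is comfortable for 8-bit data and still about four thousand frames for 12-bit camera data.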

Bob Schor