LVOOP: Object sizes

08-24-2012 07:29 AM

Hello

I have a few questions regarding LVOOP. I'll be referring to this whitepaper: http://www.ni.com/white-paper/3574/en, especially the part where it says:

LabVIEW allocates a single instance of the default value of the class. Any object that is the default value shares that pointer. This sharing means that if you have a very large array as the default value of your class there will initially only be one copy of that array in memory. When you write to an object’s data, if that object is currently sharing the default data pointer, LabVIEW duplicates the default value and then perform the write on the duplicate. Sharing the default instance makes the entire system use much less memory and makes opening a VI much faster.
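A toy Python model of the copy-on-write sharing described in the quoted paragraph may help. It is a sketch under stated assumptions, not LabVIEW's implementation: `SharedDefaultObject`, `distinct_data_blocks` and the million-element default list are hypothetical names chosen only for illustration.

```python
class SharedDefaultObject:
    # Hypothetical stand-in for a class whose private data is a large DBL array.
    # This models the described behaviour only; it is not how LabVIEW stores objects.
    _DEFAULT = [0.0] * 1_000_000

    def __init__(self):
        # A new object just refers to the shared default; nothing is copied.
        self._data = SharedDefaultObject._DEFAULT

    def is_sharing_default(self):
        return self._data is SharedDefaultObject._DEFAULT

    def read(self, index):
        # Reading never triggers a copy.
        return self._data[index]

    def write(self, index, value):
        # First write while sharing: duplicate the default, then write to the
        # duplicate, so the shared default itself is never modified.
        if self.is_sharing_default():
            self._data = list(self._data)
        self._data[index] = value


def distinct_data_blocks(objects):
    # Count how many separate data buffers the objects actually refer to.
    return len({id(o._data) for o in objects})


workers = [SharedDefaultObject() for _ in range(1000)]
print(distinct_data_blocks(workers))  # 1: all 1000 objects share the default
workers[0].write(0, 3.14)
print(distinct_data_blocks(workers))  # 2: only the written object got its own copy
print(workers[1].read(0))             # 0.0: the shared default is untouched
```

The point of the model is the asymmetry: creating or reading objects costs nothing extra, and a full copy of the default data is paid only by an object that is actually written to.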

1. What is the size (in bytes) of an LV object? This question can be split into two: what is the size of the actual object, which is stored somewhere in memory, and what is the size of a pointer to this object?

2. If I create a class which has no data, just some methods, what is the size of an object of this class? According to the whitepaper fragment above, there should be only one instance of this class in the whole application, and everything would point to it. Would the answer to this question therefore be exactly the same as the answer to question 1?

3. Following up on question 2: if the class methods are not reentrant, is there also one and only one instance of each in memory?

4. And all of this in fact leads to a single question: if I use such a pattern, do I "pay" only once for allocating all my "Worker" objects in memory, after which LV simply reuses them?

08-25-2012 12:59 PM

I can't answer your questions with the authority of knowledge (and I usually don't bother myself with these issues anyway), but here's an attempt:

- The size of the default value of the class is determined by the data it has - if it has a DBL[] with a million elements, it will be ~8 MB. Each instance of the class is actually a pointer (either to the default data or to its own copy of the data which it can modify), therefore each object is 4 or 8 bytes in size (depending on the bitness of the system)*, but if its data is different from the default, it will also require space for that data.

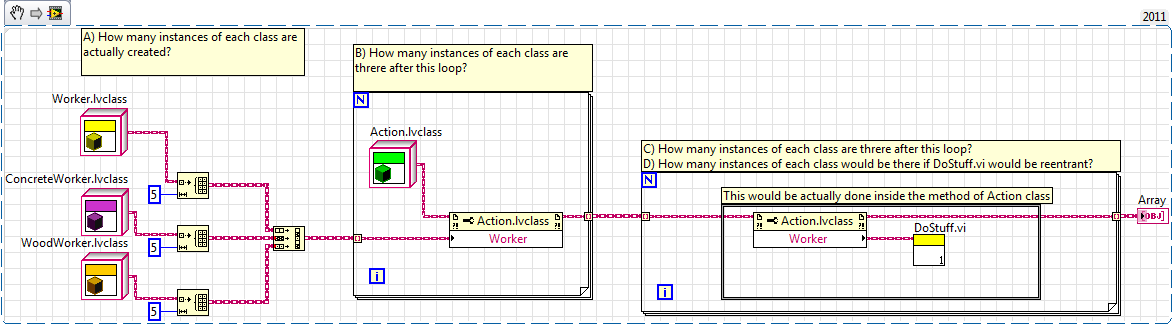

- Again, the answer to 2 is that there is only one instance of the default value of the class. There would still be N pointers pointing to that data. That also answers the questions in the image - there are N instances of the class (objects), but there is only one copy of the data.

- Yes, there would only be one instance of each VI (you were asking about the methods), and I assume only one instance of each object. I'm assuming that the same also applies if the VI was reentrant and that the place where the actual copy of the default data is made is only when you actually modify it. At least that's the way I understand that document.

- Presumably the answer is yes.

* At least, I'm assuming that a 64 bit OS only uses 64 bit pointers and simply allocates memory to 32 bit programs in a dedicated area of memory. If the design of the OS is smart (and it probably is), they can probably also have a dedicated 32 bit area for each 32 bit program and let it use the full 4 GB.

___________________

Try to take over the world!

08-27-2012 03:53 PM

Thank you for your answer, tst.

I'm aware that the class data size is determined only by the contents of the class private data cluster; that's something I can easily evaluate. The other things in objects that take up memory are more interesting 😉 However, thinking about it, I believe this overhead is probably negligible in any application (even on RT).

I'm still unsure about question 4. I've run some tests using Profile Performance and Memory, and I got some surprising results, which made me wonder when LV actually decides to instantiate another object (data cluster, to be precise) of the class instead of using the shared instance in memory. Is it possible that this happens every time I mess with the data (e.g. unbundling the object inside its method)? I'll post my results once I've re-checked and confirmed them.

P.S. I'm sure some guys over here are using the same (Strategy/State) pattern I'm talking about - have you ever considered the questions I've raised, or am I thinking too much?

08-28-2012 02:33 AM

No, it's a concern I've had also.

I've recently been attempting to convert a classic enum-based state machine to an LVOOP implementation which also receives data from an RT target. Of course, wrapping the response returned from the RT target in a message object would simplify things greatly, but I am also unsure about the object size overhead. The larger the message from RT, the less this matters, but some of our messages are only 4 bytes in size. I think the object overhead will be significant.

But I haven't done any measurements yet.

Shane.

08-28-2012 08:54 AM

Longer answers:

The answers are different between RT and desktop (and FPGA, but I'll skip that for now). All the following is true for LabVIEW 8.2 through 2012. It may change in the future or not.

On desktop, there is one and only one default value object per class which is shared and has copy-on-write semantics. On RT, every instance that LabVIEW needs is separate. This logic is the same whether the object has data fields or not.

The size of an object in memory is the size of the data + three pointers. No more, no less, on both desktop and RT.
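Taking the "data + three pointers" rule at face value, the per-object cost is easy to estimate. The Python sketch below assumes that rule exactly, counts only element bytes for array data (any array header is ignored), and uses `ctypes` only to report the pointer width of the running interpreter; `object_size_bytes` and `overhead_fraction` are hypothetical helper names. It applies the rule to Shane's 4-byte messages and to tst's million-element DBL array.

```python
import ctypes

# Pointer width of this interpreter: 4 bytes on a 32-bit build, 8 on a 64-bit build.
POINTER_SIZE = ctypes.sizeof(ctypes.c_void_p)


def object_size_bytes(data_bytes, pointer_size=POINTER_SIZE):
    # Rule stated in the thread (LabVIEW 8.2 through 2012): private data + three pointers.
    return data_bytes + 3 * pointer_size


def overhead_fraction(data_bytes, pointer_size=POINTER_SIZE):
    # Share of the object's footprint that is bookkeeping rather than payload.
    return 3 * pointer_size / object_size_bytes(data_bytes, pointer_size)


for label, ptr in (("32-bit", 4), ("64-bit", 8)):
    small = object_size_bytes(4, ptr)            # a 4-byte message payload
    big = object_size_bytes(8 * 1_000_000, ptr)  # DBL[] with a million elements
    print(f"{label}: 4-byte message -> {small} B "
          f"({overhead_fraction(4, ptr):.0%} overhead); "
          f"1M DBL -> {big} B")
# 32-bit: 4-byte message -> 16 B (75% overhead); 1M DBL -> 8000012 B
# 64-bit: 4-byte message -> 28 B (86% overhead); 1M DBL -> 8000024 B

print(f"this interpreter uses {POINTER_SIZE}-byte pointers")
```

For tiny payloads the fixed overhead dominates the footprint, while for large arrays it is negligible, which matches the intuitions expressed in the posts above.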

I have no idea what you mean when you ask what the size of a pointer to the object is. Obviously a pointer to any data is the size of an address on your system, so I'm guessing that isn't what you meant.

Allocation of objects for VIs follows the same rules as all other LabVIEW data as far as when it is allocated, deallocated and reused by the memory manager.

Reentrant methods are the same as any reentrant VIs in LabVIEW. The number of instances is based on call sites and pool size and has nothing to do with object instantiation.

All of which leads to your question number 4, and my answer is that you can write such zero-realloc programs in LabVIEW, depending upon the code you write. Objects do not have an independent lifetime in a dataflow environment the way they do in procedural environments. You pay for data in LabVIEW depending upon the functions in your VIs. The rate at which new data is allocated depends upon what you do with it, and objects follow the exact same rules as strings or arrays. Memory is freed as soon as it is no longer needed (that is, without waiting for a garbage collector to come along). If that freed space is the same size as the next block we need to allocate, the block gets reused. So if a program needs exactly the same space every time it iterates, it will use exactly the same space every time it iterates. In fact, memory won't even be released as unused in the first place if LabVIEW expects the code to iterate, leading to an allocate-at-launch-deallocate-at-overwrite pattern for all subroutines in your entire application.
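The reuse behaviour described above (release data as soon as it is dead, then hand a freed block back to the next request of the same size) can be illustrated with a toy size-keyed free list in Python. This is an assumption-laden model, not LabVIEW's memory manager: `SizeKeyedPool` and `run_iterations` are hypothetical, and the model does not capture the further optimization where LabVIEW keeps a subroutine's memory allocated between iterations instead of releasing it at all.

```python
from collections import defaultdict


class SizeKeyedPool:
    """Toy allocator: freed blocks go into a free list keyed by size and are
    handed back out when a request of exactly that size arrives."""

    def __init__(self):
        self._free = defaultdict(list)  # size -> freed blocks of that size
        self.fresh_allocations = 0      # requests that needed brand-new memory

    def allocate(self, size):
        if self._free[size]:
            return self._free[size].pop()  # reuse a freed block of the same size
        self.fresh_allocations += 1
        return bytearray(size)

    def free(self, block):
        # Released immediately when no longer needed; no garbage collector pass.
        self._free[len(block)].append(block)


def run_iterations(pool, iterations, sizes):
    # Each iteration allocates the same set of block sizes and frees them at the end.
    for _ in range(iterations):
        blocks = [pool.allocate(s) for s in sizes]
        for b in blocks:
            pool.free(b)


pool = SizeKeyedPool()
run_iterations(pool, iterations=1000, sizes=[28, 28, 8_000_024])
print(pool.fresh_allocations)  # 3: only the first iteration allocates new memory
```

When every iteration requests the same sizes, only the first iteration allocates; after that the footprint stays flat, which is the "exactly the same space every time it iterates" behaviour in miniature.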

Helpful?

08-28-2012 03:51 PM

Starting from the end:

@Aristos Queue wrote:

Helpful?

Yes it is 🙂

And now into some more clarifications:

@Aristos Queue wrote:

On desktop, there is one and only one default value object per class which is shared and has copy-on-write semantics. On RT, every instance that LabVIEW needs is separate. This logic is the same whether the object has data fields or not.

So, in my picture from the first post (let's assume that DoStuff.vi does not modify the Worker data in any way, only reads it): on desktop there would be one instance of data for each class: Worker, ConcreteWorker and WoodWorker. And on RT there would be 5 of each. Am I understanding this right? (I think I can see good reasons for such an approach on RT.)

@Aristos Queue wrote:

I have no idea what you mean when you ask what the size of a pointer to the object is. Obviously a pointer to any data is the size of an address on your system, so I'm guessing that isn't what you meant.

I'd have no idea either, now that I'm rereading my question. I meant the size of everything other than the data itself. And the answer to that is: the size of three pointers. OK.

@Aristos Queue wrote:

All of which leads to your question number 4, and my answer is that you can write such zero realloc programs in LabVIEW depending upon the code you write.

So far I understand that if I don't mess with the class data in any way other than reading it*, LV won't create another instance of the data for objects of this class (at least on desktop; RT is my main concern now). And this is useful information, especially if I want to create hundreds, maybe thousands, of objects of the same class in my application.

*Reading would obviously create a copy of the read "variable" itself by branching the wire, but not a copy of the class data itself.

08-28-2012 05:08 PM

PiDi wrote:

So far I understood that if I don't mess with the class data in any way other than reading it*, LV won't create another instance of data for this class's objects (at least on desktop, RT is my main concern now). And this is useful information, especially if I want to create hundreds, maybe thousands of objects of the same class in my application.

On desktop, you're correct. All the wires will point at the single default value.

On RT, the instances are independent, but that doesn't mean you're ever creating hundreds or thousands of the same object. If you created an array of 1000 objects, yes, you would have 1000 instances. But if you forked one object wire to two different accessor functions, and neither of them modified the object, they would both be sharing the same object.

Everything that follows is a rough guess because the LV compiler is at this point smarter than any human at doing this analysis and I reserve the right to yield to its computational superiority. I did not take the time to construct your example and put it under a debugger.

More important safety tip than that: Everything below is true only of LV 2012. The compiler and its optimization systems were massively different in 2009, slightly different in 2010, somewhat different in 2011, and should be vastly different in 2013.

In the picture shown:

When you first hit the run button, there are 4 objects on the block diagram, one in each of the four constants. The Action object has a worker object inside of it (its default value). I am ignoring anything on the front panel on the assumption that if you were using this in an application the panel would be closed. Total: 5

After section A, there are 6 objects of each of the Worker classes (5 in the array and one in the constant) and 1 Action object (in the constant) and another worker object inside that. In this particular case, I think that toggling debugging has no effect on the count, but in many cases, any interim steps would evaporate when debugging is turned off. Total: 20.

Running B will copy each element of the array into the Action object, leaving the array behind. Shouldn't those objects be moved into the property node? No. LV leaves the array in place -- it is already allocated if this entire VI gets called a second time as a subVI. So you now have 34 worker objects and 16 Action objects. Total: 50

C is curious... C will not make any further copies of the Action objects as it runs, but because you used the Property Node, you're guaranteed to make a copy of the Worker object when you read it. Because the property VI will only conditionally fire based on the error cluster, the property VI forces a copy. An unconditional VI call may or may not make a copy, depending upon many, many factors, but mostly depending upon what happens downstream. Since you say that the square region would be done inside a method of Action, put that inside an In Place Element Structure and use the Unbundle/Bundle pair and you remove the guarantee that there will be a copy made. There might still be a copy depending upon what you do in that subVI, but the probability shifts in your favor. I can't think of a scenario where an unmodifiable object would be copied going into a local subVI call (even if the subVI is reentrant or dynamic dispatch) but the LV compiler might know of a case, so I won't rule it out -- there might be something involving UI thread priority or something weird that I haven't thought of.

BUT, there will only be one object at a time, so after the loop runs, there will only be 51 objects total; that new one is the final copy made inside the loop, which remains allocated. It is 50 if you switch to an In Place Element Structure.

When you call this subroutine a second time, those allocations are already made, and they get reused. So assuming you use the inplace element structure, there is not a continuous memory bloat when you call this multiple times in succession -- your memory level holds dead flat.
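The object counts in the walkthrough above can be re-derived with a little arithmetic. The Python sketch below assumes three Worker classes (Worker, ConcreteWorker and WoodWorker, as named earlier in the thread), five array elements per class and one Action constant holding a default worker; these parameters are read off the posts, since the original picture is not available, and `tally` is a hypothetical helper. For section C it counts the optimistic case in which the In Place Element Structure avoids the copy entirely, which the post explicitly does not guarantee.

```python
# Parameters read off the thread (the original picture is not available):
WORKER_CLASSES = 3      # Worker, ConcreteWorker, WoodWorker
ELEMENTS_PER_CLASS = 5  # array elements built per class in section A


def tally(use_inplace_structure):
    counts = {}

    # Start: one constant per worker class, one Action constant,
    # plus the default worker nested inside the Action constant.
    workers = WORKER_CLASSES + 1
    actions = 1
    counts["start"] = workers + actions

    # Section A: each class now has its constant plus five array elements.
    workers = WORKER_CLASSES * (ELEMENTS_PER_CLASS + 1) + 1
    counts["after A"] = workers + actions

    # Section B: every array element is copied into a new Action object;
    # the array stays allocated, so every copy is additional.
    copied = WORKER_CLASSES * ELEMENTS_PER_CLASS
    workers += copied
    actions += copied
    counts["after B"] = workers + actions

    # Section C: the Property Node read keeps one extra Worker copy allocated;
    # the optimistic In Place Element Structure case assumes no copy at all.
    counts["after C"] = counts["after B"] + (0 if use_inplace_structure else 1)
    return counts


print(tally(use_inplace_structure=False))
# {'start': 5, 'after A': 20, 'after B': 50, 'after C': 51}
print(tally(use_inplace_structure=True)["after C"])  # 50
```

Section B dominates because the copies into the Action objects add to, rather than replace, the array that LabVIEW keeps allocated for the next call.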

08-29-2012 12:01 PM

Aristos,

Feel free to stop by and do that more often. Your insights are invaluable to those of us in the trenches fighting with the weapons you and yours produce.

Paraphrasing myself re: an SKS

"Why didn't anyone tell me there was a cleaning kit built into the butt stock?"

Ben