Memory management for custom buffer

03-14-2023 11:10 PM

@Kevin_Price wrote:

Um, the code as shown isn't going to populate the buffer. You start with an empty array of strings, and trying to replace an element of an empty array is just gonna result in an empty array still.

It's actually an array of empty strings of size "buffer size". Notice the initialize array that replaces the default cluster element.
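For readers following along without the diagram, here is a rough text-language analogy of the difference being discussed. It is only a sketch: `replace_element` is a hypothetical helper written to mimic the behaviour described above (replacing an out-of-range element leaves the array unchanged), and `buffer_size = 4` is an arbitrary value.

```python
def replace_element(array, index, value):
    """Return a copy with array[index] replaced; out-of-range indices are ignored."""
    result = list(array)
    if 0 <= index < len(result):
        result[index] = value
    return result


buffer_size = 4                   # arbitrary size chosen for illustration
empty = []                        # an empty array of strings
preallocated = [""] * buffer_size # "buffer size" empty strings

print(replace_element(empty, 0, "line"))         # [] (nothing to replace)
print(replace_element(preallocated, 0, "line"))  # ['line', '', '', '']
```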

03-15-2023 02:31 AM

Yes, Get Queue Status of course has to create a copy of the element, since the original remains in the queue.

So you do not really want that. Instead, it would almost certainly be more efficient to simply dequeue the elements normally and process them, and if your analysis determines that they are not yet ready to be thrown away, to enqueue them again, possibly at the head in reverse order if the order of elements is important (so that new elements arriving during reinsertion remain at the end of the queue).
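A minimal text-language sketch of that dequeue, process, and reinsert pattern, using Python's `collections.deque` as a stand-in for a LabVIEW queue. The helper names `drain` and `requeue_at_head` and the "-partial" naming rule are made up for illustration, and the sketch is single-threaded, so the "new arrival" is simulated by hand.

```python
from collections import deque


def drain(queue):
    """Dequeue everything currently in the queue, oldest first."""
    items = []
    while queue:
        items.append(queue.popleft())
    return items


def requeue_at_head(queue, items):
    """Put items back at the head, in reverse, so their original order is kept."""
    for item in reversed(items):
        queue.appendleft(item)


queue = deque(["a", "b-partial", "c", "d-partial"])
batch = drain(queue)

# Hypothetical analysis step: anything marked "-partial" is not ready yet.
not_ready = [item for item in batch if item.endswith("-partial")]

queue.append("e")                  # simulated new element arriving meanwhile
requeue_at_head(queue, not_ready)

print(list(queue))  # ['b-partial', 'd-partial', 'e']
```

Because the unfinished elements go back in at the head in reverse, they keep their relative order and still sit ahead of anything that arrived during processing.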

This "might" be a case for Intelligent Globals, aka enhanced LabVIEW 2 style globals. But I would first try the queue solution above and benchmark it. Queue operations are very well optimized as long as you do normal enqueue and dequeue operations. Prepending at the front is slightly less efficient for large numbers of elements, but it is for sure still much better than peeking through Get Queue Status.

03-15-2023 05:21 AM - edited 03-15-2023 05:24 AM

@altenbach wrote:

@Kevin_Price wrote:

Um, the code as shown isn't going to populate the buffer. You start with an empty array of strings, and trying to replace an element of an empty array is just gonna result in an empty array still.

It's actually an array of empty strings of size "buffer size". Notice the initialize array that replaces the default cluster element.

Yep, sure enough. Oops, never mind. Despite looking at the other stuff closer, I looked at the empty array in the big ol' cluster constant and skimmed right past the fact it was only being used as a type specifier.

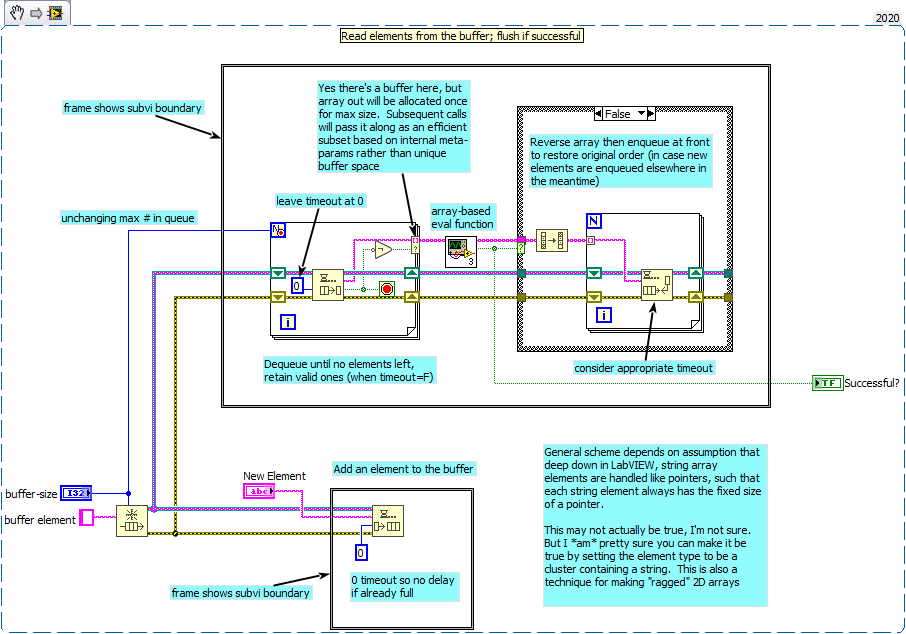

As penance, here's a quick sketch of one possible queue method. Consider it a (hopefully) decent starting point, not a final product. One key built-in assumption is that dequeue and enqueue with timeout set = 0 will succeed and not time out whenever logical (enqueue succeeds if queue not full, dequeue succeeds if queue not empty).

This has seemed to be true under relatively benign conditions, but I haven't tried this under conditions of massively parallel simultaneous access.
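For anyone who can't open the attachment, the zero-timeout assumption can be illustrated in text form. This is not a transcription of the diagram above, just a sketch of one way a bounded queue can act as a "keep the newest N" buffer, using Python's `queue.Queue` where `put_nowait` and `get_nowait` play the role of enqueue and dequeue with timeout 0. The names `lossy_enqueue` and `try_dequeue` are invented for the example.

```python
import queue


def lossy_enqueue(q, item):
    """Enqueue without waiting; if the queue is full, drop the oldest element first."""
    while True:
        try:
            q.put_nowait(item)      # like an enqueue with timeout 0
            return
        except queue.Full:
            try:
                q.get_nowait()      # make room by discarding the oldest element
            except queue.Empty:
                pass                # someone else emptied it first; just retry


def try_dequeue(q):
    """Return (True, item) if an element was available, else (False, None)."""
    try:
        return True, q.get_nowait() # like a dequeue with timeout 0
    except queue.Empty:
        return False, None


buf = queue.Queue(maxsize=3)
for line in ["one", "two", "three", "four"]:
    lossy_enqueue(buf, line)

print(try_dequeue(buf))  # (True, 'two'), because "one" was dropped to make room
```

The retry loop is there because, with several writers, another caller could fill or empty the queue between the failed enqueue and the dequeue that makes room.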

-Kevin P

03-15-2023 06:21 AM - edited 03-15-2023 06:23 AM

@Kevin_Price wrote:

This has seemed to be true under relatively benign conditions, but I haven't tried this under conditions of massively parallel simultaneous access.

There were some problems with parallel access to queues, semaphores and mutexes where the code wasn't fully thread-safe, and there was sometimes a small window of opportunity to grab the object's content while another operation was already busy grabbing it. The result, however, was sooner rather than later a good old hard crash. This got fixed in one of the releases after the initial introduction of these primitives.

Other than that, I'm not aware of any problems with parallel access, even if it is massive. Of course, if you really push massively parallel access, you should not be surprised if your operation takes much more time! The mutexing for these objects takes time, and while one call site holds such a mutex, all the other sites trying to grab it simply have to wait. That wait is asynchronous, meaning that LabVIEW can do other things in parallel to the function trying to access the object, but this only works as long as there is other work in that diagram to do!

03-15-2023 07:52 AM

FWIW, the *relatively* low overhead of the mutexing for queue access turned out to be a very useful solution to a big RT problem I faced quite some time ago.

<Sea Story>

The overall framework had, at its core, a non-reentrant shared-access FGV. It contained *all* the state variables of a complex system; we referred to it as our "data soup."

We were generally on 10 msec loop timing, but kept getting occasional timing blips that hit 12. We eventually traced it down (with NI's help) to the mechanism involved with resolving "priority inversion".

If a normal-priority process was accessing the FGV when the time-critical process *wanted* it, LabVIEW would manage things by halting the normal process' access and granting it to the time-critical one. Whatever method was used to handle this priority inversion added a 2 msec blip to the timing whenever that kind of access contention happened. I no longer recall whether the interrupted process would then get back in line for access, whether an error was asserted, or whether the call was simply skipped.

The solution that greatly helped timing jitter was to rework the FGV to store the data in an internally-named single element queue. And then the FGV was set to be reentrant (weird, right? That's why the queue needed a constant internal name, so multiple instances of the FGV would reference the one and only queue.)

The single-element queue guaranteed atomic access to the data for whoever was accessing the queue at that instant. The time-critical process would *not* interrupt the normal one, but it was a fast access process so it also never needed to wait very long. As I recall it was always under 100 microsec, <1% of the nominal loop time.

The *typical* timing jitter did increase just a bit, but the worst case got a heckuva lot better. That was a good trade-off for our purposes. And it depended on the queue access mutexing being at least *relatively* efficient.

</Sea Story>
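For readers who haven't met the single-element-queue pattern, here is a rough text-language analogy, assuming Python's `queue.Queue(maxsize=1)` stands in for the named LabVIEW queue. The registry and the names `obtain_store`, `update` and `"data_soup"` are made up for the sketch, and it says nothing about RT priorities or the timing figures above. It only shows the "take the element, modify it, put it back" access that makes the update atomic.

```python
import queue
import threading

_registry = {}                      # name -> one-element queue (hypothetical)
_registry_lock = threading.Lock()


def obtain_store(name, initial):
    """Return the single-element queue registered under `name`, creating it once."""
    with _registry_lock:
        if name not in _registry:
            q = queue.Queue(maxsize=1)
            q.put(initial)
            _registry[name] = q
        return _registry[name]


def update(q, modify):
    """Atomic read-modify-write: while the element is out, other callers wait."""
    value = q.get()                 # take the only element; queue is now empty
    try:
        value = modify(value)
    finally:
        q.put(value)                # put it back, letting the next caller in
    return value


def worker():
    # Each caller looks the queue up by its constant name, like each
    # reentrant instance obtaining the same named queue.
    q = obtain_store("data_soup", {"count": 0})
    for _ in range(1000):
        update(q, lambda state: {**state, "count": state["count"] + 1})


threads = [threading.Thread(target=worker) for _ in range(4)]
for t in threads:
    t.start()
for t in threads:
    t.join()

final = update(obtain_store("data_soup", {"count": 0}), lambda state: state)
print(final["count"])  # 4000
```

Nobody preempts anybody here: a caller that finds the queue empty simply waits until the current holder puts the element back.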

-Kevin P

03-15-2023 01:15 PM

Once memory is allocated in a queue, releasing it may be more difficult than you think. This is an old post and I don't know what has changed since, but clearing the queue does not necessarily free the memory.

That being said, the problem here does seem over-engineered. I am guessing an FGV with a string input, where new lines are appended/prepended or removed, is probably an efficient way to store a string. You could count newline tokens to determine how/where to split the string for processing.
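A rough text-language sketch of that idea, with a small Python class standing in for the FGV. The class and method names (`LineBuffer`, `append`, `line_count`, `take_lines`) are invented for illustration, and no claim is made about how this would perform compared to the queue approach.

```python
class LineBuffer:
    """Holds one string; complete lines are counted and split off by newline."""

    def __init__(self):
        self._text = ""

    def append(self, text):
        """Append raw text, which may contain zero or more newline tokens."""
        self._text += text

    def line_count(self):
        """Number of complete (newline-terminated) lines currently stored."""
        return self._text.count("\n")

    def take_lines(self, n):
        """Remove and return up to n complete lines from the front."""
        lines = []
        for _ in range(n):
            head, sep, rest = self._text.partition("\n")
            if not sep:             # no newline left: only a partial line remains
                break
            lines.append(head)
            self._text = rest
        return lines


buf = LineBuffer()
buf.append("alpha\nbeta\ngam")
buf.append("ma\n")

print(buf.line_count())   # 3
print(buf.take_lines(2))  # ['alpha', 'beta']
print(buf.line_count())   # 1
```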

03-16-2023 10:25 AM

@rolfk wrote:

Yes, Get Queue Status of course has to create a copy of the element, since the original remains in the queue.

So you do not really want that. Instead, it would almost certainly be more efficient to simply dequeue the elements normally and process them, and if your analysis determines that they are not yet ready to be thrown away, to enqueue them again, possibly at the head in reverse order if the order of elements is important (so that new elements arriving during reinsertion remain at the end of the queue).

I quite agree with this solution! I was thinking about something similar.
