Shared Network Variables too slow

03-20-2014 03:39 AM - edited 03-20-2014 03:41 AM

Here's my situation:

- On the one end I have a simple LabVIEW VI that sets a shared network variable (int32) in a loop.

- On the other end there is a small C++ application I wrote, which measures the time between variable updates.

- Both are running on the same computer (which is pretty fast BTW)

The problem is that I can't get updates any faster than one every 10 ms. This seems quite slow and is not enough for my planned use case.

When I add more variables, the cycle time still stays at 10 ms. This makes me believe that this is not a bandwidth issue, but rather something else. Thus better performance should be possible...

What I tried:

After reading all the documentation and lots of threads I tried the following things:

- Use a small delay in my VI sending loop (1ms). Without this LabVIEW seems to "choke"

- Use the Flush-Block right after the write block to send immediately

- Open and close the variable connection outside of my sending loop

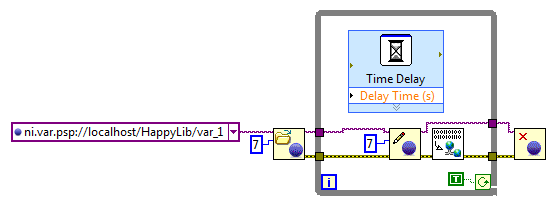

Here's what my VI looks like:

03-20-2014 04:48 AM - edited 03-20-2014 04:49 AM

Boris,

LabVIEW temporarily stores the data that you write to a shared variable in an 8 kilobyte buffer. LabVIEW sends that data over the network when the buffer is full or 10 milliseconds have passed. Because you incur overhead each time you send a data packet over the network, this design increases throughput by decreasing the number of data packets you send.

However, this design increases latency when you write less than 8 kilobytes of data to a shared variable. To eliminate the 10 millisecond delay and minimize latency, send all shared variable data over the network immediately by using the Flush Shared Variable Data VI after you write to the shared variable,[...]

That is what the LV help says about the Flush function you use in your code. It seems that Flush does not perform as described in your use case.
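The buffering rule quoted from the help can be illustrated with a toy model. The sketch below is not NI code. It only assumes what the help text states (an 8 KB buffer and a 10 ms timeout); the names `FlushModel` and `countPackets` are made up for illustration, and the timeout is only checked at write time to keep the model short.

```cpp
#include <cstddef>

// Toy model of the buffering rule from the help text (not NI code):
// buffered data is sent when the buffer is full or the timeout has expired.
struct FlushModel {
    std::size_t capacityBytes = 8 * 1024; // 8 KB buffer, from the help text
    int timeoutMs = 10;                   // 10 ms timeout, from the help text
};

// Counts how many packets the model sends when one value of bytesPerWrite
// bytes is written every writeIntervalMs, for durationMs in total.
int countPackets(const FlushModel& m, int writeIntervalMs,
                 std::size_t bytesPerWrite, int durationMs) {
    int packets = 0;
    std::size_t buffered = 0;
    int lastFlushMs = 0;
    for (int t = writeIntervalMs; t <= durationMs; t += writeIntervalMs) {
        buffered += bytesPerWrite;
        const bool full = buffered >= m.capacityBytes;
        const bool timedOut = (t - lastFlushMs) >= m.timeoutMs;
        if (full || timedOut) {
            ++packets;        // everything buffered so far leaves as one packet
            buffered = 0;
            lastFlushMs = t;
        }
    }
    return packets;
}
```

In this model, a 4-byte value written every 1 ms for 5 s leaves in 500 packets, because the buffer never fills and only the timeout triggers a send. Whether the real engine behaves like this when Flush is used is exactly what is in question here.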

I am wondering about your Time Delay Express VI. Why don't you include it in the execution chain, e.g. between write and flush (use the error cluster for this)?

Your loop never exits, so your close connection will NEVER execute. Please change that. Stopping a VI with the "Abort" button is a no-go!

Your code does not include any benchmarking. How do you know that this loop executes with 10ms delay each iteration?

Is it possible that your C++ application encounters the 10ms delay during reading?

Norbert

----------------------------------------------------------------------------------------------------

CEO: What exactly is stopping us from doing this?

Expert: Geometry

Marketing Manager: Just ignore it.

03-20-2014 05:04 AM

Hi Norbert,

I changed the VI according to your suggestions (they make total sense).

- The Delay (1ms) is now in the execution chain

- The VI is stopped via a button

But the slowish behavior (10ms cycle time) still exists.

My C++ code is as simple as it gets. It sleeps all the time and only reacts when the callback from the NI thread comes in. In that case the current time is taken and compared to the time of the previous callback.
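The "take the current time and compare it to the last time" step could look roughly like the sketch below. It is an assumption about how such a measurement might be written, not the code used in this thread. `UpdateTimer` is a hypothetical helper, and it is meant to be called from one thread only (for example, from the data callback).

```cpp
#include <chrono>

// Tracks the time between consecutive update notifications.
// Hypothetical helper; intended to be used from a single thread.
class UpdateTimer {
public:
    using Clock = std::chrono::steady_clock;

    // Call once per received update. Returns the milliseconds elapsed since
    // the previous call, or a negative value on the very first call.
    double onUpdate(Clock::time_point now = Clock::now()) {
        double elapsedMs = -1.0;
        if (hasLast_) {
            elapsedMs =
                std::chrono::duration<double, std::milli>(now - last_).count();
        }
        last_ = now;
        hasLast_ = true;
        return elapsedMs;
    }

private:
    Clock::time_point last_{};
    bool hasLast_ = false;
};
```

Using `std::chrono::steady_clock` avoids jumps when the wall clock is adjusted. The optional `now` parameter exists only so the helper can be exercised with fixed time points.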

Regarding the VI: I guess the VI runs faster than 10ms. But that doesn't mean that the variable engine transmits at the same speed right? So benchmarking wouldn't help much here... (would it?)

03-20-2014 05:35 AM

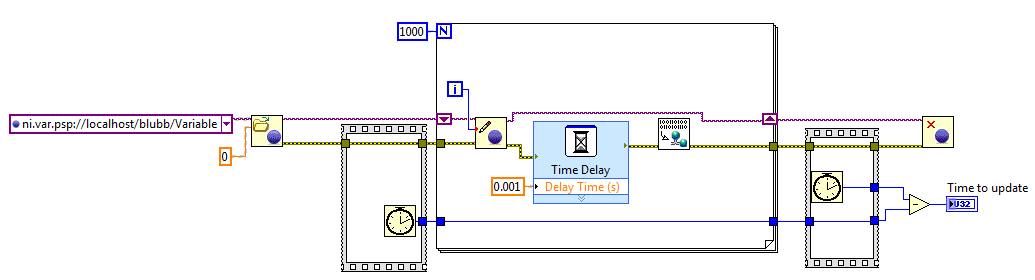

Hmm, i tested this using LV 2013 and the Distributed System Manager 2013.

I cannot see any delay when using a shared variable which is not buffering (double scalar in my test).

The iteration time of the loop was 1ms and it took the DSM to read 1000 values only a little more than 1s.

Here a screenshot of the code, which is quite similar to yours:

So obviously, the SVE (Shared Variable Engine) does not wake up your C++ code in the time pattern you expect. Have you checked for CPU load? Thread locks?

And also please verify the speed with DSM as well....

Norbert


03-20-2014 07:23 AM

The CPU load is < 2%, so that shouldn't be an issue.

DSM

How can I test the performance with the DSM? I just took a look at the tool, but I can only see the current value of the variable and the timestamp (with 1 s resolution).

LabVIEW

I will try to recreate the cycle time measurement VI you showed in your last post. Let's see if LabVIEW really runs my VI faster than 10 ms...

My C++ Test application:

The app seems to work fine. For example when I set the send-delay in LabVIEW to 13ms, my app measures exactly 13ms. This works perfectly for all values >= 10ms.

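    #include <windows.h>
    #include <tchar.h>
    #include <iostream>
    #include <cvinetv.h>  // NI Network Variable Library (CNV* functions)

    // Incremented on the library's callback thread, read on the main thread
    volatile LONG gEventCounter = 0;

    // Callback for data received events
    void CVICALLBACK EventCallback(void* handle, CNVData data, void* callbackData)
    {
        InterlockedIncrement(&gEventCounter);
        CNVDisposeData(data);
    }

    int _tmain(int argc, _TCHAR* argv[])
    {
        // Activate the variable
        CNVSubscriber subscriber;
        int retVal = CNVCreateSubscriber("\\\\127.0.0.1\\HappyLib\\var_1", EventCallback, NULL, NULL, CNVWaitForever, 0, &subscriber);
        if (retVal < 0)
        {
            std::cout << "CNVCreateSubscriber failed, error " << retVal << "\n";
            return 1;
        }

        // Count how many events arrive in 5 seconds
        Sleep(5000);
        LONG count = gEventCounter;
        std::cout << "Event count: " << count << "\n";
        if (count > 0)  // avoid dividing by zero when no event arrived
            std::cout << "Average time per event: " << (5000.0 / count) << " ms\n";

        CNVDispose(subscriber);
        CNVFinish();
        return 0;
    }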

03-20-2014 07:36 AM

Addition:

I have just tested with a VI similar to yours: LabVIEW sets the variable 5000 times in 5 seconds with a 1 ms delay.

Perfect 🙂

Still in my C++ app I only see an event every 10ms...

03-20-2014 07:40 AM

Hm,

obviously, you are using CVI, which is ANSI C. Or did you replace the CVI compiler?

In CVI, "Sleep" suspends the thread which calls that function for that time (add a little jitter because of Windows). I don't know how the callback mechanism hooks up in there, esp. considering the sleep policy...

The problem with your code is that LV has to be started first; otherwise, you have no chance to get near 5000 updates in the 5 s of sleeping (given that thread suspension isn't an issue). Again, I see no benchmarking in the code, so why do you state that a 13 ms delay is OK while 1 ms isn't?

Regarding DSM: It does not provide an update rate close to 1ms as most displays and the human eye don't "respond" to an update rate of less than 50-100ms. So you will never see each individual value. This holds true for CVI code (and display) as well.

So the only option to prove that each value was transmitted correctly is to collect the complete data set and display it on a graph element once it has been transmitted (e.g. 1000 values, as I did). If the overall "acquisition" of that data takes about 1 s, you know that each data point was sent, on average, with a 1 ms delay...
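Collecting everything first and analysing it afterwards can be done on the C++ side as well. The following is only a sketch under assumptions: it presumes the receiver has stored one arrival timestamp (in milliseconds) per update, and `IntervalStats` and `summarize` are hypothetical names. Unlike a plain event count, the minimum and maximum show whether updates arrive evenly or in bursts.

```cpp
#include <algorithm>
#include <cstddef>
#include <vector>

// Summary of the gaps between consecutive arrival timestamps.
struct IntervalStats {
    std::size_t intervals = 0; // number of gaps (timestamps - 1)
    double meanMs = 0.0;
    double minMs = 0.0;
    double maxMs = 0.0;
};

// arrivalMs holds one timestamp per received update, in ascending order.
IntervalStats summarize(const std::vector<double>& arrivalMs) {
    IntervalStats s;
    if (arrivalMs.size() < 2) {
        return s; // not enough data to form an interval
    }
    s.intervals = arrivalMs.size() - 1;
    s.minMs = s.maxMs = arrivalMs[1] - arrivalMs[0];
    for (std::size_t i = 1; i < arrivalMs.size(); ++i) {
        const double gap = arrivalMs[i] - arrivalMs[i - 1];
        s.minMs = std::min(s.minMs, gap);
        s.maxMs = std::max(s.maxMs, gap);
    }
    // Mean gap = total span divided by the number of gaps.
    s.meanMs = (arrivalMs.back() - arrivalMs.front()) /
               static_cast<double>(s.intervals);
    return s;
}
```

Two series can share the same mean and still look very different: evenly spaced arrivals give equal minimum and maximum, while batched delivery gives a minimum near zero and a large maximum.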

Norbert


03-20-2014 07:48 AM

> In CVI, "Sleep" suspends the thread which calls that function for that time (add a little jitter because of Windows). I don't know how the callback mechanism hooks up in there, esp. considering the sleep policy...

It works as follows: the main thread sleeps for 5 seconds. A second thread (the LabWindows/CVI thread) calls the callback whenever new data arrives. This is independent of the main thread.

> The problem with your code is that LV has to be started first; otherwise, you have no chance to get near 5000 updates in the 5 s

That's right. But why is this a problem? That's the way I do it: start LV (endless sending loop), then start the tool which records for 5 seconds.

> Again, I see no benchmarking in the code, so why do you state that a 13 ms delay is OK while 1 ms isn't?

What do you mean? I count the variable updates I receive in gEventCounter. After 5 seconds I divide the elapsed time by the number of events, which gives me the average cycle time.

In this case: 5000 ms / 384 events = 13 ms per event

If I make LabVIEW go at 1 ms cycle time, I get exactly 500 events in 5000 ms, meaning each event takes 10 ms.

03-20-2014 08:16 AM

Booya!

I just made a very funny observation:

- When I send a constant number from LabVIEW with 1ms cycle time, I get an update every 10ms in my test app. (That's the problem I was describing).

- But when I send a changing number (!) I get 1ms cycle time all the way. Everything is working fine then.
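The thread does not establish why a constant value behaves differently. Since a changing value arrives at the full rate, one practical consequence is that a loop counter makes a good test payload: the receiver can then verify that no update was skipped. The sketch below assumes the sender writes a counter that increases by exactly 1 per iteration; `countMissedUpdates` is a hypothetical helper, not part of any NI API.

```cpp
#include <cstddef>
#include <cstdint>
#include <vector>

// Counts updates that never arrived, assuming the sender writes a counter
// that increases by exactly 1 per loop iteration (an assumption of this
// sketch). received holds the values in the order they arrived.
std::size_t countMissedUpdates(const std::vector<std::int32_t>& received) {
    std::size_t missed = 0;
    for (std::size_t i = 1; i < received.size(); ++i) {
        // Widen before subtracting so large jumps cannot overflow.
        const std::int64_t step =
            static_cast<std::int64_t>(received[i]) - received[i - 1];
        if (step > 1) {
            missed += static_cast<std::size_t>(step - 1);
        }
    }
    return missed;
}
```

A result of 0 over a 5 second run, together with roughly 5000 received values, would indicate that every 1 ms update reached the subscriber.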