Size of binary and csv files

05-05-2013 03:29 AM

Hi all,

I want to save my data to both a CSV file and a binary file (.DAT). The VI appends 100 new double-precision values to these files every 100 ms.

The VI works correctly, but I noticed that the binary file is bigger than the CSV file.

For example, after several minutes, the CSV file is 3.3 KB, while the binary file is 4 KB.

But shouldn't binary files be smaller than text files?

Thanks to all

05-05-2013 03:36 AM

It all depends on how you're creating your binary file and what you're putting into it.

What data do you want to store? Can you post an example of your code, or of the data?

- Bjorn -

Have fun using LabVIEW... and if you like my answer, please pay me back in Kudos 😉

LabVIEW 5.1 - LabVIEW 2012

05-05-2013 03:47 AM

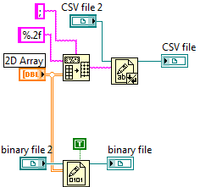

I save data in this way:

The data are a 2D array (2 X 50 double precision). The previous code is placed in a while loop, the writing is repeated every 100 ms. In this way, the VI appends 100 new double precision data (2 X 50) every iteration.

05-05-2013 12:53 PM - edited 05-05-2013 12:58 PM

How are you measuring the instantaneous file size? Note that whatever you see in Windows Explorer is probably too small until you flush the file and refresh the window.
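The buffering effect is easy to reproduce outside LabVIEW. A minimal Python sketch, not LabVIEW code (the temporary file name is made up for the demo): bytes still sitting in the writer's buffer are not counted in the file size until the file is flushed.

```python
import os
import struct
import tempfile

# Hypothetical file in a temporary folder, just for the demonstration.
path = os.path.join(tempfile.mkdtemp(), "demo.dat")

with open(path, "wb") as f:
    f.write(struct.pack(">100d", *([1.5] * 100)))  # 100 DBLs = 800 bytes
    size_before_flush = os.path.getsize(path)      # often 0: data is still buffered
    f.flush()                                       # hand the buffer to the OS
    os.fsync(f.fileno())                            # and ask the OS to write it out
    size_after_flush = os.path.getsize(path)

print(size_before_flush, size_after_flush)
```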

In any case, the binary file will use 8 bytes per value, while the number of characters in the CSV file depends on the formatting. How big are the numbers? You configured only 2 decimal digits, so if the values are small, you might use fewer than 8 bytes per value (including delimiters, etc.). Of course, you are also losing a lot of precision at the same time.
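The precision trade-off can be sketched in a few lines of Python (illustration only; big-endian packing is assumed here to mirror LabVIEW's default byte order). Text with 2 decimal places is short for small numbers but does not round-trip, while an 8-byte DBL comes back bit-for-bit identical.

```python
import struct

x = 2.0 / 3.0

# Text with 2 decimal places: compact for small numbers, but lossy.
as_text = f"{x:.2f}"
from_text = float(as_text)

# Binary DBL: always 8 bytes, and the round trip is exact.
as_binary = struct.pack(">d", x)
from_binary = struct.unpack(">d", as_binary)[0]

print(as_text, from_text == x)            # 0.67 False
print(len(as_binary), from_binary == x)   # 8 True
```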

You are also prepending the array size for each chunk, adding more overhead in the binary case.
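To see where the extra bytes come from, here is a Python sketch of a size-prefixed layout. It assumes, for illustration only, a single big-endian I32 element count in front of each 1-D chunk of 100 DBLs; `write_chunk` and `read_chunks` are hypothetical helpers, and the real layout of your file should be checked before relying on this.

```python
import io
import struct

def write_chunk(f, values):
    """Write one chunk: a big-endian I32 element count, then the DBLs."""
    f.write(struct.pack(">i", len(values)))
    f.write(struct.pack(f">{len(values)}d", *values))

def read_chunks(f):
    """Read back chunks written by write_chunk until end of file."""
    chunks = []
    while True:
        header = f.read(4)
        if len(header) < 4:
            break
        (n,) = struct.unpack(">i", header)
        chunks.append(list(struct.unpack(f">{n}d", f.read(8 * n))))
    return chunks

buf = io.BytesIO()  # stands in for the .dat file
for i in range(3):
    write_chunk(buf, [float(i)] * 100)

print(len(buf.getvalue()))  # 2412, i.e. 3 * (4 + 800)
buf.seek(0)
print(len(read_chunks(buf)))  # 3
```

Each chunk costs 4 bytes more than the raw data, which is the overhead being described.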

05-05-2013 05:49 PM

You're not conducting a fair test, because you're not doing the same thing in both cases.

The bottom portion stores DBLs, a 64-bit floating-point representation with about 16 significant digits of precision.

The top portion stores a string which you have cut off at two decimal places.

If you store 1/3 as a string with 2 DecPlaces, you'll get "0.33;" = 5 bytes.

Store 1/3 as a DBL and it's 8 bytes. Every time.

On the other hand if you store 1 trillion / 3 as a string with 2 DecPlaces, you'll get "333333333333.33;" = 16 bytes

Store it as a DBL and it's 8 bytes. Every time.
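The same comparison can be checked with a small Python sketch (not LabVIEW; `csv_bytes` is a hypothetical helper that assumes fixed decimals plus a ";" delimiter, as in the examples above).

```python
import struct

def csv_bytes(value, decimals=2, delimiter=";"):
    """Bytes used by one value written as text with fixed decimals plus a delimiter."""
    return len(f"{value:.{decimals}f}{delimiter}".encode("ascii"))

DBL_BYTES = struct.calcsize(">d")  # a DBL is 8 bytes regardless of its value

print(csv_bytes(1 / 3), DBL_BYTES)     # 5 8
print(csv_bytes(1e12 / 3), DBL_BYTES)  # 16 8
```

The text size grows with the magnitude of the number; the binary size does not.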

Culverson Software - Elegant software that is a pleasure to use.

Culverson.com

Blog for (mostly LabVIEW) programmers: Tips And Tricks

05-06-2013 06:28 AM

> You are also prepending the array size for each chunk, adding more overhead in the binary case.

Should I avoid prepending the size on every write? How could I read the binary file back without a header?

So...

For example, the number 110.99 stored in CSV format takes 7 bytes (5 digits + decimal separator + delimiter).

In the binary case, it occupies 8 bytes.

Is that correct?

I need only 5 digits (hundreds, tens, units, and 2 decimal digits). I could save the data in single-precision floating-point format (4 bytes). That way, the file should be half the size of the double-precision one.

Right?
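A quick Python check of these numbers (not LabVIEW; big-endian packing is assumed to mirror LabVIEW's default byte order). Written as "110.99;" the value takes 7 bytes of text, because the decimal separator and the delimiter each cost a byte; a DBL takes 8 bytes and an SGL 4. The last part checks that every 5-digit value from 0.00 to 999.99 survives an SGL round trip at 2 decimal places.

```python
import struct

value = 110.99

text = f"{value:.2f};"                   # "110.99;" with a ";" delimiter
dbl = struct.pack(">d", value)           # DBL: 8 bytes
sgl = struct.pack(">f", value)           # SGL: 4 bytes
sgl_back = struct.unpack(">f", sgl)[0]   # the value as read back from an SGL

print(len(text), len(dbl), len(sgl))     # 7 8 4
print(f"{sgl_back:.2f}")                 # 110.99

# Every value 0.00 ... 999.99 keeps its 2 decimals after an SGL round trip.
all_ok = all(
    f"{struct.unpack('>f', struct.pack('>f', k / 100))[0]:.2f}" == f"{k / 100:.2f}"
    for k in range(100_000)
)
print(all_ok)                            # True
```

So, for values in that range, SGL halves the raw data size without losing the 2 decimals.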

Thanks

05-06-2013 07:10 AM

You have several options.

The first (and easiest) is to not worry about it. If you use DBL and then decide next month that you want six digits of resolution, it's there. If you use STR and decide that next month, well, you're out of luck. Storage is cheap. Maybe that works for you, maybe it doesn't.

The comment about prepending the header is accurate, but it's a small overhead: 4 bytes added to each chunk of 100 × 8 = 800 bytes, which is 0.5%.

If you don't like that, then avoid the prepending. Simply declare it as a file of DBL, with no header, and be done.

You store nothing but DBLs in it.

That means that YOU have to figure out how many are in the file (SizeOf(file) / SizeOf(DBL)), but that's doable.

You have to either keep the file open during writing, or open+seek end+write+close for each chunk.
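A minimal Python sketch of the header-less approach (not LabVIEW; the file path and the helpers `append_chunk` and `read_all` are hypothetical, and big-endian DBLs are assumed). Each chunk is written with open + seek-to-end + write + close, and the number of values is recovered as SizeOf(file) / SizeOf(DBL).

```python
import os
import struct
import tempfile

# Hypothetical file location for the demonstration.
path = os.path.join(tempfile.mkdtemp(), "raw_dbl.dat")

def append_chunk(path, values):
    """Open, append (mode "ab" writes at the end), write raw DBLs, close."""
    with open(path, "ab") as f:
        f.write(struct.pack(f">{len(values)}d", *values))

def read_all(path):
    """Read a header-less file of big-endian DBLs back into a list."""
    dbl_size = struct.calcsize(">d")
    count = os.path.getsize(path) // dbl_size  # SizeOf(file) / SizeOf(DBL)
    with open(path, "rb") as f:
        return list(struct.unpack(f">{count}d", f.read(count * dbl_size)))

for i in range(5):
    append_chunk(path, [i + k / 100 for k in range(100)])

data = read_all(path)
print(len(data))  # 500
```

Because the file holds nothing but DBLs, the reader only has to know the data type (and, for the 2 × 50 layout, how to split the flat list back into rows).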

If you want to save space, consider using SGL instead of DBL. If it's measured data, it's not accurate beyond 6 significant digits anyway.

Or consider saving it as I16s, to which you apply a scaling factor when reading.
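A Python sketch of the I16 idea, assuming a hypothetical scale of 0.01 units per count (the names `SCALE`, `to_i16` and `from_i16` are illustrative, not from any library). Note the range limit: with this scale an I16 only covers -327.68 to 327.67, so values up to 999.99 would need a coarser scale or a wider integer type.

```python
import struct

SCALE = 0.01  # hypothetical: one count = 0.01 engineering units

def to_i16(values, scale=SCALE):
    """Quantize to signed 16-bit counts; refuse values that do not fit."""
    counts = [round(v / scale) for v in values]
    for c in counts:
        if not -32768 <= c <= 32767:
            raise ValueError(f"{c} does not fit in an I16; choose a larger scale")
    return struct.pack(f">{len(counts)}h", *counts)

def from_i16(raw, scale=SCALE):
    """Apply the scaling factor when reading the counts back."""
    n = len(raw) // 2
    return [c * scale for c in struct.unpack(f">{n}h", raw)]

raw = to_i16([110.99, -5.25, 0.0])
print(len(raw))                             # 6 (2 bytes per value)
print([f"{v:.2f}" for v in from_i16(raw)])  # ['110.99', '-5.25', '0.00']
```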

Those are only if you seriously need to save space, though.

05-06-2013 07:52 AM

Thank you, Coastal.

My VI acquires 100 new values every 100 ms, which is 3.6 × 10^6 values every hour.
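The hourly numbers work out as follows in a short Python sketch (raw data only, ignoring any prepended size headers; MB here means 10^6 bytes).

```python
# Acquisition rate from the thread: 100 values every 100 ms.
VALUES_PER_READ = 100
READS_PER_SECOND = 10
values_per_hour = VALUES_PER_READ * READS_PER_SECOND * 3600

# Bytes per value for each candidate storage type.
BYTES_PER_VALUE = {"DBL": 8, "SGL": 4, "I16": 2}
mb_per_hour = {name: values_per_hour * size / 1e6 for name, size in BYTES_PER_VALUE.items()}

print(values_per_hour)  # 3600000
for name, mb in mb_per_hour.items():
    print(f"{name}: {mb:.1f} MB per hour")
```

This prints 28.8 MB per hour for DBL, 14.4 for SGL and 7.2 for I16.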

Since the VI has to run for several hours, I would like to keep the file as small as possible. I think I will use SGL data.

> The comment about prepending the header is accurate, but it's a small overhead. That's adding 4 bytes for each chunk of 100*8 = 800, that's 0.5%.

Indeed, the header is negligible.

Thanks