Subtraction between timestamps

Solved! 04-03-2024 07:35 AM - edited 04-03-2024 07:39 AM

Good morning.

While subtracting timestamps, I'm running into seemingly nonsensical behavior, and it is becoming quite a headache for the algorithm I'm trying to develop.

Specifically, subtracting two timestamps gives me a double that represents the number of seconds elapsed between the two instants. If I convert this number into a new timestamp, the result is invariably one hour more than the actual difference between the two times.

What am I missing?

Thanks to everyone who can give me a hand.

04-03-2024 08:00 AM

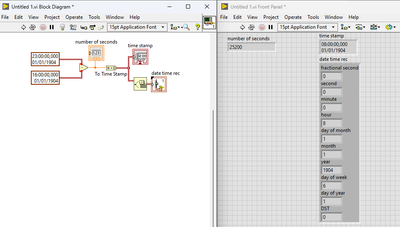

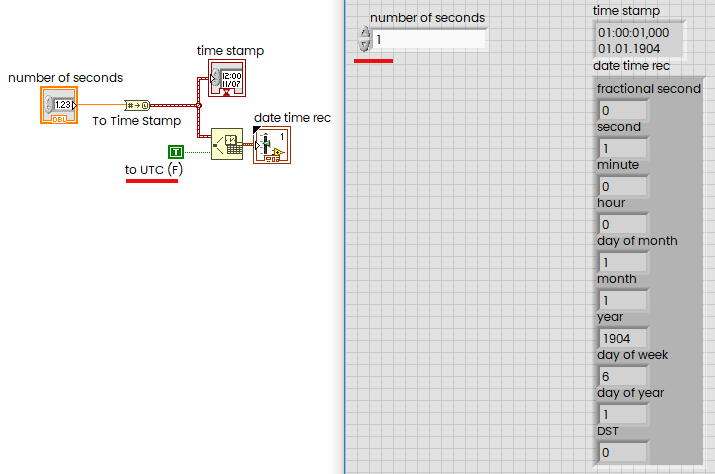

Try to convert just 1 second with and without UTC, this should explain everything for you:

04-03-2024 08:05 AM

The Time Stamp control/indicator/constant displays its value based on your system's time zone. I'm pretty certain I saw a nugget about having the Time Stamp display in UTC, which would show the 7 hours that you are looking for. I just don't remember how to do it at the moment.

There are only two ways to tell somebody thanks: Kudos and Marked Solutions

Unofficial Forum Rules and Guidelines

"Not that we are sufficient in ourselves to claim anything as coming from us, but our sufficiency is from God" - 2 Corinthians 3:5

04-03-2024 08:14 AM

Great point, Andrey Dmitriev.

Indeed, the documentation of the "To Time Stamp Function" specifies that "time stamp is a time-zone-independent number of seconds that have elapsed since 12:00 a.m., Friday, January 1, 1904, Universal Time [01-01-1904 00:00:00]."
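To make the effect concrete outside LabVIEW, here is a rough Python sketch of the same idea. It is only an analogy, not LabVIEW's implementation: `EPOCH_1904`, `LOCAL_ZONE`, and `seconds_to_instant` are names made up for illustration, and the UTC+1 zone is an assumption chosen to match the one-hour offset described above.

```python
from datetime import datetime, timedelta, timezone

# Epoch quoted in the documentation: 1904-01-01 00:00:00 Universal Time.
EPOCH_1904 = datetime(1904, 1, 1, tzinfo=timezone.utc)

# Assumption: a local zone one hour ahead of UTC (hypothetical, for illustration).
LOCAL_ZONE = timezone(timedelta(hours=1))


def seconds_to_instant(seconds: float) -> datetime:
    """Interpret a number of seconds as an absolute instant after the 1904 epoch."""
    return EPOCH_1904 + timedelta(seconds=seconds)


one_second = seconds_to_instant(1.0)

# The stored value is the same instant; only the rendering differs.
utc_text = one_second.strftime("%H:%M:%S")
local_text = one_second.astimezone(LOCAL_ZONE).strftime("%H:%M:%S")

print(utc_text)    # 00:00:01
print(local_text)  # 01:00:01
```

The stored number never changes; the extra hour appears only when the instant is rendered in the local zone.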

Thank you so much.

04-03-2024 08:33 AM - edited 04-03-2024 08:35 AM

I just found the trick. Right-click on the timestamp and choose "Display Format". Choose "Advanced editing mode" at the bottom of the dialog and then change the Format String to "%^<%.3X %x>T". The %^<>T format is described as "Universal time container". So just inserting that caret at the front makes the display use UTC instead of your local time zone.
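For comparison, the same fix can be sketched in Python: subtract two timestamps, then render the elapsed seconds as a clock time in UTC so that no local offset is added. This is a loose analogy to the caret in the format string, not a description of what LabVIEW does internally. The `start` and `end` values and the `format_elapsed` helper are hypothetical, picked to give a 7-hour difference.

```python
from datetime import datetime, timedelta, timezone

# Same 1904 epoch as the LabVIEW time stamp, expressed in UTC.
EPOCH_1904 = datetime(1904, 1, 1, tzinfo=timezone.utc)


def format_elapsed(seconds: float) -> str:
    """Render elapsed seconds as HH:MM:SS, read as a UTC clock time.

    Only meaningful for durations under 24 hours; longer ones wrap around.
    """
    instant = EPOCH_1904 + timedelta(seconds=seconds)
    return instant.strftime("%H:%M:%S")  # rendered in UTC, no local offset


# Hypothetical timestamps, seven hours apart.
start = datetime(2024, 4, 3, 7, 35, tzinfo=timezone.utc)
end = datetime(2024, 4, 3, 14, 35, tzinfo=timezone.utc)

elapsed = (end - start).total_seconds()
print(elapsed)                  # 25200.0
print(format_elapsed(elapsed))  # 07:00:00
```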
