Timestamp to milliseconds

Solved! 08-28-2012 02:09 PM

I am trying to compute elapsed time from Timestamp data types with millisecond (or better) resolution.

If I use a feedback node in a loop and subtract the previous iteration Timestamp value from the current iteration Timestamp value, I get a deltaT, but only to 1 second resolution.

I think that timestamps are a composite of an I64 (for seconds) and a U64 (for subseconds), but I cannot figure out a way of breaking the timestamp up into those two components.

Rick

PS: I need to do this with Timestamp data types because the data arrives via DataSocket from another system downstream that is actually doing the timestamping, and I can't change that.

MIT Kavli Institute for Astrophysics and Space Research
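To make the arithmetic concrete, here is a minimal Python sketch of the elapsed-time calculation, assuming the layout Rick describes: an I64 of whole seconds plus a U64 whose units are 2^-64 of a second. The function name `elapsed_ms` and the `(seconds, fraction)` tuple are hypothetical, chosen for illustration only; LabVIEW itself is graphical and does not expose this code.

```python
from fractions import Fraction

# Assumption: the U64 subsecond field counts units of 2**-64 seconds.
FRACTION_SCALE = 2 ** 64


def elapsed_ms(prev, curr):
    """Elapsed milliseconds between two (seconds, fraction) pairs.

    Each pair is (I64 whole seconds, U64 subsecond count); both names
    are hypothetical stand-ins for the two timestamp components.
    """
    prev_s, prev_f = prev
    curr_s, curr_f = curr
    # Subtract whole seconds and subseconds separately, using exact
    # rational arithmetic, so the large epoch offset cannot swamp the
    # small fractional difference.
    delta_s = Fraction(curr_s - prev_s) + Fraction(curr_f - prev_f, FRACTION_SCALE)
    return float(delta_s * 1000)


# One whole second plus half a second of subseconds.
print(elapsed_ms((0, 0), (1, 2 ** 63)))  # 1500.0
```

Subtracting the two parts separately matters because the fractional difference can be negative (a borrow from the seconds field) and still yield the right result.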

08-28-2012 02:22 PM

Rick,

Please show what you are doing. I get resolution much finer than a millisecond (although the accuracy is probably not that good).

Lynn
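Lynn's observation is consistent with floating-point arithmetic if the timestamps end up as a DBL count of seconds. That is an assumption here, since the thread does not show the block diagram. The Python sketch below estimates the gap between adjacent double-precision values at a magnitude typical of seconds counted from 1904 to 2012. The helper name `double_spacing` and the value `3.4e9` are illustrative, not taken from the thread.

```python
import math


def double_spacing(value):
    """Gap between `value` and the next larger float64.

    Valid for positive, finite, normal values.
    """
    # frexp returns value == mantissa * 2**exponent with 0.5 <= mantissa < 1;
    # a float64 carries 53 significant bits.
    _, exponent = math.frexp(value)
    return math.ldexp(1.0, exponent - 53)


# Illustrative magnitude only: roughly the number of seconds between
# 1904 and 2012, not a measured timestamp.
seconds_since_1904 = 3.4e9
spacing = double_spacing(seconds_since_1904)
print(spacing < 1e-3)  # True
```

At that magnitude the spacing is 2^-21 s, a little under half a microsecond, so a 1-second step in deltaT is unlikely to come from the DBL representation itself.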

08-28-2012 04:21 PM - edited 08-28-2012 04:23 PM

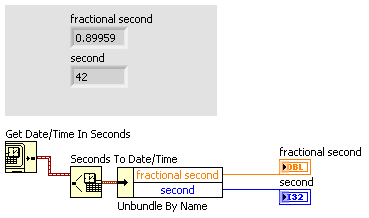

Seconds is an I32 and fractional seconds is a DBL.

08-29-2012 06:50 AM

Try to capture the timestamp values from the remote system into an array and see if the individual values contain fractional seconds.

My guess is that the remote system is reducing the precision to seconds before publishing via DataSocket.
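The check suggested above can be sketched in a few lines of Python, assuming the captured timestamps have already been converted to floating-point seconds. The function name `has_subsecond_detail` and the sample values are hypothetical.

```python
def has_subsecond_detail(timestamps_s, tolerance=1e-9):
    """Return True if any captured value has non-zero fractional seconds."""
    # A value that differs from its nearest whole second by more than
    # the tolerance still carries subsecond information.
    return any(abs(t - round(t)) > tolerance for t in timestamps_s)


# Hypothetical captures: the first set looks truncated to whole seconds,
# the second still has fractional parts.
print(has_subsecond_detail([100.0, 101.0, 102.0]))  # False
print(has_subsecond_detail([100.0, 101.25, 102.5]))  # True
```

If every captured value comes back as a whole number of seconds, the truncation is happening before the data reaches the receiving VI.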