Wait functions in while loops and their relation to timed measurements

07-21-2016 10:56 AM

Hello all,

My question stems from something rather common: I want to make a measurement (in this case from a multimeter) at a defined time interval. My first thought is to use a wait function set to, say, 1000 ms.

But do the wait and the code that actually collects the data start at the same time? Or does the loop time end up being:

code compilation time + the wait function time?

Finally, if the wait and the LabVIEW code do execute at the same time, to what resolution/accuracy can we say that the time between measurements is 1000 ms?

Thanks!

07-21-2016 11:10 AM

Determined by data flow.

If there is no dependency between the two execution paths of the wait and the multimeter read (I don't know what you mean by "code compilation time"), then they will run in parallel and start at the same time.

If you have it wired up so that the multimeter read is waiting for the wait to finish (perhaps that is put in a case structure and you have an output wire of the wait going to the case structure), then they will happen in series.

For waits, you are dependent on the computer's internal hardware timer, but you should be good to within a millisecond.
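To put numbers on the parallel versus series cases, here is a minimal Python sketch of the loop timing. It is only an arithmetic model, not LabVIEW code: the function name `iteration_time_ms` and the example read times are made up for illustration, and loop overhead and timer jitter are ignored.

```python
def iteration_time_ms(read_ms, wait_ms, parallel):
    """Model the duration of one loop iteration (hypothetical helper).

    parallel=True : the wait and the read have no data dependency, so they
                    start together and the iteration ends when the slower
                    of the two finishes.
    parallel=False: the read is wired to run after the wait, so the two
                    durations add up.
    """
    if parallel:
        return max(read_ms, wait_ms)
    return read_ms + wait_ms


# Assumed example: a 150 ms multimeter read and a 1000 ms wait.
print(iteration_time_ms(150, 1000, parallel=True))    # 1000
print(iteration_time_ms(150, 1000, parallel=False))   # 1150
# If the read is slower than the wait, the read sets the loop period.
print(iteration_time_ms(1500, 1000, parallel=True))   # 1500
```

So in the parallel case the 1000 ms interval holds only as long as the read itself takes less than 1000 ms.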

07-21-2016 11:20 AM

Thanks for your reply!

In my current case, the section of code that acquires the data point from the multimeter and the wait function are both inside the loop structure, with no sequence structures or dependency between them. In this case, can I assume the wait starts at the same time as the data acquisition bit?

Is there a more elegant way of ensuring that the time between two measurements is exactly, say, 1 second (within some error, of course)?

And sorry, by "code compilation time" I was referring to the data acquisition section of code on its own, not including the wait.

Thanks

07-21-2016 11:29 AM

You can also use the Elapsed Time function with one second as the target time: read the multimeter value once the time has elapsed, then reset the timer after the data is read so that it waits for the next cycle.

There are many different ways to achieve this!
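As a rough text-language analogue of the elapsed-timer idea, here is a Python sketch. The names `ElapsedTimer`, `read_multimeter` and `acquire` are hypothetical stand-ins, not LabVIEW or driver APIs, and the instrument read is just a placeholder.

```python
import time


class ElapsedTimer:
    """Hypothetical stand-in for an elapsed-time check with a reset."""

    def __init__(self, target_s, clock=time.monotonic):
        self._clock = clock
        self.target_s = target_s
        self._start = clock()

    def has_elapsed(self):
        # True once at least target_s seconds have passed since the last reset.
        return self._clock() - self._start >= self.target_s

    def reset(self):
        self._start = self._clock()


def read_multimeter():
    """Placeholder for the real instrument read (assumption)."""
    return 0.0


def acquire(n_samples, interval_s, clock=time.monotonic, sleep=time.sleep):
    timer = ElapsedTimer(interval_s, clock)
    samples = []
    while len(samples) < n_samples:
        if timer.has_elapsed():
            samples.append((clock(), read_multimeter()))
            timer.reset()   # reset after the read, then wait for the next cycle
        else:
            sleep(0.001)    # yield the CPU instead of spinning
    return samples
```

Note that in this sketch the timer is reset after the read, so a slow read pushes the next measurement back by the time the read took.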

Palanivel Thiruvenkadam | பழனிவேல் திருவெங்கடம்

LabVIEW™ Champion |Certified LabVIEW™ Architect |Certified TestStand Developer

Kidlin's Law -If you can write the problem down clearly then the matter is half solved.

-----------------------------------------------------------------------------------------------------------------

07-21-2016 11:38 AM

The first suggestion will complete each loop iteration within 1 second, including both acquisition and wait time.

07-21-2016 12:18 PM - edited 07-21-2016 12:33 PM

Don't get in the habit of using Wait (ms) or Wait Until Next ms Multiple.

I know it's not a big deal when taking one measurement from one instrument, but later on you might have to take multiple measurements from multiple instruments while keeping the time interval equal. For instance, I have a few power analyzers that only have a 9600 baud serial connection. If I am taking all the standard AC power measurements from a few of these (in one test I had four of them with all three channels loaded, plus a data logger with 30 temperature probes), it can take several seconds to pull all of the data out of all those instruments. That polling time needs to be accounted for in your polling timer.

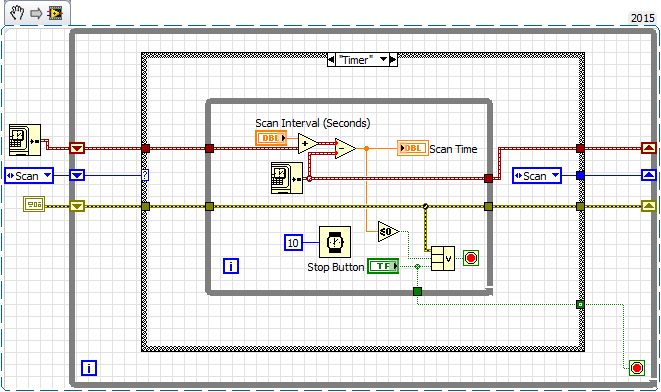

Here is what I do. This is a simplified state machine: I am showing just the timer and a "scan" case, and normally I would use a TypeDef in place of the enum.

What I want you to see is that I am using the current time and "doing the math" to see if it's time to take a measurement.

Notice that when it is time to take a measurement, a new time stamp is generated. I use that time stamp on my data and, using shift registers, that time stamp becomes the "start time" for doing the math next time.

Now the time it takes to actually take all the measurements is accounted for in the measurement timer.
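For readers without the block diagram in front of them, the same idea can be sketched in Python. This is a loose analogue, not a translation of the VI: `State`, `scan_instruments` and `run` are hypothetical names, the `start` variable plays the role of the shift register, and a monotonic clock is assumed in place of the LabVIEW time stamp.

```python
import time
from enum import Enum, auto


class State(Enum):
    IDLE = auto()   # "do the math" on the clock
    SCAN = auto()   # poll the instruments


def scan_instruments():
    """Placeholder for polling every instrument (assumption)."""
    return []


def run(n_scans, interval_s, clock=time.monotonic, sleep=time.sleep,
        scan=scan_instruments):
    state = State.IDLE
    start = clock()          # carried between iterations like a shift register
    log = []
    while len(log) < n_scans:
        if state is State.IDLE:
            now = clock()
            if now - start >= interval_s:
                start = now  # new time stamp becomes the next "start time"
                state = State.SCAN
            else:
                sleep(0.001)
        else:
            # Stamp the data with the time the scan became due, then poll.
            log.append((start, scan()))
            state = State.IDLE
    return log
```

Because `start` is updated before the scan runs, the time spent polling counts toward the next interval rather than being added on top of it, as long as a scan finishes within one interval.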

=== Engineer Ambiguously ===

========================

07-21-2016 12:18 PM

@quantisedpenguin wrote: Is there a more elegant way of ensuring that the time between two measurements is exactly, say, 1 second (within some error, of course)?

How accurate do you want that 1 second rate to be?

If software timing is good enough, then you are on the right track already (put the wait in parallel with the code that does the actual read, all within a loop). If you need more precise timing, then we should talk about getting a counter to trigger the DMM at the specified rate, and then you read the last triggered value from the DMM.

There are only two ways to tell somebody thanks: Kudos and Marked Solutions

Unofficial Forum Rules and Guidelines

"Not that we are sufficient in ourselves to claim anything as coming from us, but our sufficiency is from God" - 2 Corinthians 3:5

07-21-2016 01:13 PM

Thanks, I will try out your solution!

07-21-2016 01:16 PM

Well, this is the thing. For my current purpose I think the wait is probably accurate enough. In reality the multimeter is being used as a thermometer for a cryogenic setup, and it is just so I have a rough idea of how long the system takes to heat up and cool down.

But I was asking for future cases where I need the time interval between measurements to be exactly known. Also, I think it's just good practice to be as accurate as you can, especially if it requires only a little extra effort.

07-21-2016 01:26 PM

@quantisedpenguin wrote: Well, this is the thing. For my current purpose I think the wait is probably accurate enough. In reality the multimeter is being used as a thermometer for a cryogenic setup, and it is just so I have a rough idea of how long the system takes to heat up and cool down.

But I was asking for future cases where I need the time interval between measurements to be exactly known. Also, I think it's just good practice to be as accurate as you can, especially if it requires only a little extra effort.

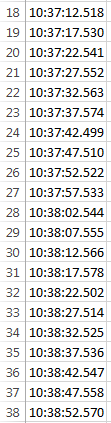

Here's a sample of my time stamps in Excel.

As you can see, the 5-second measurement interval varies by <100 ms.
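If you want to run the same check on your own log, a small Python sketch like the one below computes how far each interval deviates from the nominal value. The function name `interval_deviations` is hypothetical, and the time stamps are made up for illustration; they are not the values from the screenshot.

```python
def interval_deviations(timestamps_s, nominal_s):
    """Return (actual interval - nominal interval) for each consecutive pair."""
    intervals = [b - a for a, b in zip(timestamps_s, timestamps_s[1:])]
    return [dt - nominal_s for dt in intervals]


# Made-up time stamps in seconds, for illustration only.
stamps = [0.000, 5.020, 10.050, 15.030, 20.090]
devs = interval_deviations(stamps, nominal_s=5.0)
worst_ms = max(abs(d) for d in devs) * 1000
print(f"worst-case deviation: {worst_ms:.0f} ms")
```

Looking at the deviation per interval, rather than only the total elapsed time, separates jitter from accumulated drift.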
