Wait functions in while loops and their relation to timed measurements

07-21-2016 01:30 PM - edited 07-21-2016 01:31 PM


@quantisedpenguin wrote:

But my question is does the wait, and the actual code which collects the data, start at the same time? Or does it end up being:

code compilation time + the wait function time.

Finally, in the case that both the wait and the LabVIEW code are executed at the same time, to what resolution/accuracy can we say that the time between measurements is 1000 ms?

You already got some good answers, so let me just clarify some terminology again.

- Code is always compiled. There is no "compilation" at run time. Even the wait is part of the compiled code.

- Dataflow dictates that all code inside the while loop must complete before the loop can go to the next iteration. All independent code fragments run in parallel, so the loop time is determined by the slowest element. This assumes that everything else is fast: 1 s is almost infinitely long from a computer's perspective, but if communication with an instrument or file I/O is involved, there are outside factors to consider.

- The slowest part of the loop code is probably the wait, so that will determine the loop rate.

- For utmost regularity (sounds like a TV commercial!) the "Wait Until Next ms Multiple" function is preferred, but you need to be aware that the first iteration will have a random duration (0..1 s) unless you take certain precautions.

- At this point I would still implement it as a producer-consumer design pattern so you can isolate the instrument-related code from the UI. A 1 s wait makes the UI rather sluggish if your code is monolithic.
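For readers who think in text code, the producer-consumer idea from the last point can be sketched roughly as below. This is only a Python analogy, not LabVIEW: `read_instrument`, `run`, the 10 ms period and the sample count are all made-up placeholders. The producer does nothing but acquire and enqueue on a fixed schedule; anything slow (display, logging) belongs in the consumer.

```python
import queue
import threading
import time


def read_instrument():
    """Hypothetical stand-in for the real instrument read."""
    return 4.2


def producer(out_queue, period_s, n_samples):
    """Acquire n_samples at a fixed period and hand them to the consumer."""
    next_deadline = time.monotonic()
    for _ in range(n_samples):
        out_queue.put((time.monotonic(), read_instrument()))
        next_deadline += period_s
        # Sleep until an absolute deadline so small delays do not accumulate.
        time.sleep(max(0.0, next_deadline - time.monotonic()))
    out_queue.put(None)  # Sentinel: tells the consumer that acquisition is done.


def consumer(in_queue, results):
    """Drain the queue; display or logging code would live here."""
    while True:
        item = in_queue.get()
        if item is None:
            break
        results.append(item)


def run(period_s=0.01, n_samples=5):
    """Start both loops, wait for them to finish, return the collected samples."""
    q = queue.Queue()
    results = []
    t_prod = threading.Thread(target=producer, args=(q, period_s, n_samples))
    t_cons = threading.Thread(target=consumer, args=(q, results))
    t_cons.start()
    t_prod.start()
    t_prod.join()
    t_cons.join()
    return results
```

Calling `run()` returns a list of `(timestamp, value)` pairs; the consumer can be as slow as it likes without disturbing the acquisition schedule, as long as the queue does not grow without bound.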

07-21-2016 01:32 PM


@RTSLVU wrote:

@quantisedpenguin wrote: Well, this is the thing. For my current purpose I think the wait is probably accurate enough. In reality the multimeter is being used as a thermometer for a cryogenic setup, and it is just so I have a rough idea of how long the system takes to heat up and cool down.

But I was asking for future cases where I need the time interval between measurements to be exactly known. Also, I think it's just good practice to be as accurate as you can, especially if it requires only a little extra effort.

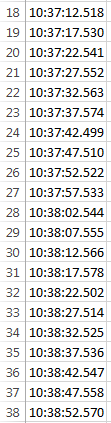

Here's a sample of my time stamps in Excel.

As you can see, the 5-second measurement interval varies by <100 ms.

Oh wow, okay, so it is pretty accurate. Can this be extended to reduce the error bars even further? Like I said, I think this has more than enough accuracy for my original purpose, but eventually I will need measurements that have a very clear time interval, or at least a way of accurately reporting the time each loop iteration took.
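On the "at least report the time each iteration took" part: whatever the loop does, it can record when each measurement actually started and log the real interval instead of assuming the nominal one. A minimal Python sketch of that idea follows (an analogy, not LabVIEW; `timed_loop` and its parameters are hypothetical names, and `clock` and `sleep` are injectable only so the logic can be checked without real waiting). Note that here the wait runs after the measurement rather than in parallel with it, so the real period is measurement time plus wait, which is exactly why the measured interval is worth logging.

```python
import time


def timed_loop(n_iterations, period_s, measure,
               clock=time.perf_counter, sleep=time.sleep):
    """Call measure() n_iterations times and record when each call started.

    Returns a list of (start_time, interval_since_previous_start, value).
    """
    records = []
    previous_start = None
    for _ in range(n_iterations):
        start = clock()
        value = measure()
        # The first iteration has no predecessor, so its interval is None.
        interval = None if previous_start is None else start - previous_start
        records.append((start, interval, value))
        previous_start = start
        sleep(period_s)
    return records
```

Each record then carries its own measured spacing, so later analysis can use the real time axis even when the loop period jitters.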

07-21-2016 01:36 PM


@quantisedpenguin wrote:

Oh wow, okay, so it is pretty accurate. Can this be extended to reduce the error bars even further? Like I said, I think this has more than enough accuracy for my original purpose, but eventually I will need measurements that have a very clear time interval, or at least a way of accurately reporting the time each loop iteration took.

Well, you could by shortening the 10 ms wait in the timer loop.

BUT you do NOT want to use a 0 wait in a loop, because LabVIEW will grab all the computer's resources and peg the CPU at nearly 100% just to spin that loop. Placing a delay even as small as 1 ms allows Windows to do its time slicing and multitasking without making everything else sluggish.

Remember, though, that Windows is NOT a real-time operating system, so timing is never going to be super accurate to begin with.

=== Engineer Ambiguously ===

========================

07-21-2016 01:40 PM - edited 07-21-2016 02:10 PM


@RTSLVU wrote: Placing a delay even as small as 1 ms allows Windows to do its time slicing and multitasking without making everything else sluggish.

Actually, even a 0 ms wait will allow a thread switch and will make things more fair. (CPU usage will still be high, of course ;))

07-21-2016 02:04 PM - edited 07-21-2016 02:05 PM


For accurately spaced samples, use hardware-timed acquisitions.

If you have a clock source available, timed loops are impressive even on a Windows machine (provided you don't have anything silly going on in the background).

Re: Wait Until Next ms Multiple

I never use them because in larger applications they may not start timing when we would hope. Say you use 1000 ms to run once a second. Then, due to something else going on in the PC, the code does not get at the CPU for 1001 ms. The next multiple would be two seconds, not one second.
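The arithmetic behind that skipped beat is easy to see in a few lines. This is just an illustrative Python sketch of "round up to the next multiple of the millisecond timer"; `next_multiple_ms` is a made-up name, and treating an exact hit as "wait for the following multiple" is my assumption, not something stated in this thread.

```python
def next_multiple_ms(now_ms, multiple_ms):
    """Return the first multiple of multiple_ms strictly after now_ms.

    Assumption: landing exactly on a multiple waits for the following one.
    """
    return (now_ms // multiple_ms + 1) * multiple_ms


# Loop gets the CPU just in time: wakes at the 1 s mark.
on_time = next_multiple_ms(999, 1000)   # -> 1000

# Loop gets the CPU 1 ms late: the 1 s mark is gone, so it wakes at 2 s.
late = next_multiple_ms(1001, 1000)     # -> 2000

# Same effect on the first iteration: starting at 350 ms waits only 650 ms.
first_wait = next_multiple_ms(350, 1000) - 350   # -> 650
```

So a 1 ms overrun costs a whole period, and the first iteration's length depends on where the timer happened to be when the loop started.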

And while I am at it...

Unless you are using all unique prime number delays, the "...ms Multiple" waits in multiple loops will result in all of the threads waking up and competing for the CPU at the same time.
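To put numbers on that: two loops aligned to multiples of the same millisecond timer wake together at every common multiple of their periods, i.e. every least common multiple. A small Python sketch (the function name and the example periods are mine, purely for illustration):

```python
from math import gcd


def coincidence_interval_ms(period_a_ms, period_b_ms):
    """Interval at which two multiple-aligned loops wake at the same instant."""
    return period_a_ms * period_b_ms // gcd(period_a_ms, period_b_ms)


# Round periods collide often: 100 ms and 250 ms meet every 500 ms.
round_periods = coincidence_interval_ms(100, 250)    # -> 500

# Distinct primes collide rarely: 101 ms and 251 ms meet every 25351 ms.
prime_periods = coincidence_interval_ms(101, 251)    # -> 25351
```

With the usual round numbers (100, 250, 500, 1000 ms) every loop lands on the same tick regularly, which is the pile-up described above.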

Re: 0 ms waits

As Christian wrote, they will allow the CPU to be released to let other threads run. The CPU will get hammered, but it will be getting hammered by multiple threads and not just one.

If I have to do heavy number crunching and I need all of the CPU I can get, but at the same time want to allow other things to progress, I will drop a 0 ms wait in the number-crunching loops.
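A loose text-language analogy of that trick, for what it's worth: in CPython, `time.sleep(0)` releases the interpreter lock and gives other threads a chance to run, without adding a real delay. This is only an analogy to LabVIEW's 0 ms wait, not the same mechanism, and `crunch` and `yield_every` are hypothetical names.

```python
import time


def crunch(values, yield_every=1000):
    """Sum of squares that periodically offers the CPU to other threads."""
    total = 0
    for i, v in enumerate(values, start=1):
        total += v * v
        if i % yield_every == 0:
            # Zero-length sleep: no intended delay, just a chance to switch threads.
            time.sleep(0)
    return total
```

The loop still uses as much CPU as it can get; it just stops monopolising it.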

Just my 2 cents,

Ben
