Weird timing peaks in Timed Loop on RT

Solved! 09-20-2019 08:05 AM

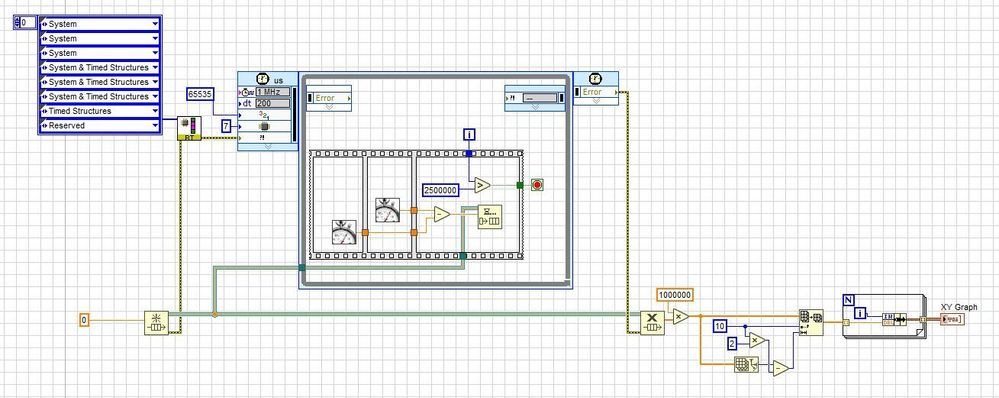

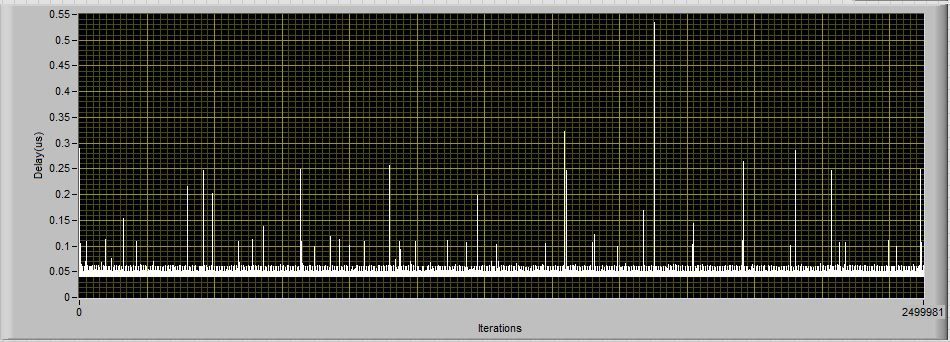

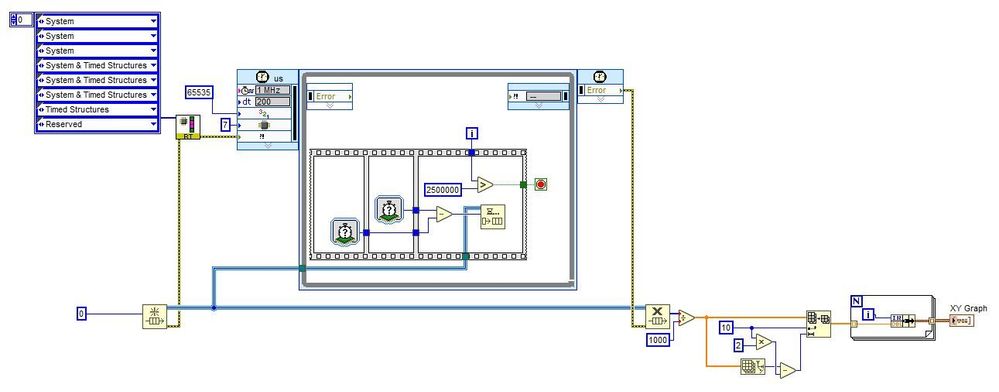

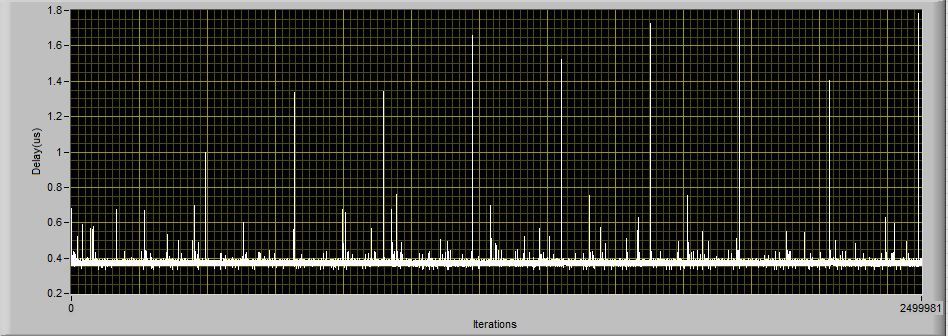

For a project where delay is very important, we see weird behavior in our timed loop. We measure the execution time of our business logic, and we see periodic peaks in the timing that grow the longer the program runs. In the loop shown in the attached images (a stripped-down version of what we're actually trying to achieve) we simply measure the time our business logic needs to execute in each iteration; in this example we removed the business logic to pinpoint the problem. Every 2^18 (262144) iterations we see a peak, and each peak is larger than the last.

We ran two different tests, one with High Resolution Relative Seconds and one with Tick Count (ticks), but we see the same behavior in both.

This is the one with the High Resolution Relative Seconds

And this is the one with the Tick Count (ticks)

Can anyone explain where these peaks come from and how to eliminate them? This problem is currently blocking the project.

This project is developed in LV2019 and runs on a PXI 8880 with Linux RT.
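For readers without LabVIEW at hand, the measurement described above can be sketched in text form. This is only a rough Python analogue, not the VI from the post: `measure_loop`, `find_peaks`, and `peak_spacing` are hypothetical helper names, and `time.perf_counter_ns` stands in for High Resolution Relative Seconds. The log is preallocated so that recording the timings does not itself resize a buffer.

```python
import time


def measure_loop(iterations, work=lambda: None):
    """Return the execution time of each iteration in nanoseconds."""
    # Preallocate the log so the list is never resized while measuring.
    durations = [0] * iterations
    for i in range(iterations):
        start = time.perf_counter_ns()
        work()  # placeholder for the business logic (empty, as in the post)
        durations[i] = time.perf_counter_ns() - start
    return durations


def find_peaks(durations, threshold_ns):
    """Return the iteration indices whose duration exceeds threshold_ns."""
    return [i for i, d in enumerate(durations) if d > threshold_ns]


def peak_spacing(peak_indices):
    """Return the distance in iterations between consecutive peaks."""
    return [b - a for a, b in zip(peak_indices, peak_indices[1:])]
```

If the spacing between peaks comes out as a constant such as 2**18 = 262144, that points to something tied to the iteration count (for example a buffer that fills up) rather than to random OS jitter.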

- Tags:

- Linux

- RT

- timed loop

09-20-2019 09:04 AM

You may want to use a fixed-size queue: pre-fill it, then empty it BEFORE you start the timed loop.

As it stands now, LV has to allocate more space for your queue whenever the existing memory allocation fills up.

Ben
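To illustrate why an unbounded queue could produce peaks at a fixed interval that keep growing, here is a small Python model. It is a sketch under stated assumptions, not a description of LabVIEW's actual allocator: it assumes the buffer grows by a fixed chunk (2^18 elements, chosen only to match the period reported in the question) and that each growth copies all existing elements. `growth_events` is a hypothetical helper.

```python
CHUNK = 2 ** 18  # 262144; assumed growth step, chosen to match the reported period


def growth_events(total_enqueues, chunk=CHUNK, preallocated=0):
    """Return (enqueue_number, elements_copied) for every buffer growth.

    Assumed model: when the buffer is full it grows by `chunk` elements
    and all existing elements are copied into the new allocation.
    """
    capacity = preallocated
    events = []
    for n in range(1, total_enqueues + 1):
        if n > capacity:
            # The n-1 elements already stored must be moved to the new block.
            events.append((n, n - 1))
            capacity += chunk
    return events


# Growing on demand: one event per chunk, each copying more than the last.
print(growth_events(10, chunk=4))
# Preallocated to the final size: no growth inside the loop at all.
print(growth_events(10, chunk=4, preallocated=10))
```

In this model the copy cost rises with every growth, which would look like evenly spaced peaks that get taller over time. Preallocating the full capacity before the loop starts moves that cost out of the time-critical section.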