Why is C code so much faster? (Image Processing)

Solved! 04-07-2016 11:04 AM

Hello!

I am working on a larger application, but I have reduced it to show you a problem I ran into at the very beginning. I have attached all the files needed to run it.

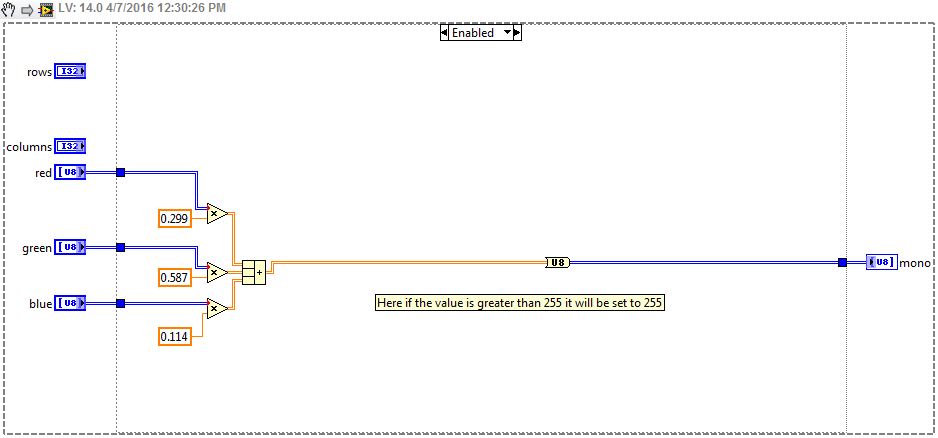

This is a simple rgb2monochrome algorithm that I implemented both in C and in LabVIEW. I don't know why the C code is 4 times faster.

In the C code I pass the arrays by pointer, so I work directly on one region of memory. I thought LabVIEW might be slower because it creates unnecessary copies of the arrays. I tried to fix this with the In Place Element Structure and Data Value References, but it had no effect.
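For readers without the attachment, the pointer-based C routine described above might look roughly like the sketch below. It is not the original cpp file: the function name `rgb_to_mono`, the separate R/G/B planes, and the ITU-R BT.601 luma weights are all assumptions made for illustration.

```c
#include <assert.h>
#include <stddef.h>
#include <stdint.h>

/* Assumed luma weights (ITU-R BT.601); the thread does not state the
   coefficients used in the original cpp file. */
#define W_R 0.299
#define W_G 0.587
#define W_B 0.114

/* Hypothetical baseline: convert three rows*cols colour planes into one
   monochrome plane. All buffers are owned by the caller and are accessed
   through pointers, so nothing is copied or allocated here. */
void rgb_to_mono(const uint8_t *r, const uint8_t *g, const uint8_t *b,
                 uint8_t *mono, size_t rows, size_t cols)
{
    assert(r != NULL && g != NULL && b != NULL && mono != NULL);
    for (size_t y = 0; y < rows; ++y) {
        for (size_t x = 0; x < cols; ++x) {
            size_t i = y * cols + x;           /* row-major index */
            double v = W_R * r[i] + W_G * g[i] + W_B * b[i];
            if (v > 255.0)                     /* explicit clamp before narrowing */
                v = 255.0;
            mono[i] = (uint8_t)(v + 0.5);      /* round to nearest */
        }
    }
}
```

A C compiler is free to unroll or vectorize the inner loop, which is one plausible reason a per-pixel loop costs less in C than the same per-element diagram in LabVIEW.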

I also tried changing tunnels into shift registers in some places (as I read in Optimizing LabVIEW Embedded Applications). I changed the options in the Execution Properties of the subVIs, and I built an EXE application to see if it would be faster. Unfortunately I still can't reduce the difference between the execution times.

I know that my algorithm could be better optimized, but that is not the main problem. Right now both algorithms are implemented the same way (you can check the cpp file), so they should have similar execution times. I think I made a mistake in the LabVIEW code, maybe something with memory management?

And I have one more idea... Maybe there is nothing wrong with the LabVIEW code but something too good in the C code :P? It is a 64-bit library, implemented in the normal way without forcing parallelism. Moreover, I selected Run in UI thread in the CLFN. But maybe the CPU runs this function on multiple cores anyway? I have 4 cores, so then the difference in execution times would make sense :P. But that is impossible, right?

I have seen opinions that LabVIEW is sometimes slower, but by about 15%, not 4 times. So I must have made some mistake... Does anyone know what kind :)?

Regards,

ksiadz13

04-07-2016 11:30 AM - edited 04-07-2016 11:33 AM

Well, here are a few things that will make the LabVIEW code faster. There are other improvements that could be made, but I'd need to do more testing to see if they are better based on your input data. First, I'd make the VI run inlined, not as a subroutine. I'd also work on the arrays of data instead of the scalar values. Also, you already know the number of rows and columns because it is the array size, so why keep that information separately? Oh, and if you are working with for loops you may want to try parallel for loops to work on multiple CPUs at once.

I'm also not sure all of this work is necessary anyway. If you have a double and turn it into a U8, it will be 255 if the value was greater than that to start with. Attached is an updated version with multiple possible options to try.

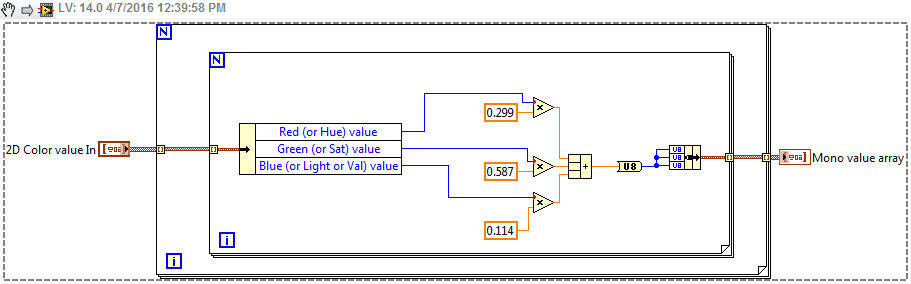

Oh, and another improvement: why are you even unbundling the data in Unbundle_imageCPU1.vi? Why not just work with that 2D array of red, green, and blue? I realize the same VI is called in both the C and LabVIEW code options, but having LabVIEW deal just with the other data might make it faster overall, especially given that you bundle it back after you are done anyway.

Unofficial Forum Rules and Guidelines

Get going with G! - LabVIEW Wiki.

17 Part Blog on Automotive CAN bus. - Hooovahh - LabVIEW Overlord

04-07-2016 11:39 AM - edited 04-07-2016 11:40 AM

Here is the simplified version, which just takes the 2D array of RGB and turns it into the 2D array of mono. Doesn't IMAQ have functions that do this?

EDIT: Forgot to turn on parallel for loops. It might or might not help in these cases, since each cycle of the loop is going to be very quick.


04-07-2016 12:06 PM - edited 04-07-2016 12:13 PM

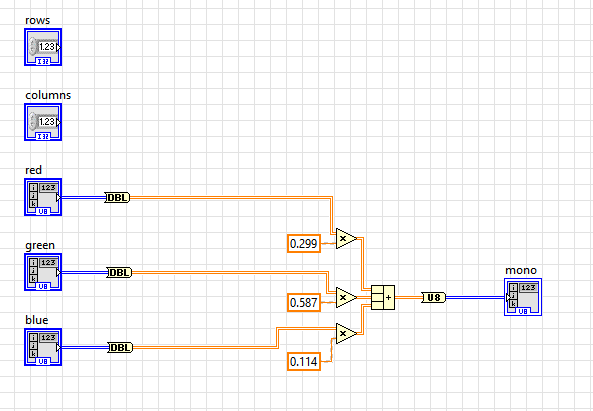

My guess is that the C compiler optimizes the data types during compilation. In your conversion subroutine, if you move the type conversions outside the for loops and do the whole array at once, the times should be more comparable. To be honest, you don't need the for loops at all in LabVIEW.

04-07-2016 01:41 PM

The non-loop code shown by Hooovahh and Randall Pursley is more than 8 times faster than the code in your VI. I cannot run your C code but based on your speed estimates, this LabVIEW code should beat the C code.

Lynn

04-08-2016 06:01 AM - edited 04-08-2016 06:24 AM

Thank you all for taking the time to help me! There is a lot of information. I will try to comment on all of it, but I will start with the most important point, the one that solved the problem.

Almost all of you suggested removing the double for loops and operating on whole arrays. So I was right... I made a basic mistake :P. Now the code is much faster! It is almost as fast as the C code, and it may get even better with the rest of your advice. Thank you!

I have attached a comparison for the curious, because johnsold was not able to run my DLL.

But please tell me, why is the difference so big? What about algorithms that cannot be implemented without access to single elements, for example filters with a square mask? Do you have any advice on how to implement them efficiently?

Without for loops the algorithm is faster than the version with parallel for loops on 2 cores (I tested that earlier). So how can I use multiple cores to make the calculation faster? Is it a good idea to split one matrix into X matrices, where X is the number of cores, and then place the loop-free algorithm inside a single for loop with parallelism enabled?
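On the C side, the "one block of rows per core" idea asked about above can be sketched with OpenMP. This is an illustration only, not code from the attachment: the name `rgb_to_mono_parallel`, the planar layout, and the BT.601 weights are assumptions, and whether the same split pays off in LabVIEW would have to be measured.

```c
#include <assert.h>
#include <stddef.h>
#include <stdint.h>

/* Hypothetical parallel variant: with a static schedule, each thread
   receives one contiguous block of rows, which mirrors splitting the
   matrix into one sub-matrix per core. */
void rgb_to_mono_parallel(const uint8_t *r, const uint8_t *g,
                          const uint8_t *b, uint8_t *mono,
                          size_t rows, size_t cols)
{
    assert(r != NULL && g != NULL && b != NULL && mono != NULL);
    #pragma omp parallel for schedule(static)
    for (long y = 0; y < (long)rows; ++y) {
        const size_t base = (size_t)y * cols;  /* first pixel of this row */
        for (size_t x = 0; x < cols; ++x) {
            const size_t i = base + x;
            /* Assumed BT.601 luma weights. */
            double v = 0.299 * r[i] + 0.587 * g[i] + 0.114 * b[i];
            if (v > 255.0)
                v = 255.0;
            mono[i] = (uint8_t)(v + 0.5);      /* round to nearest */
        }
    }
}
```

With GCC or Clang this needs `-fopenmp`; without the flag the pragma is ignored and the loop simply runs serially. Because every output pixel is written by exactly one thread, no locking is required. For very small images the cost of starting threads can exceed the work itself, which is consistent with the observation that parallel loops did not help here.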

@Hooovahh wrote:

Oh and if you were working with for loops you may want to try parallel for loops to work on multiple CPUs at once.

I know about that. I didn't mention that my whole application will be used to compare the efficiency of a single-core CPU, a multi-core CPU, and a GPU.

@Hooovahh wrote:

First I'd make the VI run inlined, not subroutine.

I tried this option earlier. It was not a good idea, because mine is a typical research application and the for loop in main.vi is only there to reduce measurement inaccuracy. When I want to use inline execution I must also use the reentrant option. Then the execution time is extremely short, but only because the CPU uses multithreading to execute all the tasks. That means the loop iterations are not handled one by one, so the average time is not accurate. Am I right?

@Hooovahh wrote:

And also I'm not sure all of this work is necessary anyway. If you have a double and turn it into a U8, it will be 255 if the value was greater than that to start with.

I thought that when a value is converted to another type, values outside the range wrap around to the beginning (for example, 256 becomes 0). That is what happens when a calculation result exceeds the capacity of the type (for example, 16^2 in uint8). If in this case the value is always clamped to the maximum instead, I can indeed remove this condition.

After removing it I made a comparison. Without the if statement the resulting images are the same. Thanks!

@Hooovahh wrote:

Doesn't IMAQ have functions that do this?

Maybe it does, but for me this is just a simple algorithm for a first experiment.

@Hooovahh wrote:

Here is the simplified version, which just takes the 2D array of RGB and turns it into the 2D array of mono.

Unbundling without the double for loops should be faster, because then transferring 3 matrices isn't necessary. I should explain why I did it differently. Ultimately I will be comparing the efficiency of pattern detection algorithms, not image processing algorithms. In pattern detection the final image isn't needed; I only want the coordinates of the pattern. That is why I didn't want to include the time for bundling the final image in the measurement. I want to show that a GPU can be really useful for pattern detection. With that device, transferring a picture between CPU memory and GPU memory is very slow.

But I can still use your idea just for the unbundling. Thanks!

@johnsold wrote:

The non-loop code shown by Hooovahh and Randall Pursley is more than 8 times faster than the code in your VI.

That much faster? I got 4 times faster. I did exactly what I see in pursley's picture. Could it be a hardware difference?

04-08-2016 07:00 AM

I am running on a Mac, which is why the .dll code does not work. That may also partly explain the speed differences. The arrays I used for testing were smaller. I would expect this code to scale with the size of the arrays, but some nonlinearities may exist.

Lynn

04-08-2016 08:39 AM

@ksiadz13 wrote:

First I'd make the VI run inlined, not subroutine.

I tried this option earlier. It was not a good idea, because mine is a typical research application and the for loop in main.vi is only there to reduce measurement inaccuracy. When I want to use inline execution I must also use the reentrant option. Then the execution time is extremely short, but only because the CPU uses multithreading to execute all the tasks. That means the loop iterations are not handled one by one, so the average time is not accurate. Am I right?

Not entirely. Basically, if a VI can be inlined, doing so will give the best performance. When a subVI is called there is some overhead for passing data to the call and passing data back from it. An inlined VI works as if the code in the subVI were actually in the calling VI, so that overhead is gone. Generally it works best for small VIs that are called often and return quickly, so maybe it isn't all that important here.

http://zone.ni.com/reference/en-XX/help/371361H-01/lvconcepts/vi_execution_speed/

@ksiadz13 wrote:

And also I'm not sure all of this work is necessary anyway. If you have a double and turn it into a U8, it will be 255 if the value was greater than that to start with.

I thought that when a value is converted to another type, values outside the range wrap around to the beginning (for example, 256 becomes 0). That is what happens when a calculation result exceeds the capacity of the type (for example, 16^2 in uint8). If in this case the value is always clamped to the maximum instead, I can indeed remove this condition.

After removing it I made a comparison. Without the if statement the resulting images are the same. Thanks!

Just test it: given 256, it turns into 255, not 0. When I'm unsure how a function works with a set of inputs, I test it and read the documentation.
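The C side behaves differently, which may be where the wrap-around expectation came from. Narrowing an `int` to `uint8_t` wraps modulo 256, and converting an out-of-range `double` directly to `uint8_t` is undefined behavior in C, so a C version has to clamp explicitly. A minimal sketch follows; the helper names are hypothetical, and mapping NaN to 0 and rounding exact halves upward are choices made here, not documented LabVIEW behavior.

```c
#include <assert.h>
#include <stdint.h>

/* Hypothetical helper: saturating double -> U8 conversion, similar in
   spirit to the coercion described above. */
uint8_t saturate_to_u8(double v)
{
    if (!(v > 0.0))                 /* negatives, zero and NaN map to 0 */
        return 0;
    if (v >= 255.0)                 /* everything at or above the maximum */
        return 255;
    return (uint8_t)(v + 0.5);      /* in range: round to nearest */
}

/* Integer narrowing in C wraps modulo 256 instead of saturating. */
uint8_t wrap_to_u8(int v)
{
    return (uint8_t)v;
}

/* Quick self-check of the two behaviours. */
void check_conversions(void)
{
    assert(saturate_to_u8(256.0) == 255);   /* saturates */
    assert(wrap_to_u8(256) == 0);           /* wraps */
}
```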

Basically, experiment. I've often made a test VI to measure execution speed, then changed one thing to see if it gets better or worse with my typical input. LabVIEW's compiler is really smart, and as a result it may optimize things in ways you don't expect, which can result in faster execution than other languages. Of course, since it is a higher-level language, this isn't always the case, and to get these fast executions you need to know a few things about what makes the compiler work better.

Some of that is doing things like using the native functions on arrays instead of pulling out each item one at a time. Some of it is tribal knowledge, knowing what makes LabVIEW work faster or slower, and some is just experimenting with different ways of doing things. That is why my VI had a disabled case with 3 different ways to do the same thing: I wasn't sure which would be faster with your typical input.
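The same "change one thing and measure" approach applies to the C side of the comparison. Below is a minimal timing harness, offered as a sketch: `average_ms` and `bump_counter` are hypothetical names, and the workload is a stand-in for the real conversion routine.

```c
#include <assert.h>
#include <stddef.h>
#include <time.h>

/* Hypothetical helper: call fn(ctx) `iterations` times back to back and
   return the average processor time per call in milliseconds. */
double average_ms(void (*fn)(void *), void *ctx, int iterations)
{
    assert(fn != NULL && iterations > 0);
    clock_t start = clock();
    for (int i = 0; i < iterations; ++i)
        fn(ctx);                    /* calls run strictly one after another */
    clock_t stop = clock();
    return 1000.0 * (double)(stop - start) / CLOCKS_PER_SEC / iterations;
}

/* Stand-in workload for illustration: counts how often it was called. */
void bump_counter(void *ctx)
{
    ++*(int *)ctx;
}
```

A call such as `average_ms(bump_counter, &n, 100)` averages over 100 sequential runs. Note that `clock()` reports processor time; on POSIX systems that is summed over all threads, so a wall-clock timer is the better choice when the code under test is parallel.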


04-10-2016 03:37 PM

I understand what inline execution is. But to use this option I must also use the Preallocated clone reentrant execution option, which works as I already described. With this option the average execution time for a 1024x1024 picture is about 26.5 ms for one iteration and 0.3 ms for 100 iterations :P.

Thank you for solving my problem and for all the extra advice, which may be really helpful to me. I accepted the first reply as the solution because that tip helped me the most in reducing the execution time.

04-10-2016 07:12 PM

One of the big expenses is the operations on DBLs. All values are quantized to 256 possibilities, so instead of all these multiplications, all you need is a tiny LUT (lookup table) for each color that gives a U8 result for all 256 possible multiplications. This keeps everything in U8. I am sure it would be faster.
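The lookup-table idea can be sketched in C as follows. This is an illustration under stated assumptions rather than a tested replacement for the VI: the `MonoLut` type, the function names, and the BT.601 weights are all hypothetical.

```c
#include <assert.h>
#include <stddef.h>
#include <stdint.h>

/* One 256-entry table per colour channel, each holding the pre-multiplied
   and rounded contribution of that channel. */
typedef struct {
    uint8_t r[256];
    uint8_t g[256];
    uint8_t b[256];
} MonoLut;

/* Fill the tables once; the weights are assumed BT.601 luma coefficients. */
void mono_lut_init(MonoLut *lut)
{
    assert(lut != NULL);
    for (int i = 0; i < 256; ++i) {
        lut->r[i] = (uint8_t)(0.299 * i + 0.5);
        lut->g[i] = (uint8_t)(0.587 * i + 0.5);
        lut->b[i] = (uint8_t)(0.114 * i + 0.5);
    }
}

/* Per pixel: three table reads and two additions, no floating point.
   The largest possible sum is 76 + 150 + 29 = 255, so it fits in a U8. */
void rgb_to_mono_lut(const MonoLut *lut, const uint8_t *r, const uint8_t *g,
                     const uint8_t *b, uint8_t *mono, size_t n)
{
    assert(lut != NULL && r != NULL && g != NULL && b != NULL && mono != NULL);
    for (size_t i = 0; i < n; ++i)
        mono[i] = (uint8_t)(lut->r[r[i]] + lut->g[g[i]] + lut->b[b[i]]);
}
```

Because each channel is rounded separately, a result can differ from the floating-point version by a grey level or so. Whether that is acceptable depends on the application.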