search array for negative values and offset by single value that changes

03-17-2023 03:09 PM

Hi there, I've been struggling with this all day. I'm sure there are multiple ways to do this, but I can't figure it out.

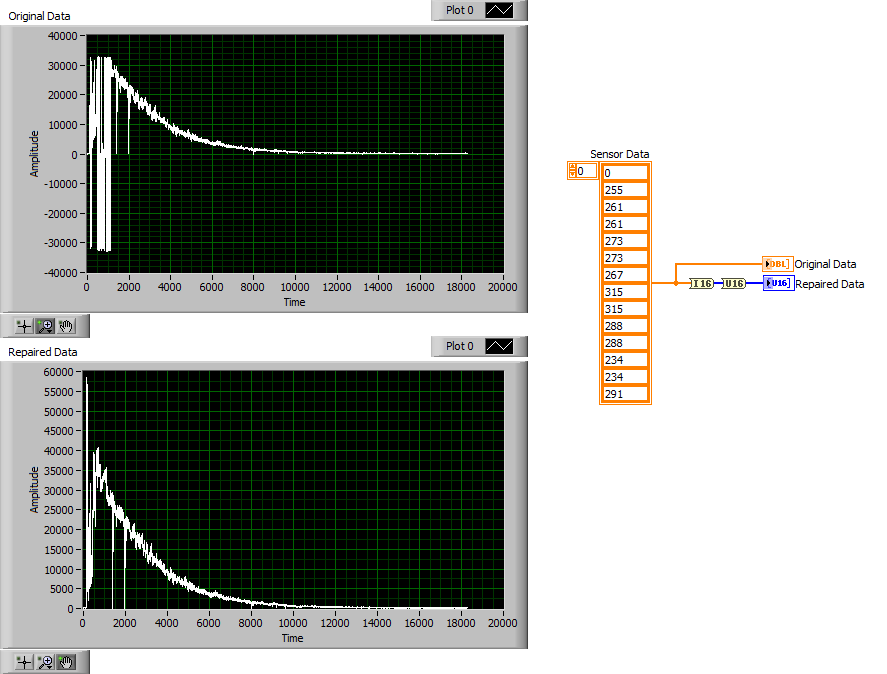

I have a 1D array of data (raw particle counts) collected from an air particle detector after the discharge of a firearm. This is related to forensics and gunshot residue research being done at the National Institute of Standards and Technology (NIST). The detector collects a sample every second. The data show a very strange offset in some parts of the array: it almost looks like the signal flipped over the x-axis, but it didn't flip. It just offset by some value and continued collecting data, then jumped back up above the x-axis and continued. I believe the shockwave generated by the firearm is goofing up the sensor, but that is beside the point; we have to deal with the data we have. It is very clear what is happening if you zoom in around the 120 to 220 second mark.

I've been trying to write code that scans through the array, identifies any negative number, and then fixes the issue by adding an offset based on the last positive data point before the values go negative. I can visualize what I want to do, but I cannot figure out how to implement it. I'm close, but the problem I can't get around is locking in the last positive data point before the values go negative (the "pre-negative" value), applying that offset to the following run of points, and then moving on to the next chunk of negative points (often with a different pre-negative value).

The sensor sometimes drops out for a second and gives a zero value and I know to remove those.

Thanks so much for your time. I'd appreciate any comments that help push me in the right direction.

Many thanks!

-Matt Staymates

National Institute of Standards and Technology

03-17-2023 03:39 PM - edited 03-17-2023 03:50 PM

It looks like your data is U16, but you were reading it as a signed integer (I16) before converting to DBL.

Try the following:
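The suggested fix amounts to reinterpreting each 16-bit value as unsigned before converting to double. As a rough text-language illustration of that idea (not the LabVIEW code from this post), here is a minimal Python sketch. It assumes the raw samples are available as Python ints that were read as I16; the function name `i16_to_u16` is hypothetical.

```python
def i16_to_u16(samples):
    """Reinterpret values that were read as signed 16-bit (I16) as unsigned (U16).

    Masking with 0xFFFF keeps the low 16 bits of Python's two's-complement
    representation, so the bit pattern is unchanged and only its meaning
    differs: -32768 -> 32768, -1 -> 65535, non-negative values unchanged.
    """
    out = []
    for v in samples:
        if not -32768 <= v <= 32767:
            raise ValueError(f"{v} is outside the I16 range")
        out.append(float(v & 0xFFFF))  # U16 value, then convert to double
    return out

# Hypothetical samples straddling the wrap-around point.
raw = [32760, 32767, -32768, -32700, 5]
print(i16_to_u16(raw))
# [32760.0, 32767.0, 32768.0, 32836.0, 5.0]
```

Equivalently, every negative I16 reading is just the U16 value minus 65536, so adding 65536 to the negatives gives the same result. No per-segment "pre-negative" offset is needed.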

03-17-2023 03:44 PM

The Good News is that there are a number of experienced LabVIEW Developers at NIST in Gaithersburg (send me a Private Message if you don't know any of them); one of the best and fastest ways of learning LabVIEW is to show and discuss Code That Doesn't Work.

I think this topic is an example of Engineers vs. Scientists. My first reaction to your situation is "Something is broken in the Data Acquisition: how were these data acquired, and how do we know all the values are 'signal', not 'noise'?"

Something that will really help us to understand your situation and come up with reasonable hypotheses for "Where's the Problem?" (or, as some say, "Where's the Problem At?") is to attach a compressed (Zipped) copy of the Folder holding your entire LabVIEW Project (so we can see all of the code), and also to attach a copy of the data you are trying to analyze. The easiest way to do this is to stop your program at the point where you have an indicator filled with your data (which I assume you can "accumulate" in an Array somewhere). When you have such an Array with data, select the Array and in the Edit menu, choose "Save Selected as Default", and tell us which sub-VI has this Array-with-data.

Bob Schor

03-17-2023 04:08 PM - edited 03-17-2023 04:21 PM

[To the surprise of no one, altenbach was fastest and most concise with a solution. Here's a slower and less concise one I was in the process of writing when your other responses came through.]

Before you start "correcting" raw data, it's really *really* important to have a rock-solid reason for it. Especially when you're working at NIST!

Here's a solid-as-one-year-old-bread reason: somewhere in the chain of things, there's an improper treatment of 16-bit integers.

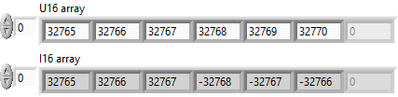

I noted the suspicious proximity to I16_min (-32768) and I16_max (32767) in the graph so I pumped your original array into a simple Array Min & Max function.

Max value in dataset: 32745

Min value in dataset: -32767

Pretty suspiciously close to I16 limits, don't you think?
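The same min/max sanity check can be written in a few lines of Python. This is a hypothetical heuristic, not code from the thread: `near_i16_limits` and its `margin` tolerance are illustrative choices. If both extremes of a dataset sit within a few counts of the I16 limits, a signed/unsigned mix-up is worth ruling out before any "correction" is applied.

```python
I16_MIN, I16_MAX = -32768, 32767

def near_i16_limits(data, margin=100):
    """Return (min, max, suspicious) for a dataset.

    `margin` is a hypothetical tolerance: if both extremes land within
    `margin` counts of the I16 limits, the data may have wrapped around.
    """
    lo, hi = min(data), max(data)
    suspicious = (lo - I16_MIN <= margin) and (I16_MAX - hi <= margin)
    return lo, hi, suspicious

# Using only the two extremes reported above:
print(near_i16_limits([32745, -32767]))
# (-32767, 32745, True)
```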

A 16-bit signed integer (two's complement) uses the MSB as the sign bit: 0 for non-negative, 1 for negative. Numerically, that bit carries a weight of -32768.

A 16-bit unsigned integer interprets the MSB as +32768.

If you increment a U16 from +32765 to +32770, but interpret the resulting 16-bit patterns as I16, you'll get the sequence +32765, +32766, +32767, -32768, -32767, -32766. That kind of "mirroring" is exactly the kind of shape distortion evident in your graph.
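The sequence described above can be reproduced with a short Python sketch. The helper `as_i16` is hypothetical; it reads an unsigned 16-bit value as two's-complement I16 by subtracting 65536 whenever the MSB is set.

```python
def as_i16(u16):
    """Interpret an unsigned 16-bit value (0..65535) as two's-complement I16."""
    if not 0 <= u16 <= 0xFFFF:
        raise ValueError(f"{u16} is outside the U16 range")
    # If the MSB (bit 15) is set, the I16 reading is 65536 lower.
    return u16 - 0x10000 if u16 & 0x8000 else u16

counts = range(32765, 32771)  # U16 values +32765 ... +32770
print([as_i16(c) for c in counts])
# [32765, 32766, 32767, -32768, -32767, -32766]
```

The jump from +32767 to -32768 is the "flip" visible in the graph: the underlying count kept rising, and only its interpretation changed.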

It looks for all the world (and a loaf of year-old bread) like somewhere in the data processing chain, a 16-bit integer that *should* have been interpreted as an *unsigned* int was instead interpreted as a *signed* int.

-Kevin P

P.S. Try the attached code and see for yourself.

03-20-2023 08:24 AM

Thank you all very much for the support. Yep, the culprit was signed vs. unsigned 16-bit integer. Ugh.

Many thanks!

-Matt