DisplayMapping16Bit in LabVIEW NXG

Solved! 01-17-2019 11:44 AM

Dear colleagues,

I'm not sure whether this question belongs in the LabVIEW NXG forum or in Vision, so let me start here.

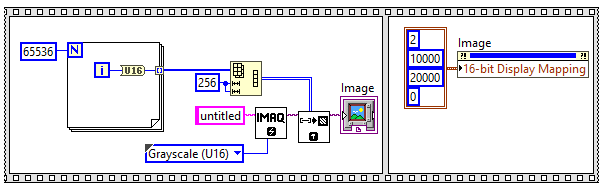

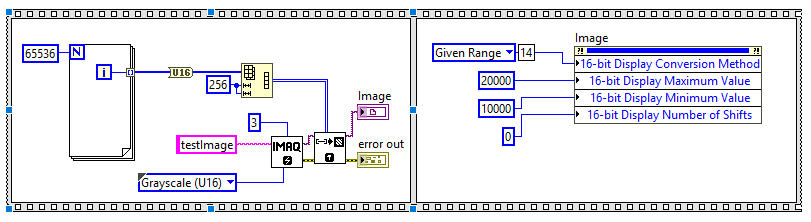

Using the DisplayMapping16Bit property, I get a pretty strange result. For example, for a gradient 0...65535 I map the image to the 10000...20000 range:

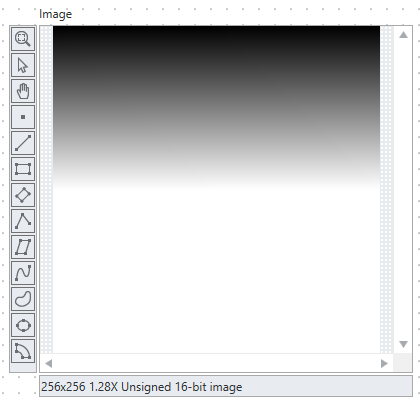

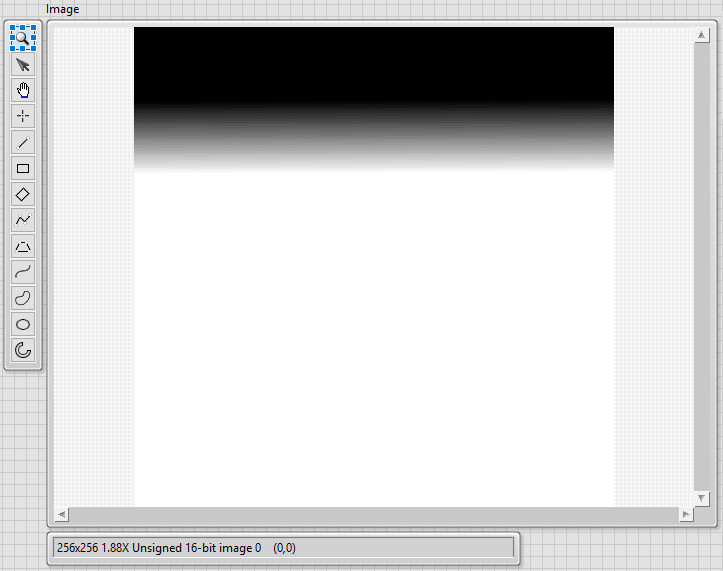

and here is the result from LabVIEW NXG:

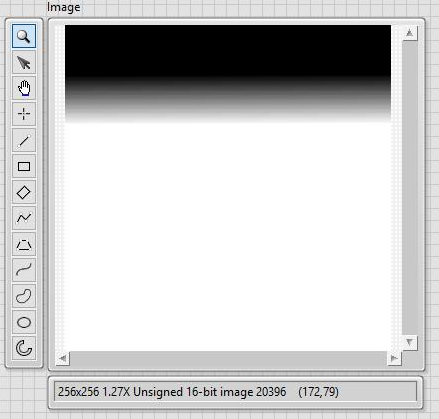

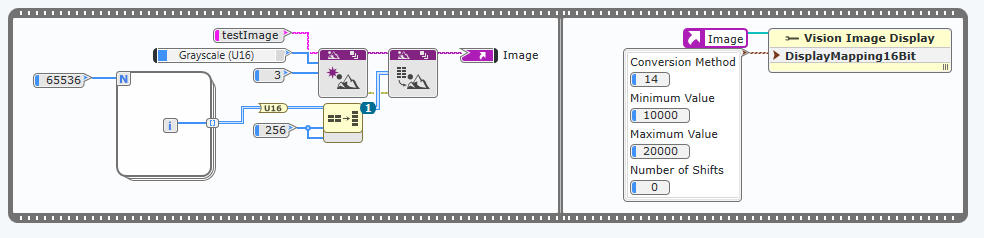

But the expected result is the following:

checked with LabVIEW 2018 / VDM 2018

The error may lie in my assumption that the conversion method works the same way in both development environments. In any case, I was unable to get the same result with any of the conversion methods. The LabVIEW NXG project is attached.

Any idea?


02-05-2019 06:17 AM

Dear Andrey_Dmitriev,

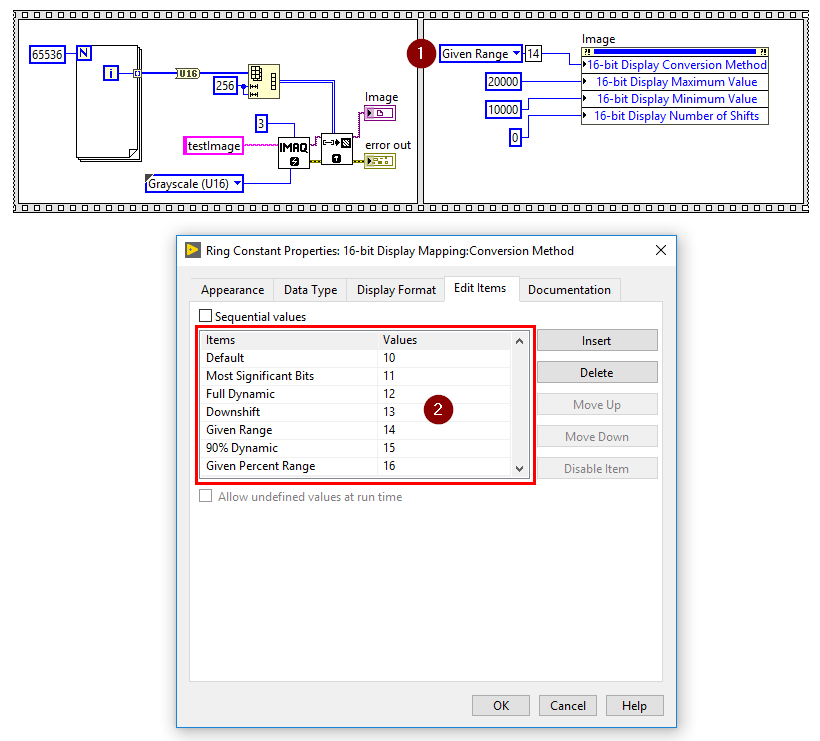

I did some research on the issue you described; here are my observations and a solution. The Conversion Method parameter is not documented for the Vision Image Display Property Node in LabVIEW NXG. The documentation in LabVIEW 2018 seems slightly better: you can create an Enum Constant from the 16-bit Display Mapping:Conversion Method input, which gives you the known, expected values. As you can see in the image below, the value 2 that you provided to this input is not defined. I believe this is the main reason for the different results: LabVIEW 2018 and LabVIEW NXG probably each use a different default value internally (this is my guess).

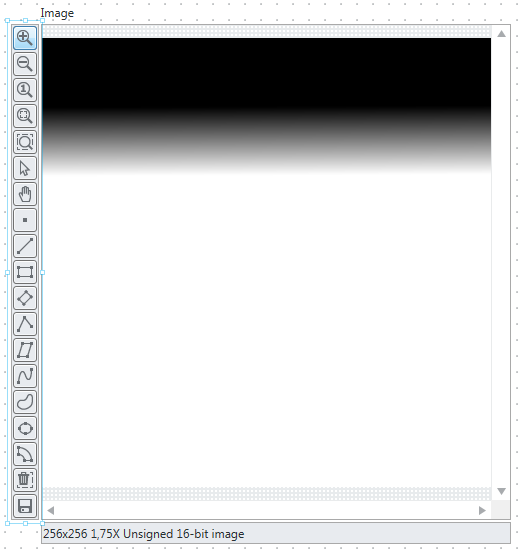

Since you specified Minimum Value and Maximum Value for DisplayMapping, I think you want to map pixel values to those boundary values. That means the Conversion Method parameter should have the value 14 (Given Range). The final result is then the same in both LabVIEW 2018 and LabVIEW NXG, as you can see in the pictures below.

LabVIEW 2018

LabVIEW NXG
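To illustrate what a "Given Range" style mapping is expected to do, here is a minimal Python sketch. It is not NI's implementation: the function name `map_given_range` is hypothetical, and the clamp-then-linearly-rescale behavior (values at or below the minimum shown as 0, values at or above the maximum shown as 255) is an assumption about how such a mapping typically works.

```python
def map_given_range(pixel, minimum, maximum):
    """Map one 16-bit pixel value to an 8-bit display value (illustrative only)."""
    if maximum <= minimum:
        raise ValueError("maximum must be greater than minimum")
    # Assumption: out-of-range pixels saturate at the range boundaries.
    clamped = min(max(pixel, minimum), maximum)
    # Linearly rescale [minimum, maximum] onto [0, 255] using integer math.
    return (clamped - minimum) * 255 // (maximum - minimum)


# A few sample points from a 0...65535 gradient, mapped to 10000...20000.
gradient = [0, 10000, 15000, 20000, 65535]
display = [map_given_range(p, 10000, 20000) for p in gradient]
print(display)  # [0, 0, 127, 255, 255]
```

Under these assumptions, the gradient should appear black below 10000, ramp linearly between 10000 and 20000, and stay white above 20000.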

I hope this explanation will help you.

Kind Regards,

Michal