GPS Conversion ETSI LIP

Solved! 07-07-2014 12:39 AM

I am writing a program that receives an ETSI LIP string, and I need to convert it to decimal degrees. I currently don't understand how the conversion works.

I receive the following in hex, which I converted to binary for ease, but I can't figure out the conversion.

LONG:0110011100110110010011000 or in hex: 67 36 4C 00 (25 bits)

LAT:110010100001100101011001 or in hex: CA 19 59 (24 bits)

A logging program somehow converts the above into the following:

0110011100110110010011000 Longitude = 0x0CE6C98 (145.142012)

110010100001100101011001 Latitude = 0xCA1959 (-37.899131)

Does anyone have any idea how I go about converting the binary/hex to decimal using ETSI LIP?
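A quick cross-check of the values above, as a small Python sketch (the variable names are illustrative). It assumes the hex bytes 67 36 4C 00 are the 25-bit longitude field left-aligned in four bytes with seven zero padding bits on the right, which is what the numbers suggest; the log's 0x0CE6C98 would then be the same 25 bits read as a plain integer.

```python
# Bit strings exactly as posted above.
lon_bits = "0110011100110110010011000"  # 25 bits
lat_bits = "110010100001100101011001"   # 24 bits

# Read each field as a plain unsigned integer.
lon_raw = int(lon_bits, 2)
lat_raw = int(lat_bits, 2)

# Assumption: the received bytes carry the 25-bit field left-aligned,
# so pad the bit string on the right up to 32 bits.
lon_left_aligned = int(lon_bits.ljust(32, "0"), 2)

print(f"{lon_raw:07X} {lon_raw}")   # hex and decimal of the 25-bit field
print(f"{lat_raw:06X} {lat_raw}")   # hex and decimal of the 24-bit field
print(f"{lon_left_aligned:08X}")    # the four received bytes as one hex word
```

If the padding assumption holds, the last line reproduces the received bytes and the first two reproduce the hex values shown by the logging program.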

07-07-2014 07:45 PM

Hi JayPinder

It seems that your question is more of a protocol question than a Measurement Studio question. Is there a specific issue you are having with Measurement Studio?

Still, I hope these links help you:

http://www.etsi.org/deliver/etsi_ts/100300_100399/1003921801/01.03.01_60/ts_1003921801v010301p.pdf

http://www.springfrog.com/converter/decimal-degrees/

regards

07-07-2014 08:02 PM

I guess it is a protocol question. I saw another user's post at http://forums.ni.com/t5/LabVIEW/GPS-Position/td-p/1863217 but it was old, so I thought I'd start my own. Seeing as I am using VB, I thought I'd give it a shot here, as I'm sure I'll have plenty more questions.

The first document is the one I've been referencing. It states:

Longitude

Longitude information element shall indicate longitude of the location point in steps of 360/2^25 degrees in range -180 degrees to +(180 - 360/2^25) degrees using two's complement presentation. Negative values shall be west of zero meridian and positive values shall be east of zero meridian.

Latitude

Latitude information element shall indicate latitude of the location point in units of 180/2^24 degrees in range -90 degrees to +(90 - 180/2^24) degrees using two's complement presentation. Negative values shall be south of equator and positive values shall be north of equator.

Now, I know the following is correct:

0110011100110110010011000 Longitude = 0x0CE6C98 (145.142012)

110010100001100101011001 Latitude = 0xCA1959 (-37.899131)

I just need to find out how they got the decimal lat/long.

I can use the following for the longitude (although, going by the text above, this is the latitude formula):

0x0CE6C98 = 13528216

(180/(2^24))* 13528216=145.142011642

Do you know how I can find -37.899131 with Hex:CA1959/Dec:13244761?

07-08-2014 02:19 AM

07-08-2014 07:01 PM

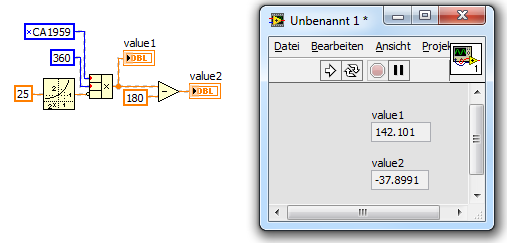

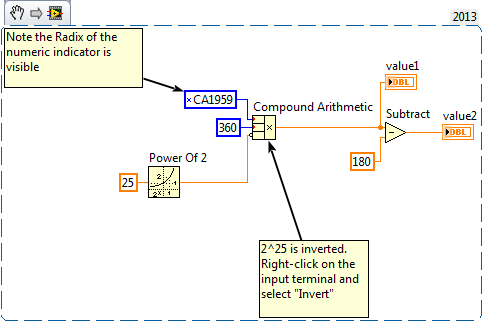

Thanks GirdW. Can you write out what the logic diagram is doing? Then I can adapt it and feed it into VB to get the correct answers.

07-09-2014 01:46 AM - edited 07-09-2014 01:48 AM

Hi Jay,

it's applying the formula given in the specs of that standard. Just read the page I pointed you to in the post above.

It's just some multiplication/division - the symbols should be quite clear…

As you were asking the same question in the LabVIEW discussion forum too, I expected you to be able to "read" LabVIEW.
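For reference, the arithmetic behind the spec text quoted earlier in the thread works out as follows. This is a worked sketch that assumes the raw fields are read as two's complement integers of 25 bits (longitude) and 24 bits (latitude); the symbols $N_{\text{lon}}$ and $N_{\text{lat}}$ are labels introduced here, not names from the standard.

$$
\text{longitude} = N_{\text{lon}} \cdot \frac{360}{2^{25}}, \qquad \text{latitude} = N_{\text{lat}} \cdot \frac{180}{2^{24}}
$$

The latitude value 0xCA1959 = 13244761 has its top bit set (it is at least $2^{23} = 8388608$), so as a signed value it is

$$
N_{\text{lat}} = 13244761 - 2^{24} = -3532455, \qquad -3532455 \cdot \frac{180}{2^{24}} \approx -37.899131
$$

The longitude value 0x0CE6C98 = 13528216 is below $2^{24}$, so it stays positive: $13528216 \cdot 360 / 2^{25} \approx 145.142012$. Since $360/2^{25} = 180/2^{24}$, both fields share the same step size, which is why the "latitude" formula also gave the right longitude.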

07-09-2014 08:02 AM

Hello Jay,

I recreated the code again here with some comments. I also made it a VI Snippet so you can drag and drop the PNG file into LabVIEW. I made the code by looking at the posted code and p. 65 of the PDF that Chris posted. Hope this helps.

National Instruments
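For anyone following along without LabVIEW, here is a minimal Python sketch of the formula from the spec text quoted earlier in the thread. It is not a transcription of the VI snippet. The function names (`twos_complement`, `lip_longitude`, `lip_latitude`) are made up for illustration, and the sketch assumes the raw field has already been extracted as an unsigned integer of the right width (25 bits for longitude, 24 bits for latitude).

```python
def twos_complement(raw: int, bits: int) -> int:
    """Interpret an unsigned `bits`-wide integer as a signed two's complement value."""
    if raw >= 1 << (bits - 1):   # top bit set means the value is negative
        raw -= 1 << bits
    return raw


def lip_longitude(raw: int) -> float:
    """25-bit field, steps of 360/2^25 degrees; negative is west."""
    return twos_complement(raw, 25) * 360.0 / (1 << 25)


def lip_latitude(raw: int) -> float:
    """24-bit field, units of 180/2^24 degrees; negative is south."""
    return twos_complement(raw, 24) * 180.0 / (1 << 24)


# Values from the first post in this thread.
print(round(lip_longitude(0x0CE6C98), 6))  # 145.142012
print(round(lip_latitude(0xCA1959), 6))    # -37.899131
```

The same steps should port to VB fairly directly: subtract 2^bits when the top bit is set, then multiply by the step size.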

07-10-2014 01:43 AM

You beauty - thank you for all your assistance. This is my first exposure to LabVIEW and I like it.