Individual Sample Duration - NI-6220 and NI-6723

Solved! 04-10-2014 09:32 AM

Hi,

We are acquiring data through NI-6220 and NI-6723 DAQs in our laboratory. The data acquisition process runs through MATLAB's Data Acquisition Toolbox, where we have set the sampling rate of the analog inputs to 10 kHz - or 0.1s per sample. We are now wondering how long the sampling process itself takes, i.e. the duration over which an individual sample is taken. Is the signal averaged over the full period of the sample (0.1s), or over a much shorter window? In the latter case, how could we find out the exact duration, and would it be possible to control it?

In our specific case, we are seeing resistance jumps in our system. Although we measure a range of resistances, we suspect that those jumps may actually be between two discrete levels; it is possible that averaging during the sampling produces intermediate values between those two resistances. Having a shorter sampling window would help us interpret the results further.

It seems like this could be a trivial question, or that it could be a Matlab thing rather than a card thing. Bear with us in those cases; we've made the effort to research the issue on the forums and elsewhere 🙂 . Thanks!


04-10-2014 10:10 AM

04-11-2014 02:15 AM

Thanks for your answer, Dennis! However, it seems my question was not entirely clear in my message - we're asking about the duration of an individual sample, since no sample is ever a truly instantaneous measurement.

In our case, samples come at 0.1s intervals - we're wondering if the acquisition of a single sample is done over the full 0.1s, or if it's done in a much shorter period during that window.

04-11-2014 06:57 AM

The sample time is some fraction of the sample period. I used to have a bookmark that explained this in detail but I've lost it. Give me some time to try and find it again.

04-14-2014 04:36 AM

Thanks, that's good to know. Even an order of magnitude for that fraction would be quite helpful!

04-14-2014 12:42 PM

There is a sample-and-hold circuit on the device. The convert clock marks the beginning of the sample interval, while the AI hold complete signal marks the end.

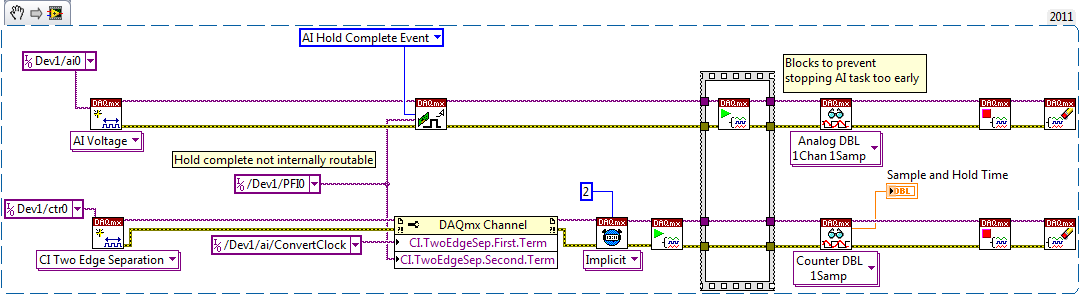

I'm not sure if the hold time is specified anywhere, but you should be able to measure it like this (LabVIEW example... I don't have MATLAB or the Data Acquisition Toolbox unfortunately):

On my PCIe-6351 I get a hold time between 440 and 450 ns. The 6220 might be different but hopefully this gives you an estimate. This parameter is not adjustable.
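To put that hold time in proportion, here is a rough Python sketch of the arithmetic. It assumes the 445 ns midpoint of the PCIe-6351 measurement above also applies, at least in order of magnitude, to the 6220, which has not been verified. The thread quotes both 10 kHz and 0.1 s per sample, which do not match (10 kHz corresponds to 100 µs per sample, and 0.1 s per sample corresponds to 10 Hz), so the sketch evaluates both. The function names are made up for illustration.

```python
# Compare a measured hold time with the sample period for two candidate rates.
# HOLD_TIME_S is the midpoint of the 440-450 ns measured on a PCIe-6351;
# applying it to the NI-6220 is an assumption.
HOLD_TIME_S = 445e-9


def sample_period(rate_hz: float) -> float:
    """Time between consecutive samples, in seconds."""
    return 1.0 / rate_hz


def hold_fraction(rate_hz: float, hold_time_s: float = HOLD_TIME_S) -> float:
    """Fraction of each sample period during which the signal is captured."""
    return hold_time_s / sample_period(rate_hz)


# Evaluate both readings of the acquisition rate mentioned in the thread.
for rate_hz in (10e3, 10.0):
    period = sample_period(rate_hz)
    fraction = hold_fraction(rate_hz)
    print(f"{rate_hz:8.0f} Hz: period = {period:.6g} s, hold fraction = {fraction:.3g}")
```

Under either reading the capture window is well below one percent of the sample period, so the value returned is close to a snapshot rather than an average over the whole period.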

Having said all of that, I don't think it is relevant to your issue. If your input signal is varying between discrete levels and you want to sample only after it has settled to a new value, you'll need to ensure that the AI task is synchronized to whatever is generating the signal.

Best Regards,

04-15-2014 06:23 AM

Hi John,

Thanks for the very thorough reply! I will have to whip up something similar in MATLAB or find a computer with a LabVIEW licence to test this. If the hold time is on the microsecond timescale (or less) no matter what and is not adjustable, we can safely interpret our results.

We do not actually know if our signal goes between discrete values; what we are investigating are unwanted resistance switches. Ideally, those switches would be between two discrete levels, but we are observing intermediate values, which we thought could be due to a hold time that is long relative to the time scale of the switching. If the hold time is on the microsecond scale, that would not be the case.
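The reasoning above can be illustrated with a toy simulation in Python. It is only a sketch under stated assumptions: the two resistance levels (100 and 200), the switching probability, and the number of fine time steps per sample are all hypothetical, the short hold time is idealized as an instantaneous snapshot, and nothing here models the actual 6220 front end.

```python
import random


def telegraph_signal(n_steps, switch_prob, low=100.0, high=200.0, seed=0):
    """Two-level signal that flips with probability switch_prob at each fine time step."""
    rng = random.Random(seed)
    level = low
    signal = []
    for _ in range(n_steps):
        if rng.random() < switch_prob:
            level = high if level == low else low
        signal.append(level)
    return signal


def sample_short_aperture(signal, steps_per_sample):
    """Idealized short hold time: take one instantaneous value per sample period."""
    return signal[::steps_per_sample]


def sample_full_period_average(signal, steps_per_sample):
    """Hypothetical long aperture: average the signal over each whole sample period."""
    last_start = len(signal) - steps_per_sample + 1
    return [
        sum(signal[i:i + steps_per_sample]) / steps_per_sample
        for i in range(0, last_start, steps_per_sample)
    ]


def count_intermediate(samples, low=100.0, high=200.0):
    """Number of samples that sit at neither discrete level."""
    return sum(1 for s in samples if s != low and s != high)


signal = telegraph_signal(n_steps=100_000, switch_prob=0.01)
short = sample_short_aperture(signal, steps_per_sample=100)
averaged = sample_full_period_average(signal, steps_per_sample=100)
print("intermediate values, short aperture:", count_intermediate(short))
print("intermediate values, full-period average:", count_intermediate(averaged))
```

In this model, only the full-period average produces values between the two levels. If intermediate values show up even with a sub-microsecond capture window, they would have to come from somewhere else, for example the signal itself or analog filtering ahead of the converter.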

Thanks again!