USB 6251 memory underflow error 88709

03-21-2017 05:05 PM

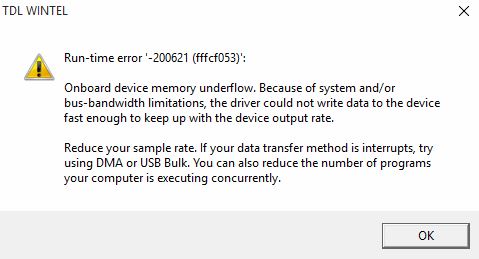

I'm communicating with a USB-6251 and USB-6221. The 6251 reads a continuous stream of data from a light detector. Sometimes the program needs to move from 1Hz processing to 10Hz processing, and so the sampling increases 10 fold as well. When we do this, sometimes the program crashes with a memory underflow error.

What's odd is that this problem doesn't occur on our older computer model, but does on the newer one. The newer one has a similar CPU but is running Windows 10. In most aspects, the computer runs much more smoothly, but for some reason we keep getting this underflow error that never used to occur.

03-22-2017 09:14 AM

I'd need to see the code; there's probably something that can be improved there so that both systems will be reliable.

However, it's a little odd to hear about underflow errors for an app that sounds like it's doing input measurements. Underflow errors tend to go along with output signal generation and a failure to feed the buffer with data as fast as the task needs to generate it. Input tasks are prone to overflow errors where the task writes to the buffer faster than the app retrieves data out of it.
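To make the distinction concrete, here is a toy sketch of the two failure modes. It is not DAQmx code; `simulate_fifo` and all of its numbers are made up for illustration. An output task underflows when the PC refills the buffer more slowly than the device drains it, and an input task overflows when the device fills the buffer faster than the app reads it.

```python
def simulate_fifo(capacity, produce_per_tick, consume_per_tick, ticks, prefill=0):
    """Toy FIFO model: returns 'underflow', 'overflow', or 'ok'.

    Each tick the producer adds samples first, then the consumer removes samples.
    All quantities are illustrative, not taken from any real device.
    """
    level = prefill
    for _ in range(ticks):
        level += produce_per_tick
        if level > capacity:
            return "overflow"   # producer outran the consumer
        level -= consume_per_tick
        if level < 0:
            return "underflow"  # consumer outran the producer
    return "ok"


# Output task: the PC (producer) feeds the buffer slower than the device drains it.
output_case = simulate_fifo(capacity=1000, produce_per_tick=80,
                            consume_per_tick=100, ticks=100, prefill=500)

# Input task: the device (producer) fills the buffer faster than the app reads it.
input_case = simulate_fifo(capacity=1000, produce_per_tick=100,
                           consume_per_tick=80, ticks=100)

print(output_case, input_case)
```

The first case ends in an underflow and the second in an overflow, which is why an underflow report points toward an output task.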

-Kevin P

03-22-2017 12:12 PM

Hi Kevin,

Thank you for your response. Yes, I also thought it was odd that it's getting underflow and not overflow. The way I understand it, it's programmed such that it sets the output signal once, and then the DAQ continuously generates it without needing a constant output from the computer itself. That's what makes me think it's not an output error.

I've attached the code. The "Fast" one is to communicate with the 6251, which mostly deals with the continuous signal we care about, and the "Slow" one is to communicate with the 6221, which mostly deals with peripheral sensors like temperature and pressure data. The extensions are actually ".bas" but the uploader doesn't allow that so I changed it to txt files.

03-22-2017 01:21 PM

Are you sure about the error # and text? Searching here for that error number brings up discussions about other distinct issues that don't seem related to underflow.

As to the code, I really only program in LabVIEW and wrongly assumed your code would be LabVIEW as well. I tried to scan through quickly and have some suspicion about the way you set up sample clock, convert clock, and timebase. The variable ClockFreqHz keeps showing up, but it wouldn't be normal for these items to share the same frequency. Timebase would typically be fastest, sample clock slowest, convert clock somewhere between.

The other, entirely different line of thought is suspicion about Win10 power-saving modes. I've read a number of stories of pain with USB devices, many of them related to the OS doing some power-saving stuff with the USB ports that interrupts DAQ or disconnects the device entirely. I take heed and try to avoid USB devices as a general policy, at least for tests that need to run for long unattended stretches of time. The other discussions about error -88709 sounded *possibly* related to this kind of situation.

-Kevin P

03-22-2017 03:06 PM

I tried disabling USB power saving settings and that hasn't done anything.

You're right, I'm mixing up my errors; the error I'm getting is 200621.

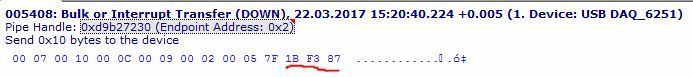

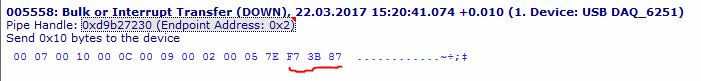

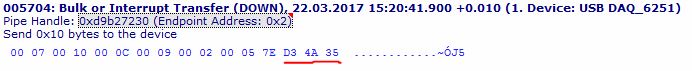

I have a USB analyzer that allows me to see all the packets going between the devices. I've noticed that the fast card will send 32KB of data every 10ms, and then periodically, the computer will send a 16 byte packet to the fast card.

Most of it is the same every time except for the last 4 bytes, which seem to count down (you can see it go down from 7F1___ to 7EF___ then 7ED___). When the program is running at 1Hz (meaning it processes the sampled signals once a second), it sends this packet out to the DAQ almost exactly every 100ms. When the program kicks up to 10Hz, it sends this packet out less often, starting every 500ms, but sends it out less and less often as time goes on, eventually getting to every 1200ms until the error occurs. Snapshots of the 16 byte packets below

What is that 16 byte packet that's being sent to the DAQ? What is it counting down? It seems to be the key in this, and it's possible the DAQ isn't receiving that packet fast enough, and that's causing a memory underflow of some kind?
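For scale, the numbers above can be turned into a rough data-rate estimate. This is only arithmetic on the figures quoted in this post, under two assumptions: "32KB" is read as 32 × 1024 bytes, and the 32KB-per-10ms rate stays the same at both processing rates (which the analyzer traces would need to confirm).

```python
PACKET_BYTES = 32 * 1024   # "32KB" read as 32 KiB (assumption)
PACKET_PERIOD_MS = 10      # one data packet every 10 ms, as observed


def throughput_bytes_per_s(packet_bytes=PACKET_BYTES, period_ms=PACKET_PERIOD_MS):
    """Device-to-PC data rate implied by the observed packet size and period."""
    return packet_bytes * 1000 // period_ms


def bytes_between_sync(sync_interval_ms):
    """Data moved between two consecutive 16-byte packets, assuming a constant rate."""
    return throughput_bytes_per_s() * sync_interval_ms // 1000


rate = throughput_bytes_per_s()
print(f"input stream: {rate} bytes/s")
for interval_ms in (100, 500, 1200):
    print(f"{interval_ms} ms between 16-byte packets -> "
          f"{bytes_between_sync(interval_ms)} bytes of data in between")
```

Under those assumptions, the 16-byte packet goes from arriving once per roughly 0.3 MB of data to once per several MB before the error appears.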

03-23-2017 01:19 PM

The underflow error you're getting sure *sounds like* an indication that you haven't actually gotten your output task configured to regenerate from the on-board buffer. Either way it seems quite clear that it is indeed an error in an output task not an input task.

I see a line in your posted code that starts with 'Call DAQmxSetAOUseOnlyOnBrdMem'. This also seems to be one of the few config calls that don't start with a 'DAQmxErrChk'. Maybe that's allowing the attempt at config to fail without you knowing it? I'd try to query that property somewhere after the task has started as a debug step, assuming there's a query function like 'Call DAQmxGetAOUseOnlyOnBrdMem'.

As to all the USB traffic, I have no specific knowledge at all. When you go from 1 Hz to 10 Hz processing, are you increasing the hardware sampling rate by 10x while reading the same size packets? Or are you reading 10x smaller packets at a time but reading them 10x as often for an overall equal data throughput?

Whatever the 16 byte packet may mean, I find it odd that (all else being equal) it is transmitted significantly less often as your processing rate increases. It seems like a clue. If your data rate increases with your processing rate, it could be that the driver is overwhelmed by demand to transfer data from device to PC, getting in the way of periodic but ultimately important 16 byte packets that want to go the other way. Absolutely just speculating here though.

I would do some targeted troubleshooting. What does the USB analyzer show when you do AO but no AI? Do you ever generate the underflow error when you do fast AI with no AO? Stuff like that...

-Kevin P

03-23-2017 05:07 PM

That's what I'm thinking as well, that the increase in CPU utilization is preventing the program from sending the 16 bytes to the device on time.

If that command failed, it wouldn't output a waveform at all, which it definitely does and that has never been an issue, so I don't think it's the execution of that line per se.

I did see that using the Only On Board Memory command stores the output signal in the same place as the input signal FIFO buffer. It's possible that that is causing a conflict.

03-24-2017 08:20 AM

The sixteen-byte packet is part of the implementation of NI Signal Streaming. It's part of a FIFO synchronization mechanism for the data channel. (Those 16-byte messages and your output data occur on different USB endpoints.)

> I did see that using the Only On Board Memory command stores the output signal in the same place as the input signal FIFO buffer. It's possible that that is causing a conflict.

This is not correct; the input and output FIFOs use separate memory.

Data transfer to a USB-6251 device usually involves two buffers:

- A FIFO onboard your device. For the 6251's analog output subsystem, the FIFO memory is enough for 8K samples.

- A circular memory buffer maintained by NI-DAQmx on your PC. This buffer's size is chosen automatically based on your sample rate. (This can be overridden with the buffer size properties.)

The Use Only Onboard Memory property disables the use of the second buffer.

Disabling that second buffer is useful in the case where you want to continuously regenerate a fixed waveform that can fit in the onboard buffer-- this lets you transfer the waveform only once, instead of it being continuously streamed from the DAQmx buffer, thus reducing the amount of bus traffic necessary.
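A rough sketch of that trade-off, as plain arithmetic rather than DAQmx calls. The helper names are hypothetical, "8K samples" is read as 8192, and 2 bytes per sample is an assumption; check the device specifications for the exact figures.

```python
ONBOARD_FIFO_SAMPLES = 8 * 1024  # "8K samples" read as 8192 (assumption)


def fits_onboard(waveform_samples, fifo_samples=ONBOARD_FIFO_SAMPLES):
    """True if the whole waveform can live in the device FIFO."""
    return waveform_samples <= fifo_samples


def bus_bytes(waveform_samples, sample_rate_hz, duration_s,
              bytes_per_sample=2, onboard_only=False):
    """Approximate bytes sent PC -> device while generating for duration_s.

    onboard_only=True models regenerating from the device FIFO: the waveform
    is transferred once. Otherwise every generated sample crosses the bus.
    """
    if onboard_only:
        if not fits_onboard(waveform_samples):
            raise ValueError("waveform does not fit in the onboard FIFO")
        return waveform_samples * bytes_per_sample
    return int(sample_rate_hz * duration_s) * bytes_per_sample


# Hypothetical example: 5000-sample waveform at 100 kS/s for one minute.
once = bus_bytes(5000, 100_000, 60, onboard_only=True)
streamed = bus_bytes(5000, 100_000, 60)
print(once, streamed)
```

In this made-up case the one-time transfer is 10,000 bytes, versus 12,000,000 bytes when the same waveform is streamed from the PC buffer for a minute.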

Is the task that gives you the -200621 error the same task for which you've configured Use Only Onboard Memory? (I've tried looking through your code but between it not being a reduced test case and my inexperience with Visual Basic I couldn't quite follow it.)

03-24-2017 01:13 PM

That's really interesting info, thank you! So if I understand correctly, the 16-byte packet is being sent as intended to synchronize the data stream, which is why, right after it, the next packet is under 32KB, followed by a dozen 32KB packets.

Yes, we originally had the program output a waveform constantly, but we found that a couple of times an hour something would go wrong: it would miss sending out a waveform and then start again. It didn't throw a fatal error, but it did mess up the system, so we figured turning on the Use Only Onboard Memory property would fix that issue. It did, but we now face a new one, and this time it crashes the program.

Now I have two followup questions:

1) How do I go about finding which task is throwing the error in the first place?

2) Is it possible to catch the error in code, and program it such that it can say "Oh, it's just error 200621, keep running the program".

03-24-2017 01:49 PM

> How do I go about finding which task is throwing the error in the first place?

DAQmxErrChk() is a function that accepts an integer error code and creates a dialog if that error code is a negative value. You get this function by adding NIDAQmxErrorCheck.bas to your project. I see a couple of options:

- Use the Visual Basic debugger to set a breakpoint in the DAQmxErrChk function, then, when the breakpoint is triggered, look at the call stack to see which function is calling it.

- The example DAQmxErrChk function only uses DAQmxGetErrorString, which gets an error description from an error code. The function DAQmxGetExtendedErrorInfo returns additional dynamic information about the error state, which usually also indicates the task name. It may be useful to extend DAQmxErrChk to retrieve this and display it as part of the dialog.

- (You'll probably also want to provide a meaningful name as part of DAQmxCreateTask; presently all your calls use an empty string, which means you get an automatically generated name.)
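The shape of such an extended check might look like the sketch below. It is written in Python with stand-in lookups rather than the real bindings: `check`, `DaqError`, `fake_error_string`, `fake_extended_info`, the task name "FastAO", and the message text are all hypothetical. In the actual project the two lookups would be DAQmxGetErrorString and DAQmxGetExtendedErrorInfo.

```python
class DaqError(Exception):
    """Carries the error code plus the context a bare dialog would omit."""

    def __init__(self, code, task_name, description, extended_info):
        self.code = code
        self.task_name = task_name
        super().__init__(
            f"DAQmx error {code} in task '{task_name}': "
            f"{description} | {extended_info}"
        )


def check(code, task_name, get_error_string, get_extended_info):
    """Raise DaqError for negative codes; pass anything else through."""
    if code < 0:
        raise DaqError(code, task_name,
                       get_error_string(code), get_extended_info())
    return code


# Stand-ins for the driver's lookup functions (placeholder text only).
def fake_error_string(code):
    return {-200621: "memory underflow (placeholder text)"}.get(code, "unknown error")


def fake_extended_info():
    return "Task Name: FastAO"


try:
    check(-200621, "FastAO", fake_error_string, fake_extended_info)
except DaqError as err:
    report = str(err)

print(report)
```

Passing a meaningful task name at every call site is what makes the report point at a specific task instead of an auto-generated name.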

> Is it possible to catch the error in code, and program it such that it can say "Oh, it's just error 200621, keep running the program".

The DAQmx functions just return an integer value as an error code. Instead of using DAQmxErrChk(), you can write your own error-checking function that has different behavior for different error codes. DAQmxErrChk also uses Err.Raise to issue the dialog box, so you could instead trap the raised error (with Try...Catch, for example) and handle it appropriately.

Note that a -200621 error is fatal for the particular DAQmx task, so you will need to have a means to stop and restart it.
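One possible shape for that stop-and-restart logic is sketched below in Python against a fake task object. `supervise`, `FakeTask`, and the restart limit are hypothetical; in the real program `start`, `stop`, and `step` would wrap the corresponding DAQmx calls for the affected task and return their integer codes.

```python
RECOVERABLE = -200621  # the underflow code discussed in this thread


class FakeTask:
    """Stand-in for a DAQmx task; replays a scripted list of return codes."""

    def __init__(self, codes):
        self.codes = list(codes)
        self.index = 0
        self.starts = 0
        self.stops = 0

    def start(self):
        self.starts += 1

    def stop(self):
        self.stops += 1

    def step(self):
        code = self.codes[self.index]
        self.index += 1
        return code


def supervise(task, n_steps, max_restarts=3):
    """Run n_steps; restart the task on RECOVERABLE, give up on anything else."""
    restarts = 0
    task.start()
    for _ in range(n_steps):
        code = task.step()
        if code >= 0:
            continue  # success (or a non-negative warning code)
        if code == RECOVERABLE and restarts < max_restarts:
            task.stop()    # the failed task cannot continue as-is
            task.start()
            restarts += 1
            continue
        task.stop()
        raise RuntimeError(f"unrecoverable DAQmx error {code}")
    task.stop()
    return restarts


task = FakeTask([0, 0, -200621, 0, -200621, 0])
restart_count = supervise(task, n_steps=6)
print(restart_count, task.starts, task.stops)
```

Capping the number of restarts keeps a persistent fault from turning into a silent restart loop; any other negative code still stops the program.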