cDAQ-9184 AI-AO data delays

Solved! 08-15-2013 09:20 AM

Hi,

I have a cDAQ-9184 chassis with two modules installed: NI 9215 (AI) and NI 9263 (AO).

The AO generates a 5 kHz signal and the AI acquires it. In the beginning everything is fine, but after 20-30 seconds a delay (up to 30 seconds!) appears between generation and acquisition.

The time lag depends on the DAC rate. If it is 20*5000 = 100 kHz, the delay is 15 sec; if it is 10*5000 = 50 kHz, the delay is 30 sec.
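One way to read these two data points, as a rough sketch: if the lag comes from a fixed backlog of samples queued ahead of the DAC, then lag multiplied by update rate should give the same number in both cases. The snippet below only redoes that arithmetic with the figures quoted above. The helper name `backlog_samples` is made up for illustration and is not part of DAQmx.

```python
def backlog_samples(update_rate_hz, delay_s):
    """Samples that must be clocked out before a newly written sample reaches the output."""
    return update_rate_hz * delay_s


# Figures quoted in the post: 100 kHz -> 15 s lag, 50 kHz -> 30 s lag.
backlog_fast = backlog_samples(100_000, 15)
backlog_slow = backlog_samples(50_000, 30)

print(backlog_fast, backlog_slow)
```

Both cases give the same sample count, which would be consistent with a fixed-size buffer somewhere in the output path rather than with a network or CPU bottleneck.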

It works with the PCI DAQ version, but I can't understand why there are such delays with the Ethernet chassis.

I tested it on gigabit Ethernet with the chassis connected directly to the host. But I don't think the problem is in the network, because the network load is about 0.55% and data transfer is ~1 Mb/s. CPU load is also low.

And I think the trouble is in generation, not acquisition, because the read flushes all samples in the read buffer ("availsamplesperchan" is close to the number of samples per channel in DAQmx Read) and because changing the DAC rate influences how quickly the signal updates.

Also, it works well without such big delays at lower signal rates, for example when generating a 50 Hz sine wave.

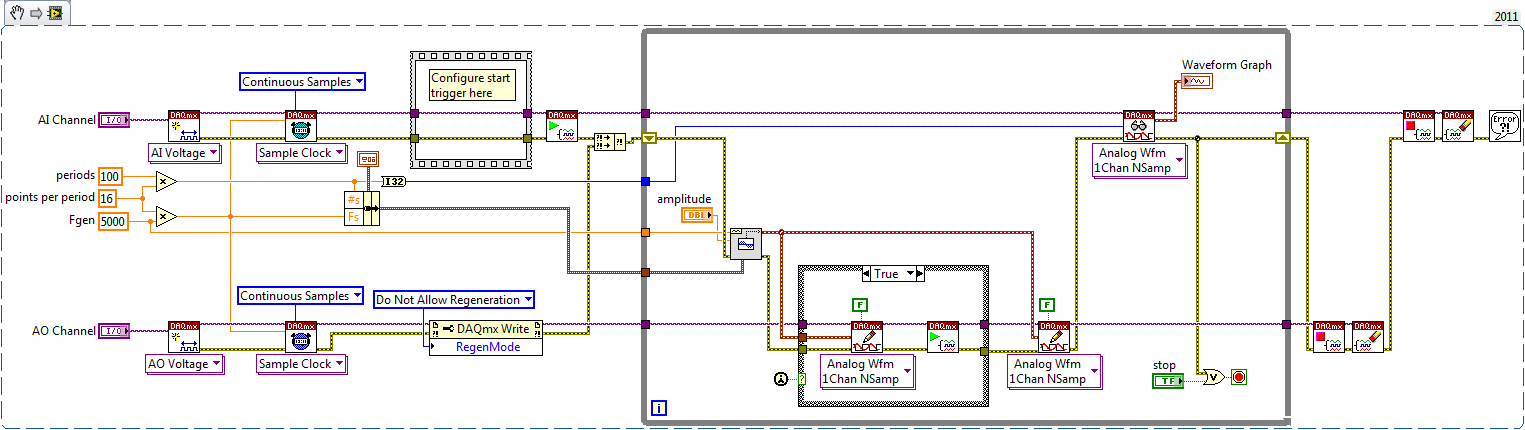

Example code is attached. I'm reading 100 periods of a 5 kHz sine wave at 16 points per period (sample rate = 80 kHz, 1600 samples), and generating 100 periods of a 5 kHz sine wave at 20 points per period (update rate = 100 kHz, 2000 samples).

08-19-2013 07:42 AM

zlavick,

Have you considered using a single while loop rather than two separate ones?

RF Product Support Engineer

National Instruments

08-19-2013 08:39 AM

I've checked it now (also with "do not allow regeneration"), but the result is the same: 20 seconds of delay.

I have tried a lot of variants, for example generating the wave signal in my program and acquiring it in MAX. I get the same fixed delay everywhere.

Maybe this is just the default way the DAC buffers work in CompactDAQ?

08-19-2013 03:46 PM

This probably has to do with data being buffered on the 9184.

A sample isn't "Available" until it has been transferred back to your host. Making a read call with a specified number of samples (NOT -1) will force the transfer, otherwise the data is just going to sit on the 9184 until its transfer request condition has been met (DAQmx Channel Property Node=>AI.DataXferReqCond).

Conversely, on the generation side, as long as there is space in the output buffer you will immediately write new data. What ends up happening is your generation loop will run several times at the very start of the program and then you will have a huge buffer of data that you have to clock out before you get to any updated results later on (e.g. in your case the user can change the amplitude while the task is running). Try using a workaround such as this--unfortunately I don't have an ethernet chassis to try this out myself at the moment.

These weren't issues on your PCI device because transfers are much more frequent and buffers are much smaller.

I'm not sure what your overall application is, but in addition to what I have mentioned above you'll probably also want to add actual hardware synchronization to your tasks (i.e. trigger one task off of the other one).

Best Regards,

08-20-2013 01:27 AM

Thanks for your reply, but it doesn't really help me.

1. How does generation depend on **AI**.DataXferReqCond? The AI reads all samples from the 9184 without any problem and without "AvailSampPerChan" growing. I read it as fast as I can.

2. I saw and tried your example with the increasing delay, but it's not my case. The delays in my test are not "2 buffer sizes". In my program one buffer == 20 msec, so two buffers == 40 msec. Not 20 seconds! That means the buffered data is about 2000 buffers, or 2000*2000 samples.

3. The AO FIFO size for a PCI device is 8191 samples (PCI-6221, for example). The output buffer size for the cDAQ-9184 is also 8191 samples (in "non-regeneration mode"). And even in "allow regeneration" mode it has smaller buffers (127 samples per slot) than the PCI version. I can't understand where it can hold "4000000" samples.

Also, the clocks in both the PCI device and the cDAQ are 80 MHz. Where is the difference?

4. In my main project I start the AI and AO tasks synchronously. I just removed all unnecessary parts of the code to isolate the problem. In my main program I also get these delays.

08-20-2013 12:55 PM

I'll re-iterate that I don't have an ethernet cDAQ chassis to verify any of this so I'm merely speculating (I've used them pretty extensively in the past though).

How are you measuring the delay between generation and acquisition? I'm assuming you are updating the amplitude of your generated signal (from software) and then it takes ~20-30 seconds to see the updated result on the measured input, in which case we'll have to determine if the delay is coming from the analog input or the analog output (or if you could measure the signal with some other instrument you could verify the source of the delay).

1. If AvailSampPerChan is growing continuously then data is being transferred back to the host. If that's the case, then the analog input probably isn't your issue--check that off the list.

2. You may be writing 20 msec of data per call to DAQmx Write, but it would be possible for your write loop to run thousands of times as the program is starting and fill up the buffer on the 9184. The "2 buffer sizes" delay mentioned on the example program is referring to the behavior after the workaround.

Unfortunately, I don't know the size of this buffer on the 9184 off the top of my head and I don't think it is in the specifications (the buffer is also shared between multiple tasks). This is not the same thing as the 127 sample per slot AO buffer which is present on all cDAQ chassis--the ethernet/wireless chassis controller contains an additional buffer that isn't really mentioned as far as I can tell in the published specifications (apparently it's 12 MB on the wireless cDAQ chassis).

A simple way to check for this is to probe the iteration counter on your write loop. If the problem is what I think it is, you'll see the loop counter updating quickly at the beginning of the program until the buffer is saturated, and only then would it slow down to the rate that you are actually generating data.
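As a rough illustration of what that loop counter would look like, here is a toy simulation in plain Python (not DAQmx code). It assumes an infinitely fast host, a 2000-sample write per iteration at 100 kHz as in the original post, and an intermediate buffer of 1,500,000 samples. That capacity is an assumption for the sketch; it is roughly the gap reported at the end of this thread, not a published specification.

```python
def simulate_unthrottled_writer(capacity, chunk, rate_hz, duration_ms):
    """Return the cumulative write-loop iteration count, sampled once per second."""
    drained_per_ms = rate_hz // 1000
    queued = 0
    iterations = 0
    counts = []
    for t_ms in range(duration_ms):
        # Host side: keep writing while a whole chunk still fits in the buffer.
        while queued + chunk <= capacity:
            queued += chunk
            iterations += 1
        # Device side: clock out one millisecond worth of samples.
        queued = max(0, queued - drained_per_ms)
        if (t_ms + 1) % 1000 == 0:
            counts.append(iterations)
    return counts


# Hypothetical capacity; chunk size and rate are taken from the original post.
counts = simulate_unthrottled_writer(
    capacity=1_500_000, chunk=2000, rate_hz=100_000, duration_ms=3000
)
per_second = [counts[0]] + [b - a for a, b in zip(counts, counts[1:])]
print(per_second)
```

In this model the first second contains hundreds of iterations (the burst that fills the buffer), and every later second settles at the true generation rate of 50 iterations per second.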

3. 4000000 samples is ~8 MB, which actually sounds about right for the ethernet controller buffer given that the wireless chassis uses 12MB (I really wish NI would publish this specification).

4. Makes sense to me. The lack of a synchronized start certainly wouldn't explain the delay you are seeing, I was just pointing it out.

Best Regards,

08-20-2013 02:52 PM - edited 08-20-2013 03:18 PM

> I'm assuming you are updating the amplitude of your generated signal (from software) and then it takes ~20-30 seconds to see the updated result on the measured input,

Yes, that's right. The input works correctly, but the output has delays. I change the amplitude and measure the output with other devices (a voltmeter, for example), and as far as I can see the values only update after 20-30 seconds.

And yes, it looks like there is an internal buffer of about 8-12 MB. And you are right about the "buffer saturation".

There is one more thing. When I check the property "Total Samples Per Channel Generated", it is not equal to "Current Write Position" (DAQmx Write). If I understand correctly, these properties should have the same values. With the PCI-6221 they really do have close values.

In the beginning "Total Samples Per Channel Generated" is close to "Current Write Position", but after a few seconds the difference starts growing and then stabilizes. In any case, not all written samples have been generated, and it looks like they are being generated from the "12 MB buffer". I also found a dependence on the AO update rate: if the rate is higher, many more samples are generated; if it is lower, the gap between "TSPCG" and "CWP" grows faster.

But knowing that not all the samples have been written doesn't solve my problem. How can I fix this? The example with the decreasing delay doesn't work for me.

It says TotalSampPerChanGenerated = 2006, and it never gets bigger than 4000 (2000*2 + 2006 < 8000). It stops writing samples; "2006" is the last value. It looks like the samples are going nowhere... Maybe I'm doing something wrong?

08-20-2013 04:03 PM - edited 08-20-2013 04:08 PM

The example here didn't work?

The general idea is to use non-regeneration so the module will output each sample you write to it one (and only one) time. Then, you find some method of regulating how quickly you write data to the hardware to ensure you write data only as often as necessary.
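A minimal sketch of that pacing decision, in plain Python rather than LabVIEW: `curr_write_pos` and `total_generated` stand in for the "Current Write Position" and "Total Samples Per Channel Generated" properties discussed in this thread, and how they are read from the task is not shown. The function names and the `max_chunks_ahead` parameter are hypothetical.

```python
def should_write(curr_write_pos, total_generated, chunk, max_chunks_ahead):
    """Allow a write only if the backlog stays within max_chunks_ahead chunks afterwards."""
    backlog = curr_write_pos - total_generated  # samples written but not yet output
    return backlog + chunk <= max_chunks_ahead * chunk


def worst_case_latency_s(max_chunks_ahead, chunk, rate_hz):
    """Longest time a freshly written sample can wait behind the allowed backlog."""
    return max_chunks_ahead * chunk / rate_hz


# Values from the original post: 2000-sample writes at a 100 kHz update rate.
print(should_write(0, 0, 2000, 2))        # nothing queued yet
print(should_write(4000, 0, 2000, 2))     # two chunks already waiting
print(worst_case_latency_s(2, 2000, 100_000))
```

With at most two chunks allowed ahead of the DAC, the worst-case lag in this model is 40 ms, which is the "2 buffer sizes" figure mentioned earlier in the thread.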

If you are able to run the AO and the AI at the same loop rate (Sample Rate / Number of Samples written or read per loop), you could even use the AI task to regulate the speed at which you write data to the AO task. Does this VI work (wish I had a 9184 myself)?
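For the rates given in the original post, the loop-rate formula above works out as follows. This is only the arithmetic; the function name `loop_rate_hz` is made up for illustration.

```python
def loop_rate_hz(sample_rate_hz, samples_per_loop):
    """Loop rate = sample rate / number of samples written or read per loop."""
    return sample_rate_hz / samples_per_loop


ao_loop_rate = loop_rate_hz(100_000, 2000)  # AO: 100 kHz, 2000 samples per write
ai_loop_rate = loop_rate_hz(80_000, 1600)   # AI: 80 kHz, 1600 samples per read

print(ao_loop_rate, ai_loop_rate)
```

Both loops come out at the same rate, so with these settings one AI read per AO write would keep the two tasks in step.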

The behavior you're describing on the property nodes doesn't make too much sense to me to be honest, but I can't replicate it without a 9184 of my own. The linked example seems to be using TotalSampPerChannelGenerated with success (and there is a single comment on the page that indicates the example worked for at least one other person).

Best Regards,

08-21-2013 02:33 AM

Yes, the screenshot from my previous message was made with exactly this example (9188 AO.vi), and it didn't work.

Your example (AOAI_Feedback.vi) works faster for me: the amplitude changes within 1-3 sec (maybe faster), but in "allow regeneration" mode it has a 20 sec delay.

In my project I need regeneration mode, rewriting the FIFO once or twice a day. I wanted to update the DAC FIFO only when it is really needed (because I don't know how stable and deterministic the network is). Also, in my case AI and AO run in two different loops...

Anyway, thanks for your help. I'll see how your advice can be useful for me.

08-22-2013 03:42 AM

Now it works in two separate loops, checking "TotalSampPerChanGenerated".

The problem was that the buffer being sent to the cDAQ was too small. 8k samples and 4 caching buffers work pretty stably.

Also, I've checked the difference between "CurrWritePos" and "TotalSampPerChanGenerated", and the maximum value is 1 500 000. If 1 sample is a DBL, it really means that the buffer size is 1.5M * 8 bytes = 12 MB.
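The size estimate can be written out as below. It is only a sketch of the arithmetic and rests on the same assumption as the text: that each queued sample occupies 8 bytes (a DBL). Whether the chassis really stores samples in that form is not confirmed anywhere in this thread.

```python
BYTES_PER_DBL = 8            # assumption: one queued sample is an 8-byte double
max_gap_samples = 1_500_000  # largest observed CurrWritePos - TotalSampPerChanGenerated

buffer_bytes = max_gap_samples * BYTES_PER_DBL
buffer_mb = buffer_bytes / 1_000_000   # decimal megabytes
buffer_mib = buffer_bytes / 2**20      # binary mebibytes, for comparison

print(buffer_bytes, buffer_mb, round(buffer_mib, 2))
```

In decimal units this gives the 12 MB figure; in binary units it is a little under 11.5 MiB.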

Thanks for the thread.