Memory leak/fragmentation related to creating lots of files even though they are closed?

03-11-2024 10:16 AM - edited 03-11-2024 03:28 PM

We have a Linux RT application that seems to behave differently when built and run in the LabVIEW 2020 environment (Firmware 8.5) than in LabVIEW 2022 (Firmware 8.8) on various targets (cRIO-9030, 9053, 9063, 9041). In the 2022 environment it crashes after a few days, whereas in 2020 it runs fine.

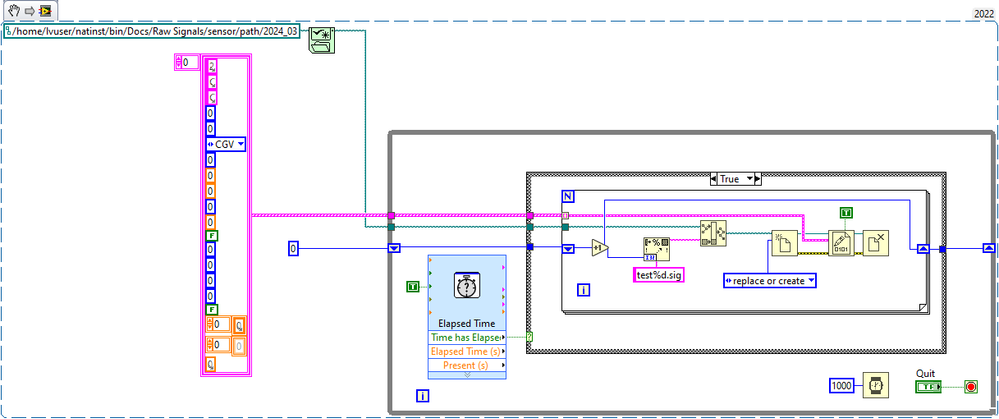

One of my prime suspects for the crash (but one which so far does not display any obvious difference between 2020 and 2022) is a very simple operation: every 2 hours the application creates, writes *and* closes 100 unique 62 KB files. If I disable this operation (it is an optional logging feature, so it can be turned off without rebuilding the app), or make it write to the same 100 files over and over instead, the application is stable (i.e. it runs beyond the test period). It seems the file operation has an issue, and I am thinking it might be fragmenting or leaking memory.

NI MAX reports, even just before the crash, that there is plenty of free memory and free disk space (and low, i.e. normal, CPU activity as well). The OS reports that the LVRT process uses a stable amount of memory, around 26% of the total. But if I use `cat /proc/meminfo` and `cat /proc/buddyinfo` I can see that every file created, written and closed shrinks MemFree, and the number of larger memory blocks goes down while the number of smaller ones goes up, until they also diminish. The crash seems to occur around the time when MemFree reaches 0.
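To follow these counters over a long run without watching the console, they could be sampled by a small script. This is only a minimal Python sketch, assuming a Python 3 interpreter is available on the target (that is an assumption, not part of the setup described here); the names `parse_meminfo` and `snapshot` are made up for illustration.

```python
def parse_meminfo(text):
    """Parse /proc/meminfo-style text into {field: int}.

    Values are in kB where the file gives a unit; the HugePages_*
    counters are plain numbers.
    """
    info = {}
    for line in text.splitlines():
        name, sep, rest = line.partition(":")
        parts = rest.split()
        if sep and parts:
            info[name.strip()] = int(parts[0])
    return info


def snapshot(fields=("MemFree", "MemAvailable", "Cached", "SReclaimable")):
    """Read /proc/meminfo (Linux only) and return the selected fields."""
    with open("/proc/meminfo") as f:
        info = parse_meminfo(f.read())
    return {name: info.get(name) for name in fields}
```

Calling `snapshot()` at a fixed interval and appending the result to one and the same log file would give a trend without creating new files on each sample.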

If the application is reduced to just this file operation and run at an accelerated pace, its effect on memory is easily observable (here I create 36 unique files (21 KB each) every 2 minutes):

At the start of the test, the memory reports on e.g. a cRIO-9030 typically look like this:

Meminfo:

MemTotal: 890824 kB

MemFree: 657132 kB

MemAvailable: 748736 kB

Buffers: 8644 kB

Cached: 121480 kB

SwapCached: 0 kB

Active: 48432 kB

Inactive: 70172 kB

Active(anon): 472 kB

Inactive(anon): 26684 kB

Active(file): 47960 kB

Inactive(file): 43488 kB

Unevictable: 55184 kB

Mlocked: 55168 kB

SwapTotal: 0 kB

SwapFree: 0 kB

Dirty: 4 kB

Writeback: 0 kB

AnonPages: 43704 kB

Mapped: 59992 kB

Shmem: 1004 kB

KReclaimable: 18596 kB

Slab: 32064 kB

SReclaimable: 18596 kB

SUnreclaim: 13468 kB

KernelStack: 3824 kB

PageTables: 2892 kB

NFS_Unstable: 0 kB

Bounce: 0 kB

WritebackTmp: 0 kB

CommitLimit: 864096 kB

Committed_AS: 91060 kB

VmallocTotal: 34359738367 kB

VmallocUsed: 10024 kB

VmallocChunk: 0 kB

Percpu: 456 kB

HardwareCorrupted: 0 kB

HugePages_Total: 0

HugePages_Free: 0

HugePages_Rsvd: 0

HugePages_Surp: 0

Hugepagesize: 2048 kB

Hugetlb: 0 kB

DirectMap4k: 58276 kB

DirectMap2M: 872448 kB

Buddyinfo:

Node 0, zone DMA32 3 3 3 2 0 1 1 1 0 2 156

24 hours into the test the numbers have changed to:

Meminfo:

MemTotal: 890824 kB

MemFree: 8720 kB

MemAvailable: 746548 kB

Buffers: 26240 kB

Cached: 670276 kB

SwapCached: 0 kB

Active: 78944 kB

Inactive: 607252 kB

Active(anon): 504 kB

Inactive(anon): 27896 kB

Active(file): 78440 kB

Inactive(file): 579356 kB

Unevictable: 55212 kB

Mlocked: 55196 kB

SwapTotal: 0 kB

SwapFree: 0 kB

Dirty: 0 kB

Writeback: 0 kB

AnonPages: 44912 kB

Mapped: 60300 kB

Shmem: 1036 kB

KReclaimable: 98472 kB

Slab: 113892 kB

SReclaimable: 98472 kB

SUnreclaim: 15420 kB

KernelStack: 3712 kB

PageTables: 2848 kB

NFS_Unstable: 0 kB

Bounce: 0 kB

WritebackTmp: 0 kB

CommitLimit: 864096 kB

Committed_AS: 92044 kB

VmallocTotal: 34359738367 kB

VmallocUsed: 9912 kB

VmallocChunk: 0 kB

Percpu: 456 kB

HardwareCorrupted: 0 kB

HugePages_Total: 0

HugePages_Free: 0

HugePages_Rsvd: 0

HugePages_Surp: 0

Hugepagesize: 2048 kB

Hugetlb: 0 kB

DirectMap4k: 58276 kB

DirectMap2M: 872448 kB

Buddyinfo:

Node 0, zone DMA32 186 102 50 25 24 8 1 0 0 0 0

So MemFree is approaching 0, but MemAvailable is only slightly down. The large memory blocks have been replaced by many small ones, though, which eventually disappear as well. The latter seems less healthy...(?)
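To put numbers on the two Buddyinfo lines: column k counts free blocks of 2^k contiguous pages, so the columns can be converted to kB. The sketch below assumes 4 kB pages (the usual size on x86_64, not verified on the target); the function names are hypothetical.

```python
PAGE_KB = 4  # assumption: 4 kB pages, the usual size on x86_64


def parse_buddy_line(line):
    """Extract the per-order counts from one /proc/buddyinfo line."""
    # Layout: "Node 0, zone DMA32 <order 0> <order 1> ... <order 10>"
    return [int(tok) for tok in line.split()[4:]]


def buddy_free_kb(counts, page_kb=PAGE_KB):
    """Free kB per order: counts[k] blocks of 2**k contiguous pages."""
    return [n * (2 ** order) * page_kb for order, n in enumerate(counts)]


def largest_block_kb(counts, page_kb=PAGE_KB):
    """Size in kB of the largest contiguous block that is currently free."""
    orders = [order for order, n in enumerate(counts) if n > 0]
    return (2 ** max(orders)) * page_kb if orders else 0


start = parse_buddy_line("Node 0, zone DMA32 3 3 3 2 0 1 1 1 0 2 156")
later = parse_buddy_line("Node 0, zone DMA32 186 102 50 25 24 8 1 0 0 0 0")

print(sum(buddy_free_kb(start)), largest_block_kb(start))  # 644116 4096
print(sum(buddy_free_kb(later)), largest_block_kb(later))  # 5976 256
```

Both totals land close to the MemFree values in the corresponding Meminfo dumps (657132 kB and 8720 kB); the remainder is likely in zones not included in the quoted line. Note that Buddyinfo only describes pages that are free right now, so memory held as reclaimable page cache does not appear in it.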

In the actual application there is surrounding logic that checks whether the disk is getting full or the number of files in a given monthly folder is getting high; if either occurs, a decimation process kicks in (deleting every 4th file, for example). So for a split second I thought I had found the culprit when I saw this comment about a leak in Get Volume Info...but that was on VxWorks: https://forums.ni.com/t5/Real-Time-Measurement-and/Detecting-external-USB-drive-mounting/td-p/182048...
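For readers who want to reproduce that housekeeping outside LabVIEW, the check-then-decimate idea could look roughly like the Python sketch below. It is not the application's actual code: the function names, the thresholds `max_files` and `min_free_fraction`, and the choice to order files by name are all assumptions made for illustration.

```python
import os
import shutil


def needs_decimation(folder, max_files=1000, min_free_fraction=0.10):
    """True if the volume is nearly full or the folder holds too many entries.

    Both thresholds are hypothetical defaults.
    """
    usage = shutil.disk_usage(folder)
    too_full = usage.free / usage.total < min_free_fraction
    too_many = len(os.listdir(folder)) > max_files
    return too_full or too_many


def decimate(folder, nth=4):
    """Delete every nth file in `folder` (ordered by name); return deleted paths."""
    names = sorted(
        name for name in os.listdir(folder)
        if os.path.isfile(os.path.join(folder, name))
    )
    deleted = []
    for name in names[nth - 1::nth]:
        path = os.path.join(folder, name)
        os.remove(path)
        deleted.append(path)
    return deleted
```

With eight files in a folder, `decimate(folder)` removes the 4th and 8th and leaves six.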

During testing, the decimation logic only kicks in on the smallest cRIO, the 9063, due to its limited disk size, and unlike the other targets it does not crash. If I manually delete files on the other devices, I can see that MemFree goes up again. It seems files hold memory even after they are closed, but this memory is freed if the files are deleted. Buddyinfo also indicates that larger blocks return.

For the Linux RT experts out there: is this expected behaviour? Or have you seen similar crashes related to the creation of many files on RT, and do you know what might address the issue? The fact that disabling these file writes in the application eliminates (or at least significantly delays) the crash makes it look like I am on the right track, but then I would have expected to see a difference between 2020 and 2022, which I have not seen yet (still running a comparison test).

03-12-2024 01:56 AM - edited 03-12-2024 02:00 AM

I'll be intrigued to research more. Off the top of my head the available memory is the important metric rather than free. I need to refresh my mind on looking at fragmentation but sounds like you are ahead of me there. The buddyinfo output does seem to support a fragmentation issue but I'm not experienced enough to say how big of an issue that seems.

The other interesting test is if file handles are being kept open. These would often show in memory but sometimes not if they are small enough.

On Linux you can use the `lsof` command, either on the process itself or probably just the whole system would work in this case.

`lsof | wc -l` will give you the whole system. See https://www.cyberciti.biz/tips/linux-procfs-file-descriptors.html for more details
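If `lsof` is not installed, or a per-process number is more useful than a system-wide line count, the entries under `/proc/<pid>/fd` can be counted directly. A minimal Python sketch, assuming Python 3 is available on the target and that the script has permission to read that directory; `count_open_fds` and its `proc_root` parameter are hypothetical names.

```python
import os


def count_open_fds(pid, proc_root="/proc"):
    """Count the entries in <proc_root>/<pid>/fd, one per open descriptor."""
    return len(os.listdir(os.path.join(proc_root, str(pid), "fd")))
```

Calling this repeatedly with the PID of the LabVIEW run-time process would show whether the count keeps climbing as files are created and closed.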

========

CLA and cRIO Fanatic

My writings on LabVIEW Development are at devs.wiresmithtech.com

03-12-2024 07:19 AM

Thanks for the tip James👍

The number of file handles is stable at 758 though so that is OK.

Most of the MemFree reduction is just Linux caching everything, I see now. I can get it more or less back to its starting point by dropping the cache:

`sudo sync; echo 3 > /proc/sys/vm/drop_caches`
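The two Meminfo dumps from the first post support this. A rough check in Python, using only values quoted there and treating Buffers + Cached + SReclaimable as the cache (a simplification, since Cached also includes the roughly 1 MB of Shmem):

```python
# Values in kB, copied from the two Meminfo dumps in the first post.
start = {"MemFree": 657132, "Buffers": 8644, "Cached": 121480, "SReclaimable": 18596}
later = {"MemFree": 8720, "Buffers": 26240, "Cached": 670276, "SReclaimable": 98472}


def cache_kb(m):
    """Approximate cache: buffers + page cache + reclaimable slab."""
    return m["Buffers"] + m["Cached"] + m["SReclaimable"]


free_drop = start["MemFree"] - later["MemFree"]
cache_growth = cache_kb(later) - cache_kb(start)

print(free_drop)                                 # 648412
print(cache_growth)                              # 646268
print(round(100 * cache_growth / free_drop, 1))  # 99.7
```

So about 99.7% of the drop in MemFree over the 24 hours shows up as cache growth, which fits with MemAvailable staying nearly constant.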

Why the file logging seems to be involved in the crash remains a mystery... 😟

06-11-2024 08:38 AM

Just as a follow-up: after months of testing we still have not figured out why this application crashes on Linux RT. It works fine when built in/for LabVIEW/Linux RT 2020, but on x64 targets (9030, 9041, 9053) the same source code will eventually crash in the 2022 versions of LabVIEW/Linux RT (the 9053 in just a day or two, while the 9041 might pass 40 days). For some reason the smaller ARM-based cRIO-9063 runs fine, though. The suspected file storing does not cause a crash on its own, but seems to seriously accelerate the crash if active.

For now we'll just have to stick with 2020 for this application. (With 2024Q3 we hope to at least be able to work with the code in a newer version and just build it on a 2020-machine when needed :-O.)