- Subscribe to RSS Feed

- Mark Topic as New

- Mark Topic as Read

- Float this Topic for Current User

- Bookmark

- Subscribe

- Mute

- Printer Friendly Page

LabVIEW Database Parameterized Query prepends array sizes to binary data

06-24-2022 07:04 PM - edited 06-24-2022 07:05 PM

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report to a Moderator

I'm storing and retrieving small images in a database using the database connectivity tool kit and parameterized stored procedures. The data type is set to 'Binary'.

When I send up a jpg and look at the bytes stored, 4 bytes are prepended - A little investigation determined it was the size of the byte array. Probing the wires showed me that it WAS in fact LabVIEW adding the size, in the "Set Parameter Value" function. In the file functions, you can choose to prepend the array size or not. But this functionality seems to have not been included in the "Set Parameter Value" function of the DB toolkit.

I tried [u8], binary string. I always get the array size added. So when I pull it down from the DB and attempt to open it as an img file, it will not. I have to chop off the first 4 bytes to get to the actual data. I would like to simply insert and select binary data 1:1, no modification. Can it be done?

06-27-2022 04:03 AM

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report to a Moderator

@jmerrill wrote:I tried [u8], binary string. I always get the array size added. So when I pull it down from the DB and attempt to open it as an img file, it will not. I have to chop off the first 4 bytes to get to the actual data. I would like to simply insert and select binary data 1:1, no modification. Can it be done?

It would help to see some code...

You can try to the conversion yourself. If you use Flatten to String, you have an option to add the size.

Of course, if whatever toolkit you use adds the size to that binary data, you're back to square 1.

07-11-2022 02:44 PM

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report to a Moderator

This is the LabVIEW Database Connectivity Toolkit.

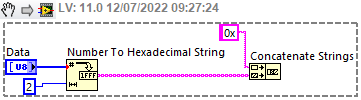

I can't show you the database connection, but this image gets the idea across. It's a parameterized query, the parameter is defined as binary. The data is currently [U8] and it gets prepended in "Set Parameter Value" (I do not see the added bytes up to that point.)

Of course, all the toolkit functions are locked so I cannot help troubleshoot this farther.

The toolkit should not prepend array size to binary parameters.

It seems this technique of parameterizing a call to a stored procedure will not work with binary data. I will try flattening and storing that way.

07-11-2022 05:35 PM

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report to a Moderator

That's a pretty ugly problem. I have no idea how to get around this.

(Mid-Level minion.)

My support system ensures that I don't look totally incompetent.

Proud to say that I've progressed beyond knowing just enough to be dangerous. I now know enough to know that I have no clue about anything at all.

Humble author of the CLAD Nugget.

07-12-2022 01:29 AM

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report to a Moderator

I don't know if you can use the parameterized query VIs for this, as LV flattens the data in the binary case and that includes the prepended length of the array. One option is to create a copy of the VIs, which doesn't do this, but I expect this might complicate you in the future, if NI changes the DB library to restrict access to the VIs.

Another option is to build an explicit binary string version of the data and pass that directly into the full query, rather than using a parameterized query. I'm assuming you're using SQL Server and the column type is defined as binary or varbinary, where I know this works. It might work differently in other cases. This would like something like CALL store_img (@image_in=0x01030498fg...). The 0x indicates this will be binary data and then each two chars is the hex value of the byte. This is easy enough to do in LV using Number to Hex String with a width of 2 and then wire the array into a concatenate primitive:

___________________

Try to take over the world!

07-12-2022 03:58 AM

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report to a Moderator

@billko wrote:

That's a pretty ugly problem. I have no idea how to get around this.

Seems like a bug to me. If I write binary data, I want that binary data in the database, not modified binary data.

Glad I have my own DB\SQL code...

LabSQL (jeffreytravis.com) worked well for me too, and is open source.

03-05-2023 10:50 AM

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report to a Moderator

Dear tst!

If storing it via a explicit binary in a full query string, can you read out the data again using the DB toolkit api, or do you need to do some custom'ness to get it back out?

I know I am just "screaming into the void" here, but how is it that the database toolkit from NI, still in 2023, have not fixed its binary data implementation and enforcing a silly AF 8kB (or 7996 bytes + "size prefix") limit?

I guess it is a sign that not many people interface and interact with databases using LabVIEW?

Instead of focusing on table design and architecture, I got to take a detour and think about how I can work around or fix low level basic functions for data base interactions. 😞

... but to be solution oriented, now that LV support Python to a fair extent, perhaps doing the db interactions via Python is a viable way to go?

-------------

CLD LabVIEW 7.1 to 2016

03-05-2023 04:04 PM - edited 03-05-2023 04:11 PM

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report to a Moderator

@QFang wrote:

If storing it via a explicit binary in a full query string, can you read out the data again using the DB toolkit api, or do you need to do some custom'ness to get it back out?

Of course. The writing has to match with the reading and if you do mess on the storage side with things, you have to mess on the query side too!

I guess it is a sign that not many people interface and interact with databases using LabVIEW?

More correctly there are about a dozen different database libraries out there that you can download, some using ODBC, some using ActiveX ADO or Database .Net (and being Windows only), some using database specific shared libraries and a few directly communicating with the database server through TCP/IP. A few of them are implemented as lean and mean interface around the underlying driver interface, others try to be the kill all solution for multiple database systems and some have just their own specific philosophy.

And then there are the many never released company internal variants that do their own thing too. I have two of them too, one is a ODBC based interface that works on Linux and Windows and the other is a class based interface that implements various sub interfaces to MS-SQL, MySQL/MariaDB, Oracle DB, MS Access and IBM DB2 based on an ADO interface low level interface and a possibility to plugin other low level implementations such as ODBC or direct TCP/IP communication.

Instead of focusing on table design and architecture, I got to take a detour and think about how I can work around or fix low level basic functions for data base interactions. 😞

BLOB data storage in databases is by definition fraught with many problems. Some databases don't like it at all, others do their own vodoo magic on data that can vary in result depending on which interface you use to access the data and every database has its own rules and limitations. It's always custom data by definition and as such there is simply no standard that you can violate, despite your feelings that there are.

03-06-2023 03:38 AM

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report to a Moderator

@QFang wrote:

If storing it via a explicit binary in a full query string, can you read out the data again using the DB toolkit api, or do you need to do some custom'ness to get it back out?

I think this is one of the rare cases where I might disagree with Rolf. The issue with writing is that the DB toolkit's parser prepends the size. On the reading size I assume this is not relevant, as you get an OLE variant anyway and you don't have to directly unflatten it into the original LV datatype.

That said, I'm not sure I can answer this from experience. In cases where I had to deal with this, I think it was just passing Unicode text to the DB and I don't remember offhand if I had to read it back. Even if I did, Unicode in LV is enough of a pain that it would probably require some work anyway.

@QFang wrote:

... but to be solution oriented, now that LV support Python to a fair extent, perhaps doing the db interactions via Python is a viable way to go?

As Rolf said, there are various DB APIs available. I suspect that LV to Python to DB would not be a great experience, but that's just a guess.

___________________

Try to take over the world!

03-06-2023 04:32 AM

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report to a Moderator

@tst wrote:

As Rolf said, there are various DB APIs available. I suspect that LV to Python to DB would not be a great experience, but that's just a guess.

Right! To paraphrase a popular meme:

I had a database access problem in my LabVIEW program. Because of that I replaced it with a Python module called through the Python node. Now I have at least two problems!

Python is not a magic tool that does make everything childs play. It is simply another programming language with its own specific challenges including a considerable performance problem for large data processing.