every n samples transferred from buffer

Solved! 12-04-2014 01:04 PM

Hello all,

I have a question about the Every N Samples Transferred from Buffer DAQmx event. Looking at the description of it and the very limited DevZones and KBs relating to it, I'm led to believe that the name is perfectly descriptive of what its behavior should be (i.e., every N samples transferred from the PC buffer into the DAQmx FIFO it should signal an event). However, when I put this into practice in an example, either I have something configured incorrectly (wouldn't be the first time) or I have a fundamental misunderstanding of either the event itself or of how DAQmx buffers work with regeneration (would definitely not be the first time).

In my example, I am outputting 10k samples at a 1 kHz sample rate -- thus 10 seconds of data. I've registered for the Every N Samples Transferred from Buffer event with a sampleInterval of 2000. I've changed my Data Transfer Request Condition to Onboard Memory Less than Full in the hope that it will continuously refill my buffer with the regenerated samples (from this link). My expectation was that after 2000 samples had been output by the device (i.e., 2 seconds elapse), leaving 2000 fewer items in the DMA FIFO, it would have transferred 2000 samples from the PC buffer to the DMA FIFO and the event would fire. In practice it is... not doing that. I have a counter on the event which shows that it fires 752 times almost immediately, then fires periodically after that in spurts of 4 or 5. I'm at a loss.

Could anyone please shed some light on this for me -- both on my misconception of what I'm supposed to be seeing if that's the case, and also explain why I'm seeing what I'm currently seeing?

LV 2013 (32-bit)

DAQmx 9.8.0f3

Network cDAQ chassis: 9184

cDAQ module: 9264

Thanks

12-04-2014 04:35 PM

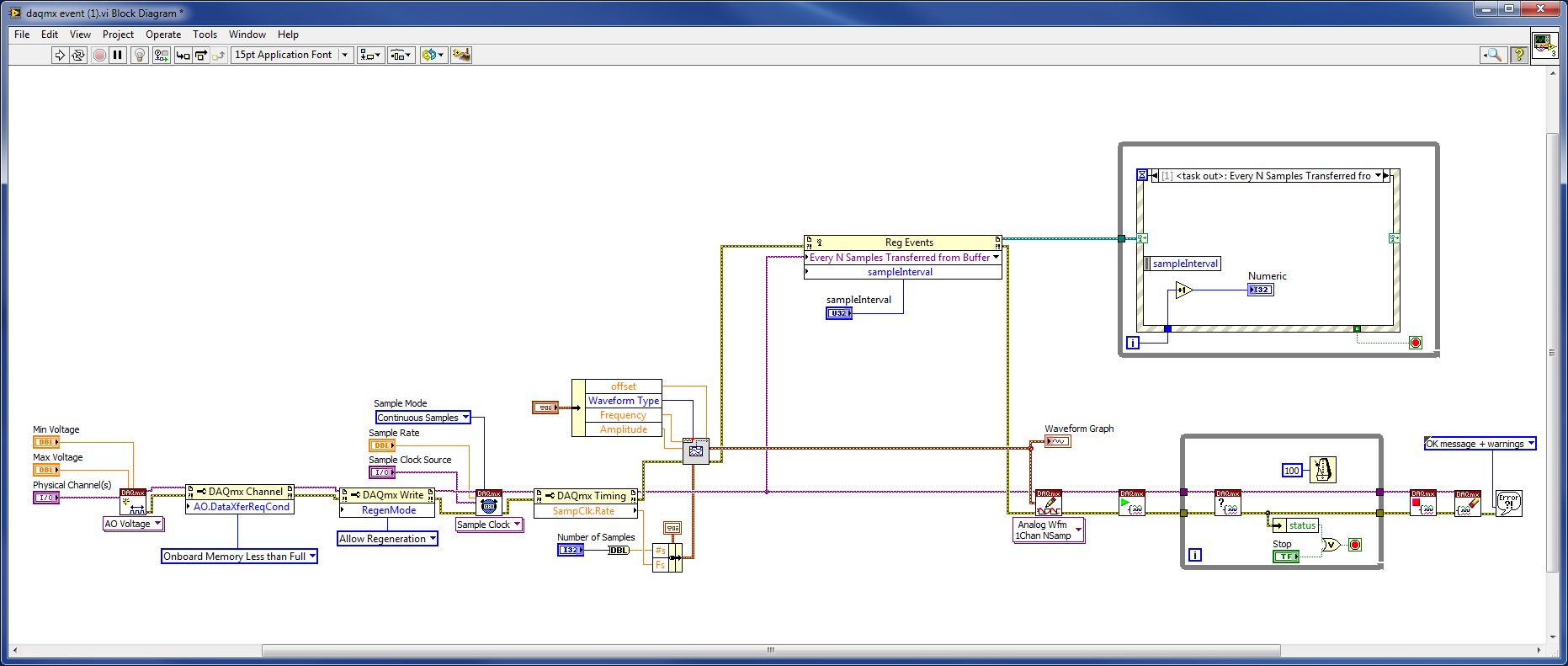

Not really sure why I can't edit my original post, but I just wanted to add a screenshot of the BD.

12-08-2014 12:52 PM

Anyone have any insight?

12-08-2014 01:58 PM

Hello Heiso,

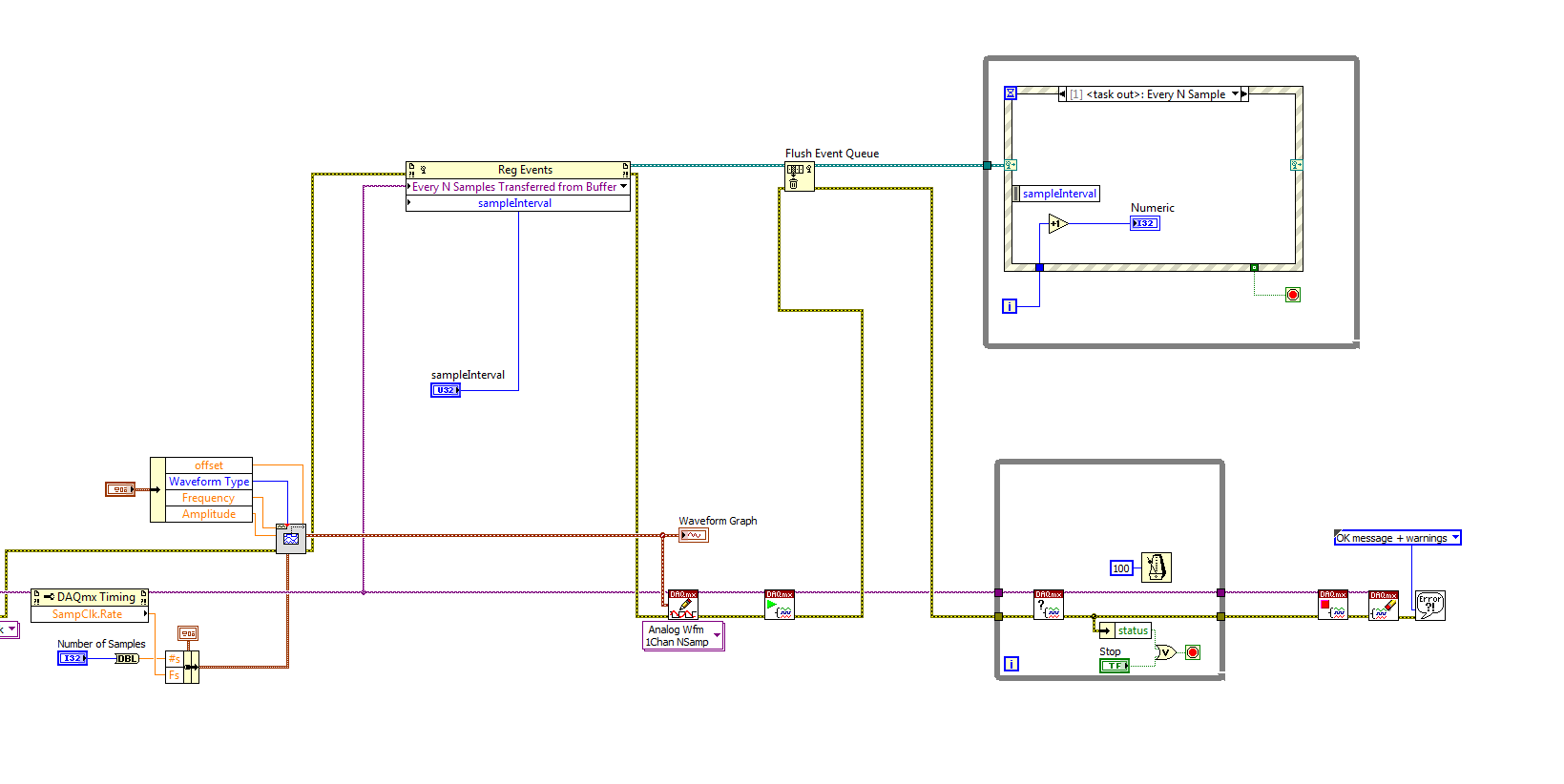

This behavior is probably due to the fact that the process needs to stabilize. It is therefore recommended to clear the event information each time the program runs, once the task is ready to start, so that any stale information is cleaned out of the event list. For this I would recommend using the Flush Event Queue function, as shown in the image.

12-08-2014 02:39 PM

There is a large (unspecced, but on the order of several MB) buffer on the 9184. This has come up a few times on the forum; here is a link to another discussion about it. Quoting myself:

Unfortunately, I don't know the size of this buffer on the 9184 off the top of my head and I don't think it is in the specifications (the buffer is also shared between multiple tasks). This is not the same thing as the 127 sample per slot AO buffer which is present on all cDAQ chassis--the ethernet/wireless chassis controller contains an additional buffer that isn't really mentioned as far as I can tell in the published specifications (apparently it's 12 MB on the wireless cDAQ chassis).

The large number of events fired when you start the task is the buffer being filled upon startup (if the on-board buffer is less than full, the driver will send more data--you end up with multiple periods of your output waveform in the on-board buffer). So in your case, the 752 events * 2000 samples/event * 2 bytes/sample = ~3 MB of buffer allocated to your AO task. I guess this seems reasonable (still would really like a spec or KB from NI... I'm not sure how the buffer size is chosen).
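The estimate above can be reproduced with a few lines of Python. This is only a back-of-the-envelope sketch: it assumes that every one of the 752 startup events corresponds to a full 2000 samples moved into the chassis buffer, at the 2 bytes/sample quoted in this post. The function name is made up for illustration.

```python
def buffered_bytes(events_at_start: int, samples_per_event: int,
                   bytes_per_sample: int = 2) -> int:
    """Bytes moved to the device during the initial burst of events."""
    return events_at_start * samples_per_event * bytes_per_sample


samples_queued = 752 * 2000            # samples sent before the bursts settle
total_bytes = buffered_bytes(752, 2000)

print(total_bytes)                     # 3008000 bytes, i.e. about 3 MB
print(samples_queued / 10_000)         # 150.4 copies of the 10k-sample waveform
print(samples_queued / 1_000)          # 1504.0 seconds of output at 1 kHz
```

Under those assumptions, roughly 150 periods of the 10k-sample waveform (about 25 minutes of output at 1 kHz) are queued on the chassis before the first sample you write afterwards would reach the output.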

The grouping of events is due to data being sent in packets to improve efficiency since there is overhead with each transfer.
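A toy model shows how packetized transfers could produce spurts of 4 or 5 events. The packet size of 9000 samples below is purely hypothetical, picked only because it reproduces the observed pattern; the real packet size used by the driver is not stated anywhere in this thread.

```python
from typing import List


def events_per_packet(packet_samples: int, interval: int,
                      n_packets: int) -> List[int]:
    """Count how many 'every N samples' events become due after each packet."""
    transferred = 0   # running total of samples sent to the device
    fired = 0         # events already signalled
    bursts = []
    for _ in range(n_packets):
        transferred += packet_samples
        due = transferred // interval   # events owed after this packet
        bursts.append(due - fired)
        fired = due
    return bursts


# Hypothetical 9000-sample packets with the sampleInterval of 2000 from the question.
print(events_per_packet(9000, 2000, 4))   # [4, 5, 4, 5]
```

The point is only that whenever the packet size is not a multiple of the event interval, the number of events per packet alternates between two adjacent values.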

The large buffer and grouping of data are optimizations used by NI to improve streaming throughput but can have some odd side-effects as you have seen. I might have some suggestions if you describe what it is you need to do.

Best Regards,

12-09-2014 07:43 AM

Thanks John, that clears up the behavior I was seeing. I was unaware that it would fill more than the bare minimum amount required of the buffer on the device with multiple periods of the same data, but it does seem like that would be more efficient when having regeneration enabled, so that makes sense.

High level, what I'm trying to do is output a very large data file with the best performance possible. Currently in my actual program I'm chunking the data and calling DAQmx Write every loop iteration with the new data, but it seems unnecessary to do that if I could just turn on regeneration but overwrite the buffer in chunks so that it's continuously pulling from memory instead of recalling DAQmx Write all the time. From what I've seen, this used to be the approach used when TDAQ was prevalent, and there were (perhaps? I'm unclear about this...) interrupt events you could register for when you'd pulled off half of the buffer and the last point of the buffer so that you could overwrite half of it while the other half was outputting. My current thought would be that I'd watch for when I'd pulled off some subset of data (e.g., 2000 of 10k samples), look at the current position in the buffer, and overwrite the 2k samples that I just output from the device. However, with the behavior I've seen from Every N Samples Transferred from Buffer, this may not be something I can do.

I've tried to do my due diligence in searching the forums and NI site, but I never really found anything useful. This post alludes to the half interrupts that I vaguely recall, but nothing about whether there's a substitute for them now -- just a comment that everything's been abstracted away. This post describes an example that could be useful, but it looks like this example was rewritten sometime between 2009 and DAQmx 9.8.

If you have any tips, I'd love to hear them. DAQmx is the reason I was on VisMo instead of High Speed.

Drew

12-09-2014 05:34 PM

If the data from the file is too large to fit into memory at 2 bytes / sample in the DAQmx output buffer (or if you want to reduce memory usage in general) then you should use non-regeneration and write smaller sections of the file continuously as the task is running. This keeps the DAQmx buffer small (the size of the first write by default) but the on-board FIFO will be filled as space is available so you shouldn't underflow. The downside of course is that you have to continuously read from your file and continuously write to the DAQ task.
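The read-a-chunk, write-a-chunk loop described above could look roughly like the following in a text language. This is a sketch, not DAQmx code: `write_chunk` is a hypothetical stand-in for whatever call writes to the task (DAQmx Write in LabVIEW), and the file is assumed to hold raw little-endian float64 samples, which may not match your format.

```python
import io
import struct
from typing import BinaryIO, Callable, Iterator, List


def read_chunks(stream: BinaryIO, samples_per_chunk: int) -> Iterator[List[float]]:
    """Yield lists of float64 samples until the stream is exhausted."""
    bytes_per_chunk = samples_per_chunk * 8
    while True:
        raw = stream.read(bytes_per_chunk)
        count = len(raw) // 8            # whole samples in this read
        if count == 0:
            return
        yield list(struct.unpack(f"<{count}d", raw[:count * 8]))


def stream_to_task(stream: BinaryIO, samples_per_chunk: int,
                   write_chunk: Callable[[List[float]], None]) -> int:
    """Hand each chunk to write_chunk (hypothetical DAQmx Write stand-in)."""
    total = 0
    for chunk in read_chunks(stream, samples_per_chunk):
        write_chunk(chunk)               # would block until buffer space is free
        total += len(chunk)
    return total


# Demo with an in-memory "file" of 10 samples and a list as the fake task.
fake_file = io.BytesIO(struct.pack("<10d", *[i * 0.1 for i in range(10)]))
written: List[List[float]] = []
total = stream_to_task(fake_file, 4, written.append)
print(total, [len(c) for c in written])   # 10 [4, 4, 2]
```

Only one chunk is held in application memory at a time, which is the memory saving this approach is meant to give.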

If memory usage isn't a problem, one option is to just write the whole buffer at once before the task starts. The data is stored in the DAQmx buffer as 2 bytes / sample, but assuming you're writing DBL values it will use 4x the memory in LabVIEW (5x intermittently after the DAQmx buffer is allocated but before the LabVIEW array is deallocated).
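To put numbers on the 4x and 5x figures, here is a small sketch using the 2 bytes/sample buffer size from this post and 8 bytes per DBL value. The sample count of 100 million is a made-up example, not something from this thread.

```python
def memory_estimate(samples: int, daqmx_bytes_per_sample: int = 2,
                    dbl_bytes: int = 8) -> dict:
    """Rough memory use when writing a whole DBL array to the task at once."""
    daqmx = samples * daqmx_bytes_per_sample   # DAQmx output buffer
    labview = samples * dbl_bytes              # DBL array in the application
    return {
        "daqmx_buffer": daqmx,
        "labview_array": labview,
        "peak": daqmx + labview,               # both allocated at the same time
    }


est = memory_estimate(100_000_000)             # hypothetical 100M-sample file
print(est["labview_array"] // est["daqmx_buffer"])   # 4
print(est["peak"] // est["daqmx_buffer"])            # 5
print(est["peak"] / 1e6)                             # 1000.0 MB at the peak
```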

It sounds like you want a combination of the above two ideas so that you limit your memory to what is allocated to the DAQmx buffer (plus your intermittent memory used while reading the smaller segments of data from the file) but you don't want to have to read from the file continuously as your data is being regenerated. The solution to that would be to set the DAQmx buffer size to however many samples are in your file, and then write the smaller chunks of data before starting the task (allowing regeneration). Once the data is read / written once, it will be in the DAQmx buffer and will be regenerated continuously without requiring any further file I/O or DAQmx writes.

Best Regards,

12-10-2014 07:30 AM

Thanks for shedding some light on this for me, John. My initial concern had been the potential for a couple of clock cycles of noise every time the For loop iterates, due to the overhead of calling DAQmx Write over and over. But it sounds like, since the task itself is already started, there shouldn't be much (if any) delay between outputting the previous chunk of data and the next chunk, as long as the next chunk is already available (i.e., read into memory and available as an input) the next time DAQmx Write is called. That concern is what led me to start exploring writing directly to the buffer instead, but it sounds like my current chunking strategy will work just fine. Thanks again for all your help.

Drew