View Ideas...

Labels

-

Data Portal

21 -

DataFinder

14 -

DataPlugins

7 -

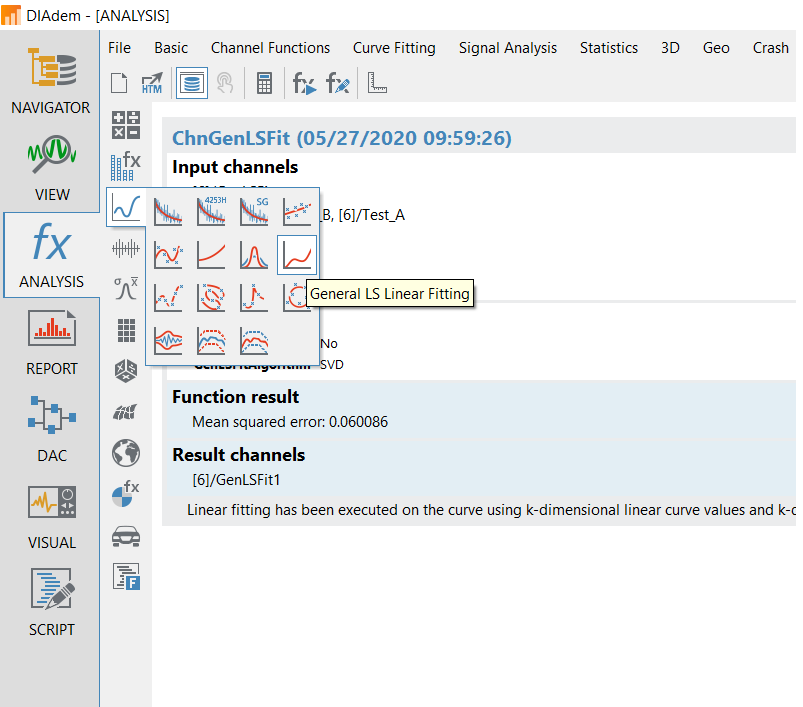

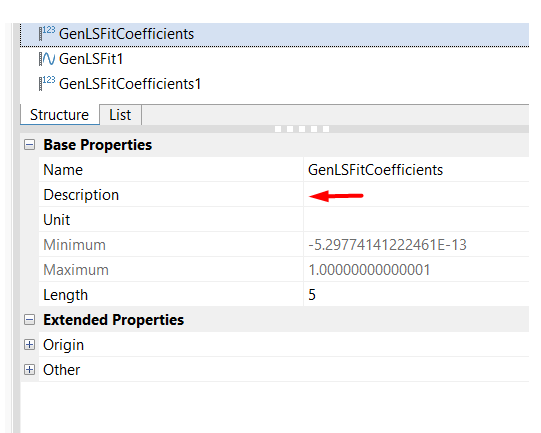

DIAdem ANALYSIS

29 -

DIAdem DAC & VISUAL

14 -

DIAdem NAVIGATOR

18 -

DIAdem REPORT

13 -

DIAdem SCRIPT

83 -

DIAdem VIEW

88 -

Documentation

4 -

Performance

14 -

Usability

79

Idea Statuses

- New 27

- Under Consideration 63

- In Development 0

- In Beta 0

- Completed 90

- Duplicate 1

- Declined 118

- Already Implemented 14


Showing ideas with label DIAdem ANALYSIS.


Status: New

Submitted by MMargaryan on 04-04-2024 04:27 AM


Status: Under Consideration

Submitted by nath05 on 06-04-2020 05:27 PM

1 Comment


Status: Under Consideration

Submitted by ATarman on 09-23-2019 09:53 AM

8 Comments


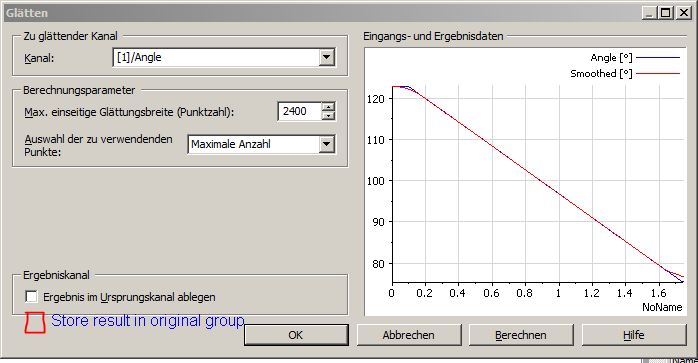

Many DIAdem ANALYSIS functions offer to store the result in the original channel. If this option is not chosen, the result goes into the 'default group'.